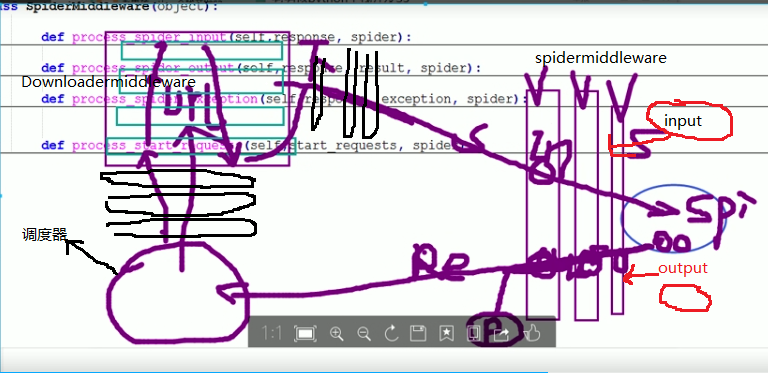

1. Downloader Middleware (DownMiddleware)

Three methods:

```python
process_request(self, request, spider)

process_response(self, request, response, spider)

process_exception(self, request, exception, spider)
```

Details:

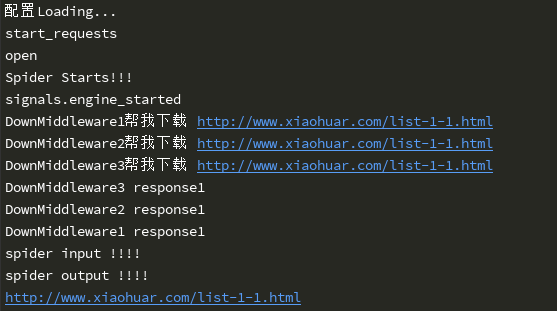

```python
class DownMiddleware1(object):
    # Step 1: runs first
    def process_request(self, request, spider):
        '''
        Called for every request about to be downloaded; the request passes through
        the process_request of every downloader middleware.
        :param request:
        :param spider:
        :return:
            None: continue with the next middleware and, eventually, the download;
            Response object: stop calling process_request and start calling process_response;
            Request object: stop the middleware chain and send the Request back to the scheduler;
            raise IgnoreRequest: stop calling process_request and start calling process_exception
        '''
        print('DownMiddleware1 downloading', request.url)

    # Step 2: runs second
    def process_response(self, request, response, spider):
        '''
        Called when the downloader returns a response, before it reaches the spider.
        :param request:
        :param response:
        :param spider:
        :return:
            Response object: passed on to the other middlewares' process_response;
            Request object: stop the middleware chain; the request is rescheduled for download;
            raise IgnoreRequest: Request.errback is called
        '''
        print('DownMiddleware1 response1')
        return response

    def process_exception(self, request, exception, spider):
        '''
        Called when a download handler or a process_request() (downloader middleware) raises an exception.
        :param request:
        :param exception:
        :param spider:
        :return:
            None: pass the exception on to the following middlewares;
            Response object: stop calling further process_exception methods (the process_response chain runs on it);
            Request object: stop the middleware chain; the request is rescheduled for download
        '''
        return None

class DownMiddleware2(object):
    def process_request(self, request, spider):
        '''
        Called for every request about to be downloaded; the request passes through
        the process_request of every downloader middleware.
        :param request:
        :param spider:
        :return:
            None: continue with the next middleware and, eventually, the download;
            Response object: stop calling process_request and start calling process_response;
            Request object: stop the middleware chain and send the Request back to the scheduler;
            raise IgnoreRequest: stop calling process_request and start calling process_exception
        '''
        print('DownMiddleware2 downloading', request.url)

    def process_response(self, request, response, spider):
        '''
        Called when the downloader returns a response, before it reaches the spider.
        :param request:
        :param response:
        :param spider:
        :return:
            Response object: passed on to the other middlewares' process_response;
            Request object: stop the middleware chain; the request is rescheduled for download;
            raise IgnoreRequest: Request.errback is called
        '''
        print('DownMiddleware2 response1')
        return response

    def process_exception(self, request, exception, spider):
        '''
        Called when a download handler or a process_request() (downloader middleware) raises an exception.
        :param request:
        :param exception:
        :param spider:
        :return:
            None: pass the exception on to the following middlewares;
            Response object: stop calling further process_exception methods (the process_response chain runs on it);
            Request object: stop the middleware chain; the request is rescheduled for download
        '''
        return None

class DownMiddleware3(object):
    def process_request(self, request, spider):
        '''
        Called for every request about to be downloaded; the request passes through
        the process_request of every downloader middleware.
        :param request:
        :param spider:
        :return:
            None: continue with the next middleware and, eventually, the download;
            Response object: stop calling process_request and start calling process_response;
            Request object: stop the middleware chain and send the Request back to the scheduler;
            raise IgnoreRequest: stop calling process_request and start calling process_exception
        '''
        print('DownMiddleware3 downloading', request.url)

    def process_response(self, request, response, spider):
        '''
        Called when the downloader returns a response, before it reaches the spider.
        :param request:
        :param response:
        :param spider:
        :return:
            Response object: passed on to the other middlewares' process_response;
            Request object: stop the middleware chain; the request is rescheduled for download;
            raise IgnoreRequest: Request.errback is called
        '''
        print('DownMiddleware3 response1')
        return response

    def process_exception(self, request, exception, spider):
        '''
        Called when a download handler or a process_request() (downloader middleware) raises an exception.
        :param request:
        :param exception:
        :param spider:
        :return:
            None: pass the exception on to the following middlewares;
            Response object: stop calling further process_exception methods (the process_response chain runs on it);
            Request object: stop the middleware chain; the request is rescheduled for download
        '''
        return None
```
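A common real-world use of `process_request` is to adjust the request and return `None` so downloading continues. The sketch below is a hypothetical `RandomProxyMiddleware` (not part of Scrapy or of this project) that sets `request.meta['proxy']`, the key Scrapy's built-in `HttpProxyMiddleware` reads. The proxy addresses are placeholders, and a `SimpleNamespace` stands in for a real `scrapy.Request` so the snippet runs without Scrapy installed.

```python
import random
from types import SimpleNamespace

# Placeholder proxies (hypothetical values): replace with real ones.
PROXIES = [
    'http://127.0.0.1:8001',
    'http://127.0.0.1:8002',
]


class RandomProxyMiddleware(object):
    """Hypothetical downloader middleware: picks a proxy for every request."""

    def __init__(self, proxies=None):
        self.proxies = list(proxies or PROXIES)

    def process_request(self, request, spider):
        # Only assign a proxy if the request does not already carry one.
        if 'proxy' not in request.meta:
            request.meta['proxy'] = random.choice(self.proxies)
        # Returning None lets the remaining middlewares and the downloader continue.
        return None


# Quick check with a stand-in object instead of a real scrapy.Request.
fake_request = SimpleNamespace(url='http://example.com', meta={})
mw = RandomProxyMiddleware()
result = mw.process_request(fake_request, spider=None)
print(result, fake_request.meta['proxy'] in PROXIES)  # None True
```

To use it, it would be registered in `DOWNLOADER_MIDDLEWARES` with a priority lower than `HttpProxyMiddleware` (750 in the default list), so the proxy is set before that middleware runs.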

```python
# Enable or disable downloader middlewares
# See https://doc.scrapy.org/en/latest/topics/downloader-middleware.html
DOWNLOADER_MIDDLEWARES = {
    'test002.middlewares.DownMiddleware1': 100,
    'test002.middlewares.DownMiddleware2': 200,
    'test002.middlewares.DownMiddleware3': 300,
}
```
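With these priorities, `process_request` runs in ascending order (100, 200, 300) and `process_response` in descending order (300, 200, 100), so the middleware with the lowest number sits closest to the engine. The helper below is a hypothetical, dependency-free sketch of that ordering: it sorts the dict by value (as Scrapy's `build_component_list` does) and then mirrors the `append` / `insert(0, ...)` calls made in `_add_middleware` in the manager's source.

```python
DOWNLOADER_MIDDLEWARES = {
    'test002.middlewares.DownMiddleware1': 100,
    'test002.middlewares.DownMiddleware2': 200,
    'test002.middlewares.DownMiddleware3': 300,
}


def call_orders(mw_settings):
    """Hypothetical helper: return the call order of process_request / process_response."""
    # Sort class paths by priority value, lowest first (closest to the engine).
    ordered = sorted(mw_settings, key=mw_settings.get)
    request_chain, response_chain = [], []
    for path in ordered:
        name = path.rsplit('.', 1)[-1]
        request_chain.append(name)       # like methods['process_request'].append(...)
        response_chain.insert(0, name)   # like methods['process_response'].insert(0, ...)
    return request_chain, response_chain


req, resp = call_orders(DOWNLOADER_MIDDLEWARES)
print('process_request :', req)   # ['DownMiddleware1', 'DownMiddleware2', 'DownMiddleware3']
print('process_response:', resp)  # ['DownMiddleware3', 'DownMiddleware2', 'DownMiddleware1']
```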

Execution result:

Source code:

# To view the source: from scrapy.core.downloader import middleware

```python
"""
Downloader Middleware manager

See documentation in docs/topics/downloader-middleware.rst
"""
import six

from twisted.internet import defer

from scrapy.http import Request, Response
from scrapy.middleware import MiddlewareManager
from scrapy.utils.defer import mustbe_deferred
from scrapy.utils.conf import build_component_list


class DownloaderMiddlewareManager(MiddlewareManager):

    component_name = 'downloader middleware'

    @classmethod
    def _get_mwlist_from_settings(cls, settings):
        return build_component_list(
            settings.getwithbase('DOWNLOADER_MIDDLEWARES'))

    def _add_middleware(self, mw):
        if hasattr(mw, 'process_request'):
            self.methods['process_request'].append(mw.process_request)
        if hasattr(mw, 'process_response'):
            self.methods['process_response'].insert(0, mw.process_response)
        if hasattr(mw, 'process_exception'):
            self.methods['process_exception'].insert(0, mw.process_exception)

    def download(self, download_func, request, spider):
        @defer.inlineCallbacks
        def process_request(request):
            for method in self.methods['process_request']:
                response = yield method(request=request, spider=spider)
                assert response is None or isinstance(response, (Response, Request)), \
                        'Middleware %s.process_request must return None, Response or Request, got %s' % \
                        (six.get_method_self(method).__class__.__name__, response.__class__.__name__)
                if response:
                    defer.returnValue(response)
            defer.returnValue((yield download_func(request=request,spider=spider)))

        @defer.inlineCallbacks
        def process_response(response):
            assert response is not None, 'Received None in process_response'
            if isinstance(response, Request):
                defer.returnValue(response)

            for method in self.methods['process_response']:
                response = yield method(request=request, response=response,
                                        spider=spider)
                assert isinstance(response, (Response, Request)), \
                    'Middleware %s.process_response must return Response or Request, got %s' % \
                    (six.get_method_self(method).__class__.__name__, type(response))
                if isinstance(response, Request):
                    defer.returnValue(response)
            defer.returnValue(response)

        @defer.inlineCallbacks
        def process_exception(_failure):
            exception = _failure.value
            for method in self.methods['process_exception']:
                response = yield method(request=request, exception=exception,
                                        spider=spider)
                assert response is None or isinstance(response, (Response, Request)), \
                    'Middleware %s.process_exception must return None, Response or Request, got %s' % \
                    (six.get_method_self(method).__class__.__name__, type(response))
                if response:
                    defer.returnValue(response)
            defer.returnValue(_failure)

        deferred = mustbe_deferred(process_request, request)
        deferred.addErrback(process_exception)
        deferred.addCallback(process_response)
        return deferred
```
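To see how the return values described in the docstrings play out, here is a synchronous, Twisted-free sketch of `download()`. It is a simplification written for illustration (the `Request`, `Response`, and `Tracer` classes are minimal stand-ins, not Scrapy's), but it follows the same rules: the first `process_request` that returns a non-`None` value stops the request chain and skips the download, `process_exception` handlers are tried in reverse order, and every `process_response` still runs on the returned response unless one of them returns a `Request`.

```python
class Request(object):
    """Minimal stand-in for scrapy.http.Request."""
    def __init__(self, url):
        self.url = url


class Response(object):
    """Minimal stand-in for scrapy.http.Response."""
    def __init__(self, url, body=''):
        self.url = url
        self.body = body


def download(middlewares, download_func, request, spider):
    """Synchronous sketch of DownloaderMiddlewareManager.download (no Twisted)."""
    # Same ordering as _add_middleware: append for requests, insert(0) for the rest.
    req_methods, resp_methods, exc_methods = [], [], []
    for mw in middlewares:
        if hasattr(mw, 'process_request'):
            req_methods.append(mw.process_request)
        if hasattr(mw, 'process_response'):
            resp_methods.insert(0, mw.process_response)
        if hasattr(mw, 'process_exception'):
            exc_methods.insert(0, mw.process_exception)

    try:
        for method in req_methods:
            response = method(request=request, spider=spider)
            if response is not None:   # a Response or Request short-circuits the chain
                break
        else:
            response = download_func(request=request, spider=spider)
    except Exception as exc:
        for method in exc_methods:
            response = method(request=request, exception=exc, spider=spider)
            if response is not None:
                break
        else:
            raise                      # no handler took it: propagate the error

    if isinstance(response, Request):  # a Request goes back to the scheduler untouched
        return response
    for method in resp_methods:
        response = method(request=request, response=response, spider=spider)
        if isinstance(response, Request):
            return response
    return response


class Tracer(object):
    """Hypothetical middleware that logs each hook; optionally answers requests itself."""
    def __init__(self, name, log, short_circuit=False):
        self.name = name
        self.log = log
        self.short_circuit = short_circuit

    def process_request(self, request, spider):
        self.log.append(self.name + '.process_request')
        if self.short_circuit:
            return Response(request.url, body='from ' + self.name)
        return None

    def process_response(self, request, response, spider):
        self.log.append(self.name + '.process_response')
        return response


log = []


def fake_download(request, spider):
    log.append('download')
    return Response(request.url, body='downloaded')


mws = [Tracer('MW1', log), Tracer('MW2', log, short_circuit=True), Tracer('MW3', log)]
resp = download(mws, fake_download, Request('http://example.com'), spider=None)
print(resp.body)  # from MW2
print(log)
# ['MW1.process_request', 'MW2.process_request',
#  'MW3.process_response', 'MW2.process_response', 'MW1.process_response']
```

`MW3.process_response` still runs even though `MW3.process_request` was never called. This matches the real manager, whose `process_response` callback loops over every installed middleware.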

Default downloader middlewares (these `scrapy.contrib` paths come from older Scrapy releases; since Scrapy 1.0 the package is `scrapy.downloadermiddlewares`, and the exact list and priorities for your version are in the `DOWNLOADER_MIDDLEWARES_BASE` setting):

```python
# Default downloader middlewares
{
    'scrapy.contrib.downloadermiddleware.robotstxt.RobotsTxtMiddleware': 100,
    'scrapy.contrib.downloadermiddleware.httpauth.HttpAuthMiddleware': 300,
    'scrapy.contrib.downloadermiddleware.downloadtimeout.DownloadTimeoutMiddleware': 350,
    'scrapy.contrib.downloadermiddleware.useragent.UserAgentMiddleware': 400,
    'scrapy.contrib.downloadermiddleware.retry.RetryMiddleware': 500,
    'scrapy.contrib.downloadermiddleware.defaultheaders.DefaultHeadersMiddleware': 550,
    'scrapy.contrib.downloadermiddleware.redirect.MetaRefreshMiddleware': 580,
    'scrapy.contrib.downloadermiddleware.httpcompression.HttpCompressionMiddleware': 590,
    'scrapy.contrib.downloadermiddleware.redirect.RedirectMiddleware': 600,
    'scrapy.contrib.downloadermiddleware.cookies.CookiesMiddleware': 700,
    'scrapy.contrib.downloadermiddleware.httpproxy.HttpProxyMiddleware': 750,
    'scrapy.contrib.downloadermiddleware.chunked.ChunkedTransferMiddleware': 830,
    'scrapy.contrib.downloadermiddleware.stats.DownloaderStats': 850,
    'scrapy.contrib.downloadermiddleware.httpcache.HttpCacheMiddleware': 900,
}
```
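The project's `DOWNLOADER_MIDDLEWARES` is merged on top of this default dict (`settings.getwithbase('DOWNLOADER_MIDDLEWARES')` in the manager's source combines `DOWNLOADER_MIDDLEWARES_BASE` with the project value), and entries whose value is `None` are dropped before sorting. Setting a built-in class to `None` is therefore how you disable it. The function below is a simplified, hypothetical stand-in for that merge-and-sort step, using three entries from the list above.

```python
# Three entries copied from the default list above (old scrapy.contrib paths).
BASE = {
    'scrapy.contrib.downloadermiddleware.useragent.UserAgentMiddleware': 400,
    'scrapy.contrib.downloadermiddleware.retry.RetryMiddleware': 500,
    'scrapy.contrib.downloadermiddleware.httpproxy.HttpProxyMiddleware': 750,
}

# Project settings: add our own middleware and disable the built-in UserAgentMiddleware.
CUSTOM = {
    'test002.middlewares.DownMiddleware1': 100,
    'scrapy.contrib.downloadermiddleware.useragent.UserAgentMiddleware': None,
}


def build_order(base, custom):
    """Simplified stand-in for getwithbase() + build_component_list()."""
    merged = dict(base)
    merged.update(custom)  # project values override the defaults
    enabled = {path: prio for path, prio in merged.items() if prio is not None}
    return sorted(enabled, key=enabled.get)  # lowest priority value first


order = build_order(BASE, CUSTOM)
for path in order:
    print(path)
# test002.middlewares.DownMiddleware1
# scrapy.contrib.downloadermiddleware.retry.RetryMiddleware
# scrapy.contrib.downloadermiddleware.httpproxy.HttpProxyMiddleware
```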

2. Spider Middleware (SpiderMiddleware)

Four methods:

```python
process_spider_input(self, response, spider)

process_spider_output(self, response, result, spider)

process_spider_exception(self, response, exception, spider)

process_start_requests(self, start_requests, spider)
```

Details:

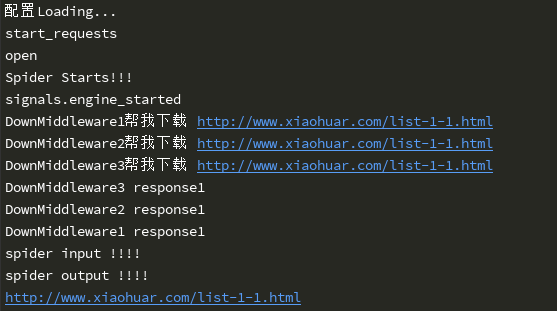

```python
class SpiderMiddleware(object):

    def process_spider_input(self, response, spider):
        '''
        Called after the downloader middlewares have finished, before the response
        is handed to parse. -> Note: runs before parse.
        :param response:
        :param spider:
        :return: None, or raise an exception
        '''
        print("spider input !!!!")

    def process_spider_output(self, response, result, spider):
        '''
        Called with what the spider returns after processing the response,
        i.e. the results yielded by the parse method.
        :param response:
        :param result:
        :param spider:
        :return: must return an iterable of Request or Item objects
        '''
        print("spider output !!!!")
        return result

    def process_spider_exception(self, response, exception, spider):
        '''
        Called when the spider callback or a process_spider_input() raises an exception.
        :param response:
        :param exception:
        :param spider:
        :return: None: pass the exception on to the following middlewares;
                 an iterable of Request or Item objects: sent on to the scheduler or the item pipeline
        '''
        return None

    def process_start_requests(self, start_requests, spider):
        '''
        Called when the spider starts.
        :param start_requests:
        :param spider:
        :return: an iterable of Request objects
        '''
        print("start_requests")
        return start_requests  # the very first requests: built from start_urls by the spider's start_requests()
```

```python
# Enable or disable spider middlewares
# See https://doc.scrapy.org/en/latest/topics/spider-middleware.html
SPIDER_MIDDLEWARES = {
    'test002.middlewares.SpiderMiddleware': 100,
}
```
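As an example of `process_spider_output`, the sketch below is a hypothetical middleware (not part of Scrapy) that drops dict items missing a `title` field and lets everything else through. Written as a generator, it returns an iterable as required and processes results lazily. The field name is an assumption, and a plain string stands in for a `scrapy.Request` so the snippet runs on its own.

```python
class DropIncompleteItemsMiddleware(object):
    """Hypothetical spider middleware: drops dict items that lack a 'title' field."""

    required_field = 'title'  # assumption: example field name

    def process_spider_output(self, response, result, spider):
        # result is the iterable produced by the spider callback (e.g. parse).
        for obj in result:
            if isinstance(obj, dict) and not obj.get(self.required_field):
                continue  # skip incomplete items
            yield obj     # requests and complete items pass through


def fake_parse():
    yield {'title': 'Hello'}
    yield {'title': ''}            # incomplete: will be dropped
    yield 'request-placeholder'    # stand-in for a scrapy.Request


mw = DropIncompleteItemsMiddleware()
print(list(mw.process_spider_output(response=None, result=fake_parse(), spider=None)))
# [{'title': 'Hello'}, 'request-placeholder']
```

It would be enabled the same way as the class above, by adding its path to `SPIDER_MIDDLEWARES`.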

Execution result:

Source code:

# To view the source: from scrapy.core import spidermw

```python
"""
Spider Middleware manager

See documentation in docs/topics/spider-middleware.rst
"""
import six
from twisted.python.failure import Failure
from scrapy.middleware import MiddlewareManager
from scrapy.utils.defer import mustbe_deferred
from scrapy.utils.conf import build_component_list

def _isiterable(possible_iterator):
    return hasattr(possible_iterator, '__iter__')

class SpiderMiddlewareManager(MiddlewareManager):

    component_name = 'spider middleware'

    @classmethod
    def _get_mwlist_from_settings(cls, settings):
        return build_component_list(settings.getwithbase('SPIDER_MIDDLEWARES'))

    def _add_middleware(self, mw):
        super(SpiderMiddlewareManager, self)._add_middleware(mw)
        if hasattr(mw, 'process_spider_input'):
            self.methods['process_spider_input'].append(mw.process_spider_input)
        if hasattr(mw, 'process_spider_output'):
            self.methods['process_spider_output'].insert(0, mw.process_spider_output)
        if hasattr(mw, 'process_spider_exception'):
            self.methods['process_spider_exception'].insert(0, mw.process_spider_exception)
        if hasattr(mw, 'process_start_requests'):
            self.methods['process_start_requests'].insert(0, mw.process_start_requests)

    def scrape_response(self, scrape_func, response, request, spider):
        fname = lambda f:'%s.%s' % (
                six.get_method_self(f).__class__.__name__,
                six.get_method_function(f).__name__)

        def process_spider_input(response):
            for method in self.methods['process_spider_input']:
                try:
                    result = method(response=response, spider=spider)
                    assert result is None, \
                            'Middleware %s must returns None or ' \
                            'raise an exception, got %s ' \
                            % (fname(method), type(result))
                except:
                    return scrape_func(Failure(), request, spider)
            return scrape_func(response, request, spider)

        def process_spider_exception(_failure):
            exception = _failure.value
            for method in self.methods['process_spider_exception']:
                result = method(response=response, exception=exception, spider=spider)
                assert result is None or _isiterable(result), \
                    'Middleware %s must returns None, or an iterable object, got %s ' % \
                    (fname(method), type(result))
                if result is not None:
                    return result
            return _failure

        def process_spider_output(result):
            for method in self.methods['process_spider_output']:
                result = method(response=response, result=result, spider=spider)
                assert _isiterable(result), \
                    'Middleware %s must returns an iterable object, got %s ' % \
                    (fname(method), type(result))
            return result

        dfd = mustbe_deferred(process_spider_input, response)
        dfd.addErrback(process_spider_exception)
        dfd.addCallback(process_spider_output)
        return dfd

    def process_start_requests(self, start_requests, spider):
        return self._process_chain('process_start_requests', start_requests, spider)
```
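The spider middleware manager calls `process_spider_input` in ascending priority order (`append`) and `process_spider_output` in descending order (`insert(0, ...)`), with the spider callback in between. The following synchronous sketch reproduces only that ordering; it leaves out Twisted and the exception branch, and `Tagger`, `parse`, and `scrape_response` here are illustrative stand-ins, not Scrapy APIs.

```python
log = []


class Tagger(object):
    """Hypothetical spider middleware that only records when its hooks run."""
    def __init__(self, name):
        self.name = name

    def process_spider_input(self, response, spider):
        log.append(self.name + '.input')
        return None  # must be None (or raise)

    def process_spider_output(self, response, result, spider):
        log.append(self.name + '.output')
        return result  # must be an iterable


def scrape_response(middlewares, callback, response, spider=None):
    """Synchronous sketch of SpiderMiddlewareManager.scrape_response (no exception branch)."""
    input_chain, output_chain = [], []
    for mw in middlewares:  # assumed to be sorted by priority already
        input_chain.append(mw.process_spider_input)
        output_chain.insert(0, mw.process_spider_output)

    for method in input_chain:
        result = method(response=response, spider=spider)
        assert result is None, 'process_spider_input must return None'
    result = callback(response)
    for method in output_chain:
        result = method(response=response, result=result, spider=spider)
    return list(result)


def parse(response):
    # Returns a list so the log order is easy to follow; a generator callback
    # would only run when the final iterable is consumed.
    log.append('parse')
    return [{'url': response}]


items = scrape_response([Tagger('SM100'), Tagger('SM200')], parse, 'http://example.com')
print(log)    # ['SM100.input', 'SM200.input', 'parse', 'SM200.output', 'SM100.output']
print(items)  # [{'url': 'http://example.com'}]
```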

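Default spider middlewares (again with the old `scrapy.contrib` paths; since Scrapy 1.0 the package is `scrapy.spidermiddlewares`, and the list for your version is in the `SPIDER_MIDDLEWARES_BASE` setting):

```python
# Built-in spider middlewares
{
    'scrapy.contrib.spidermiddleware.httperror.HttpErrorMiddleware': 50,
    'scrapy.contrib.spidermiddleware.offsite.OffsiteMiddleware': 500,
    'scrapy.contrib.spidermiddleware.referer.RefererMiddleware': 700,
    'scrapy.contrib.spidermiddleware.urllength.UrlLengthMiddleware': 800,
    'scrapy.contrib.spidermiddleware.depth.DepthMiddleware': 900,
}
```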

Summary: