1、 Python中torch.multiprocessing.get_start_method方法

需要导入

# 多进程编程

import torch.multiprocessing as mp

def init_dist(backend='nccl', **kwargs): """initialization for distributed training""" # spawn 启动进程 if mp.get_start_method(allow_none=True) != 'spawn': mp.set_start_method('spawn') rank = int(os.environ['RANK']) num_gpus = torch.cuda.device_count() torch.cuda.set_device(rank % num_gpus) dist.init_process_group(backend=backend, **kwargs)

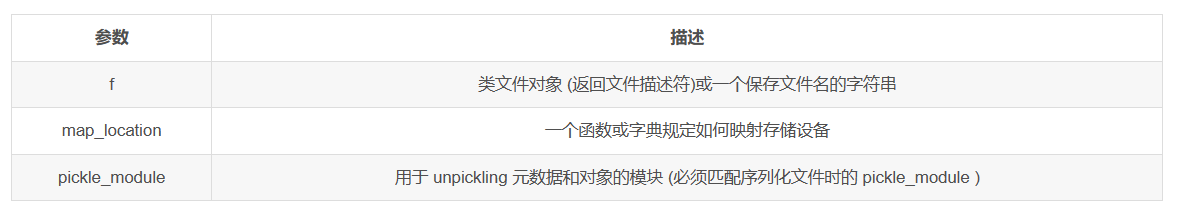

2、torch.load(f, map_location=None, pickle_module=<module 'pickle' from '...'>) 用来加载模型

大佬链接:Pytorch:模型的保存与加载 torch.save()、torch.load()、torch.nn.Module.load_state_dict()_宁静致远*的博客-CSDN博客

torch.load('tensors.pt') # Load all tensors onto the CPU torch.load('tensors.pt', map_location=torch.device('cpu')) # Load all tensors onto the CPU, using a function torch.load('tensors.pt', map_location=lambda storage, loc: storage) # Load all tensors onto GPU 1 torch.load('tensors.pt', map_location=lambda storage, loc: storage.cuda(1)) # Map tensors from GPU 1 to GPU 0 torch.load('tensors.pt', map_location={'cuda:1':'cuda:0'}) # Load tensor from io.BytesIO object with open('tensor.pt') as f: buffer = io.BytesIO(f.read()) torch.load(buffer)

2、为什么会执行到forward

大佬链接: https://zhuanlan.zhihu.com/p/366461413

3、# 使用lambda表达式,一步实现。 # 冒号左边是原函数参数; # 冒号右边是原函数返回值;

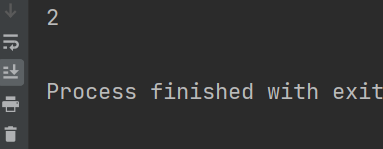

def main(): a = lambda x:x+1 print(a(1)) if __name__ == '__main__': main()

输出:

x.numel()获取tensor中一共包含多少个元素

lambda x: x.numel()

# 使用lambda表达式,一步实现。 # 冒号左边是原函数参数; # 冒号右边是原函数返回值; >>> a = lambda x:x+1 >>> a(1) 2

4、PixelShuffle层做的事情就是将输入feature map像素重组输出高分辨率的feature map,是一种上采样方法

大佬链接:https://blog.csdn.net/MR_kdcon/article/details/123967262

# PixelShuffle(2) 输入的是上采样的倍率 self.pixel_shuffle = nn.PixelShuffle(2)

5、大佬链接: https://blog.csdn.net/MR_kdcon/article/details/123967262

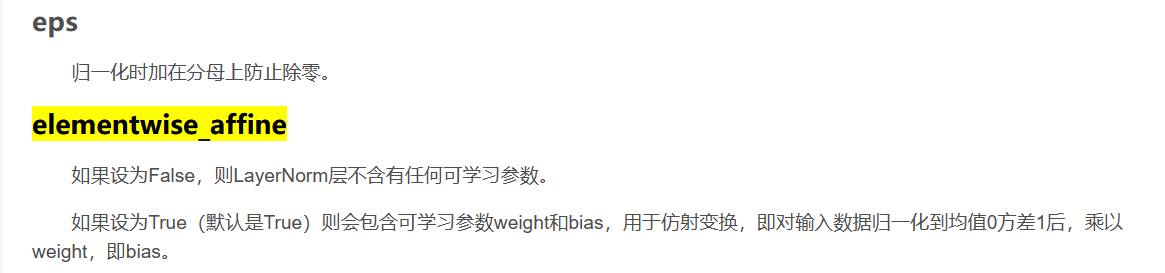

LayerNorm((1024,), eps=1e-06, elementwise_affine=True)