Hive version: 1.2.1

MySQL version: 5.6.24

1. Extract Hive

tar -zxf apache-hive-1.2.1-bin.tar.gz -C ../app/

cd ../app/

ln -s apache-hive-1.2.1-bin hive
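Running `ln -s` a second time when `hive` already exists does not replace the link; it creates a new link inside the directory the old one points to. The sketch below shows one way to make the step repeatable. `link_release` is a hypothetical helper name, and the demo runs in a scratch directory rather than under /hadoop/app.

```shell
#!/usr/bin/env bash
# link_release APP_DIR RELEASE_DIR LINK_NAME
# Point LINK_NAME at RELEASE_DIR inside APP_DIR, replacing an older link if present.
link_release() {
  local app_dir="$1" release="$2" link="$3"
  [ -d "$app_dir/$release" ] || { echo "missing $app_dir/$release" >&2; return 1; }
  # -n stops ln from following an existing link; -f replaces it
  ( cd "$app_dir" && ln -sfn "$release" "$link" )
}

# Demo in a scratch directory so nothing under /hadoop is touched.
demo_app="$(mktemp -d)"
mkdir "$demo_app/apache-hive-1.2.1-bin"
link_release "$demo_app" apache-hive-1.2.1-bin hive
link_release "$demo_app" apache-hive-1.2.1-bin hive   # safe to repeat
readlink "$demo_app/hive"
```

On the real host the call would presumably be `link_release /hadoop/app apache-hive-1.2.1-bin hive`.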

2. Install MySQL

Note: see "Linux安装MySQL_5.6 - Watcher123 - 博客园 (cnblogs.com)".

Extract the installation package:

tar -zxf mysql-5.6.24-linux-glibc2.5-x86_64.tar.gz -C ../app/

Install the dependency needed for initialization:

yum -y install autoconf

Create the symlink, enter the MySQL directory, and initialize the data directory:

cd ../app

ln -s mysql-5.6.24-linux-glibc2.5-x86_64 mysql

cd mysql

./scripts/mysql_install_db --user=hadoop --basedir=/hadoop/app/mysql --datadir=/hadoop/app/mysql/data

    ......
    OK

    To start mysqld at boot time you have to copy
    support-files/mysql.server to the right place for your system

    PLEASE REMEMBER TO SET A PASSWORD FOR THE MySQL root USER !
    To do so, start the server, then issue the following commands:

      /hadoop/app/mysql/bin/mysqladmin -u root password 'new-password'
      /hadoop/app/mysql/bin/mysqladmin -u root -h node1 password 'new-password'

    Alternatively you can run:

      /hadoop/app/mysql/bin/mysql_secure_installation

    which will also give you the option of removing the test
    databases and anonymous user created by default. This is
    strongly recommended for production servers.

    See the manual for more instructions.

    You can start the MySQL daemon with:

      cd . ; /hadoop/app/mysql/bin/mysqld_safe &

    You can test the MySQL daemon with mysql-test-run.pl

      cd mysql-test ; perl mysql-test-run.pl

    Please report any problems at http://bugs.mysql.com/

    The latest information about MySQL is available on the web at

      http://www.mysql.com

    Support MySQL by buying support/licenses at http://shop.mysql.com

    New default config file was created as /hadoop/app/mysql/my.cnf and
    will be used by default by the server when you start it.
    You may edit this file to change server settings

    WARNING: Default config file /etc/my.cnf exists on the system
    This file will be read by default by the MySQL server
    If you do not want to use this, either remove it, or use the
    --defaults-file argument to mysqld_safe when starting the server

    [hadoop@node1 mysql]$ echo $?
    0

Edit the configuration file:

sudo vim /etc/my.cnf

    [mysqld]
    datadir=/hadoop/app/mysql/data
    socket=/hadoop/app/mysql/data/mysql.sock
    # Disabling symbolic-links is recommended to prevent assorted security risks
    symbolic-links=0
    # Settings user and group are ignored when systemd is used.
    # If you need to run mysqld under a different user or group,
    # customize your systemd unit file for mariadb according to the
    # instructions in http://fedoraproject.org/wiki/Systemd
    user=hadoop

    [mysqld_safe]
    log-error=/hadoop/app/mysql/data/error.log
    pid-file=/hadoop/app/mysql/data/mysql.pid

    #
    # include all files from the config directory
    #
    !includedir /etc/my.cnf.d

Copy the startup script to /etc/init.d/ and set basedir and datadir in it:

cp support-files/mysql.server /etc/init.d/mysql

vim /etc/init.d/mysql

    basedir=/hadoop/app/mysql
    datadir=/hadoop/app/mysql/data

Start MySQL:

service mysql start

    Starting MySQL.. SUCCESS!

Configure environment variables:

vim /etc/profile

    export MYSQL_HOME=/hadoop/app/mysql
    export PATH=$PATH:$JAVA_HOME/bin:$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$MYSQL_HOME/bin

source /etc/profile

Set the MySQL root password (-S specifies the socket file to connect to):

mysqladmin -u root password '123' -S app/mysql/data/mysql.sock

Allow root to connect from other hosts:

mysql -uroot -p123 -S app/mysql/data/mysql.sock

    mysql> grant all privileges on *.* to 'root'@'%' identified by '123' with grant option;
    Query OK, 0 rows affected (0.00 sec)

    mysql> flush privileges;
    Query OK, 0 rows affected (0.00 sec)
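The `-S` argument has to match the `socket` setting in my.cnf, and the relative path used here only resolves from /hadoop. As a sketch, the path can be read from the configuration file instead of being typed out. `cnf_get` is a hypothetical helper, and it assumes `key=value` lines with no spaces around `=`, as in the file in this walkthrough.

```shell
#!/usr/bin/env bash
# cnf_get FILE SECTION KEY
# Print the value of KEY from [SECTION] of an ini-style file (first match wins).
cnf_get() {
  awk -F= -v section="[$2]" -v key="$3" '
    /^\[/ { in_section = ($0 == section); next }   # a new section header starts
    in_section && $1 == key { print $2; exit }     # key found in the wanted section
  ' "$1"
}

# Demo against a scratch copy of the relevant lines, so /etc/my.cnf is not required.
demo_cnf="$(mktemp)"
cat > "$demo_cnf" <<'EOF'
[mysqld]
datadir=/hadoop/app/mysql/data
socket=/hadoop/app/mysql/data/mysql.sock

[mysqld_safe]
log-error=/hadoop/app/mysql/data/error.log
EOF

cnf_get "$demo_cnf" mysqld socket
```

With the real file this could be used as `mysql -uroot -p -S "$(cnf_get /etc/my.cnf mysqld socket)"`, which works from any directory.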

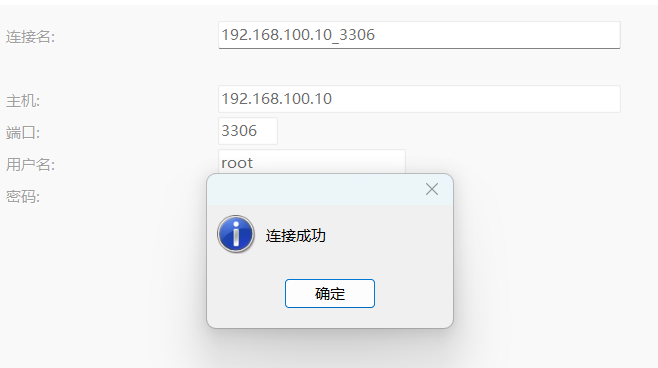

Test the connection with Navicat.

3. Configure Hive

Place mysql-connector-java-5.1.27-bin.jar in hive/lib.

Go to hive/conf and create hive-site.xml:

touch hive-site.xml

vim hive-site.xml

<configuration>

<property>

<name>javax.jdo.option.ConnectionURL</name>

<value>jdbc:mysql://node1:3306/hive?createDatabaseIfNotExist=true</value>

<description>JDBC connect string for a JDBC metastore</description>

</property>

<property>

<name>javax.jdo.option.ConnectionDriverName</name>

<value>com.mysql.jdbc.Driver</value>

<description>Driver class name for a JDBC metastore</description>

</property>

<property>

<name>javax.jdo.option.ConnectionUserName</name>

<value>hive</value>

<description>username to use against metastore database</description>

</property>

<property>

<name>javax.jdo.option.ConnectionPassword</name>

<value>123</value>

<description>password to use against metastore database</description>

</property>

</configuration>
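The configuration connects as the MySQL user `hive`, while the MySQL steps earlier only set up `root`. If no `hive` account exists yet, something like the following sketch could create one. The user name, password, and database name are copied from hive-site.xml. The host pattern `'%'` mirrors the earlier root grant and is an assumption: the default anonymous accounts can take precedence for connections from the local host, so the pattern may need adjusting. `metastore_grant_sql` is a hypothetical helper that only prints the statements.

```shell
#!/usr/bin/env bash
# metastore_grant_sql USER PASSWORD DATABASE
# Print MySQL 5.6 statements granting USER full access to DATABASE.
# Assumption: the account does not exist yet and may connect from any host ('%').
metastore_grant_sql() {
  local user="$1" password="$2" database="$3"
  cat <<EOF
GRANT ALL PRIVILEGES ON ${database}.* TO '${user}'@'%' IDENTIFIED BY '${password}';
FLUSH PRIVILEGES;
EOF
}

# Values taken from hive-site.xml in this walkthrough.
metastore_grant_sql hive 123 hive
```

To apply the statements, the output can be piped into the client used earlier, for example `metastore_grant_sql hive 123 hive | mysql -uroot -p123 -S app/mysql/data/mysql.sock`.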

Configure environment variables:

vim /etc/profile

export HIVE_HOME=/hadoop/app/hive

export PATH=$PATH:$JAVA_HOME/bin:$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$MYSQL_HOME/bin:$HIVE_HOME/bin
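Because the export line builds on the existing `$PATH`, every `source /etc/profile` appends the same directories again. A minimal sketch of a guard against that is shown below; `path_append` is a hypothetical helper, not something Hive or Hadoop provides.

```shell
#!/usr/bin/env bash
# path_append DIR: add DIR to PATH only when it is not already listed.
path_append() {
  case ":$PATH:" in
    *":$1:"*) ;;                  # already present, leave PATH unchanged
    *) PATH="$PATH:$1" ;;
  esac
}

HIVE_HOME=/hadoop/app/hive
path_append "$HIVE_HOME/bin"
path_append "$HIVE_HOME/bin"      # second call is a no-op
export HIVE_HOME PATH
```

The same helper could be applied to the Hadoop and MySQL directories in place of the single long export line.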

Start the Hive client:

[hadoop@node1 ~]$ hive

Logging initialized using configuration in jar:file:/hadoop/app/apache-hive-1.2.1-bin/lib/hive-common-1.2.1.jar!/hive-log4j.properties

hive>
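Reaching the `hive>` prompt shows that the client starts; running one statement also exercises the metastore connection. The sketch below wraps `hive -e`, which runs a statement non-interactively. `hive_smoke_test` and its optional command argument are hypothetical, added only so the function can be tried with a stand-in command.

```shell
#!/usr/bin/env bash
# hive_smoke_test [COMMAND]
# Run one statement non-interactively; COMMAND defaults to hive.
hive_smoke_test() {
  local hive_cmd="${1:-hive}"
  if ! command -v "$hive_cmd" >/dev/null 2>&1; then
    echo "$hive_cmd not found on PATH" >&2
    return 127
  fi
  # A failed metastore connection surfaces here as a non-zero exit status.
  "$hive_cmd" -e 'show databases;'
}
```

Calling `hive_smoke_test` on node1 should typically list the `default` database when the metastore is reachable.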

Distribute Hive to the other hosts:

scp -r app/hive node2:/hadoop/app/hive

scp -r app/hive node3:/hadoop/app/hive
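With more hosts, the two copies can be written as a loop. This is a sketch under the assumption that the host names are the ones used above; `DRY_RUN` is a hypothetical switch that defaults to printing the commands instead of running them.

```shell
#!/usr/bin/env bash
# Hosts taken from the walkthrough; DRY_RUN=1 (the default here) only prints commands.
HOSTS="node2 node3"
DRY_RUN="${DRY_RUN:-1}"

# distribute SRC DEST: copy SRC to DEST on every host in HOSTS.
distribute() {
  local src="$1" dest="$2" host
  for host in $HOSTS; do
    if [ "$DRY_RUN" = "1" ]; then
      echo "scp -r $src $host:$dest"       # show what would be executed
    else
      scp -r "$src" "$host:$dest"
    fi
  done
}

distribute app/hive /hadoop/app/hive
```

Setting `DRY_RUN=0` before calling `distribute` performs the actual copies.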