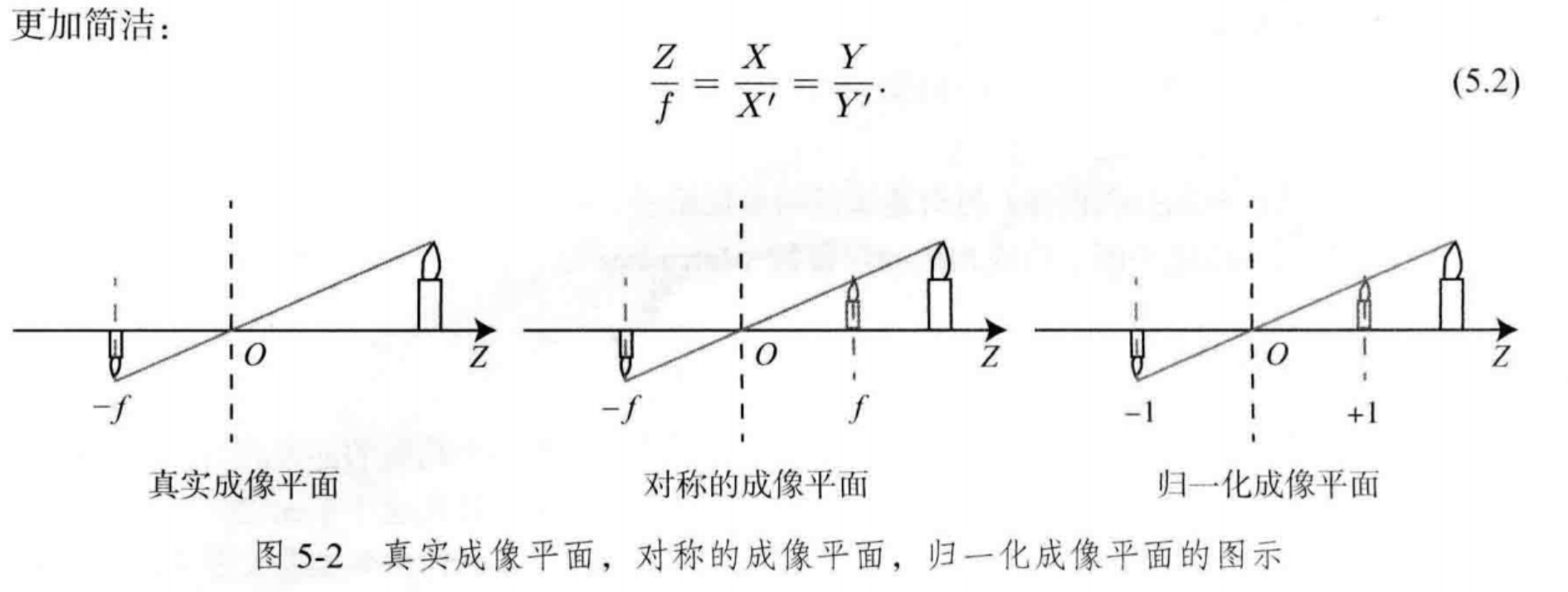

1相机成像模型

https://www.cnblogs.com/wangguchangqing/p/8126333.html

1-1 基本模型

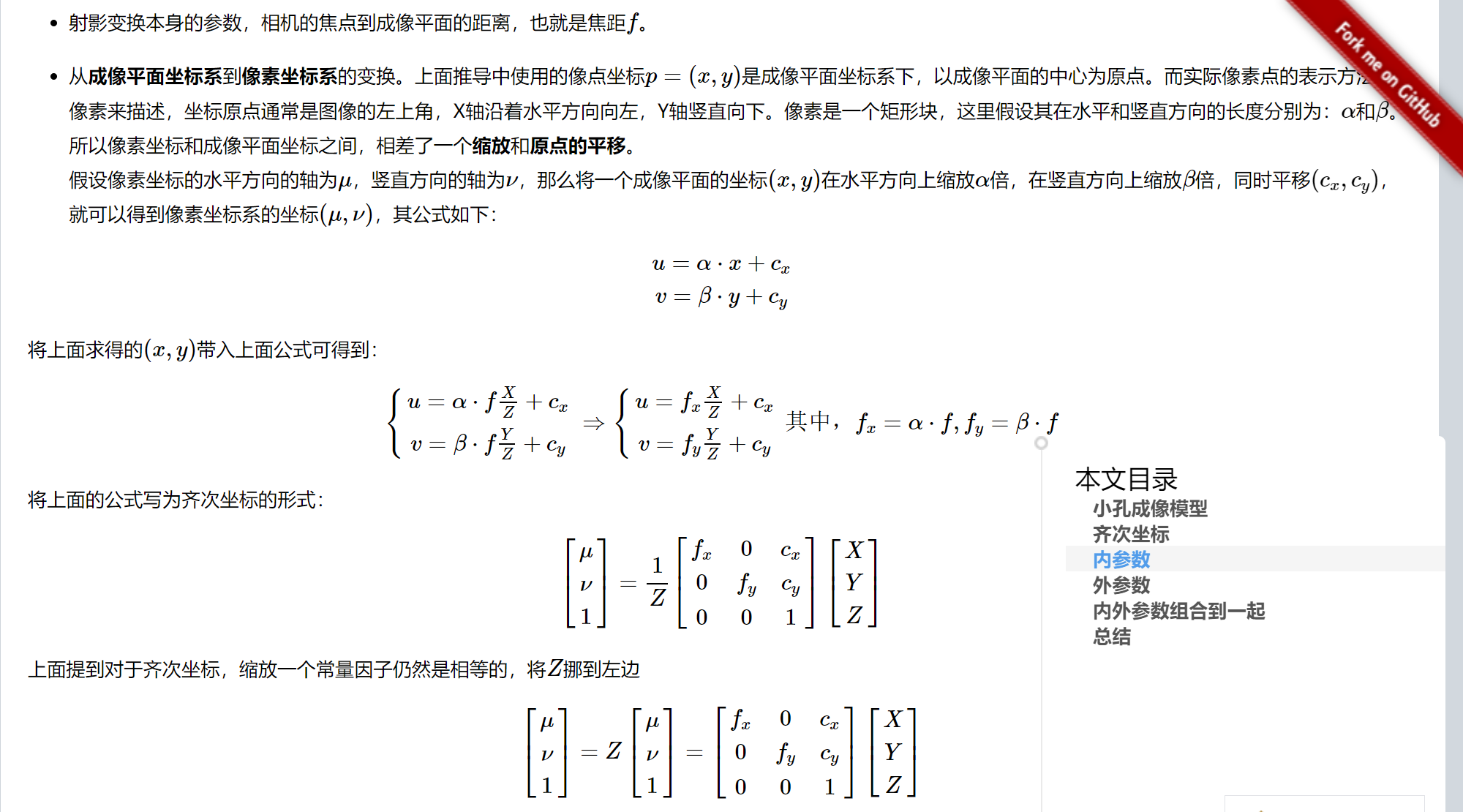

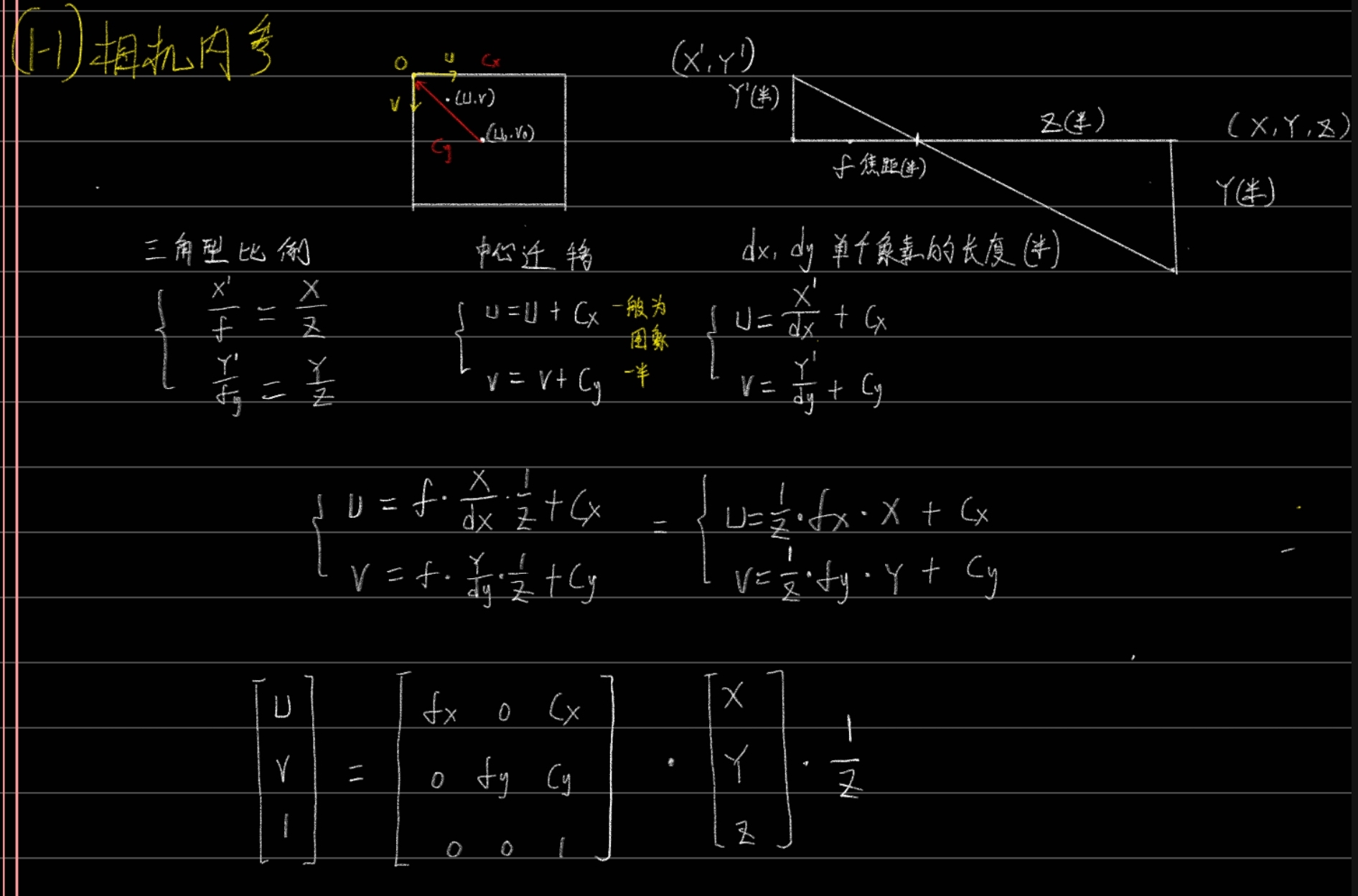

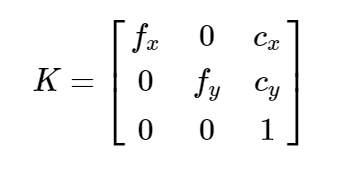

内参数矩阵(Camera Intrinsics)K,

<span id="MathJax-Element-46-Frame" class="mjx-chtml MathJax_CHTML" data-mathml="<math xmlns="http://www.w3.org/1998/Math/MathML"><msub><mi>f</mi><mi>x</mi></msub><mo>,</mo><msub><mi>f</mi><mi>y</mi></msub></math>"><span id="MJXc-Node-889" class="mjx-math"><span id="MJXc-Node-890" class="mjx-mrow"><span id="MJXc-Node-891" class="mjx-msubsup"><span class="mjx-base"><span id="MJXc-Node-892" class="mjx-mi"><span class="mjx-char MJXc-TeX-math-I">f<span class="mjx-sub"><span id="MJXc-Node-893" class="mjx-mi"><span class="mjx-char MJXc-TeX-math-I">x<span id="MJXc-Node-894" class="mjx-mo"><span class="mjx-char MJXc-TeX-main-R">,<span id="MJXc-Node-895" class="mjx-msubsup MJXc-space1"><span class="mjx-base"><span id="MJXc-Node-896" class="mjx-mi"><span class="mjx-char MJXc-TeX-math-I">f<span class="mjx-sub"><span id="MJXc-Node-897" class="mjx-mi"><span class="mjx-char MJXc-TeX-math-I">y<span class="MJX_Assistive_MathML">和相机的焦距,像素的大小有关;<span class="math inline"><span class="MathJax_Preview"><span id="MathJax-Element-47-Frame" class="mjx-chtml MathJax_CHTML" data-mathml="<math xmlns="http://www.w3.org/1998/Math/MathML"><msub><mi>c</mi><mi>x</mi></msub><mo>,</mo><msub><mi>c</mi><mi>y</mi></msub></math>"><span id="MJXc-Node-898" class="mjx-math"><span id="MJXc-Node-899" class="mjx-mrow"><span id="MJXc-Node-900" class="mjx-msubsup"><span class="mjx-base"><span id="MJXc-Node-901" class="mjx-mi"><span class="mjx-char MJXc-TeX-math-I">c<span class="mjx-sub"><span id="MJXc-Node-902" class="mjx-mi"><span class="mjx-char MJXc-TeX-math-I">x<span id="MJXc-Node-903" class="mjx-mo"><span class="mjx-char MJXc-TeX-main-R">,<span id="MJXc-Node-904" class="mjx-msubsup MJXc-space1"><span class="mjx-base"><span id="MJXc-Node-905" class="mjx-mi"><span class="mjx-char MJXc-TeX-math-I">c<span class="mjx-sub"><span id="MJXc-Node-906" class="mjx-mi"><span class="mjx-char MJXc-TeX-math-I">y<span class="MJX_Assistive_MathML">是平移的距离,和相机成像平面的大小有关。

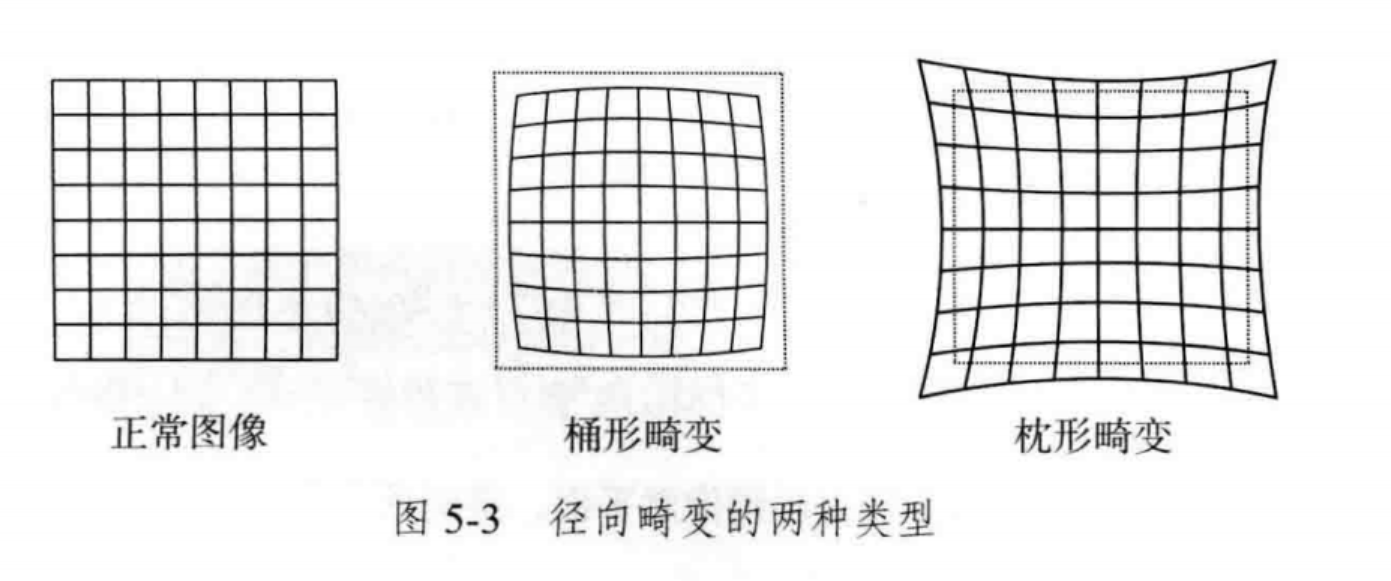

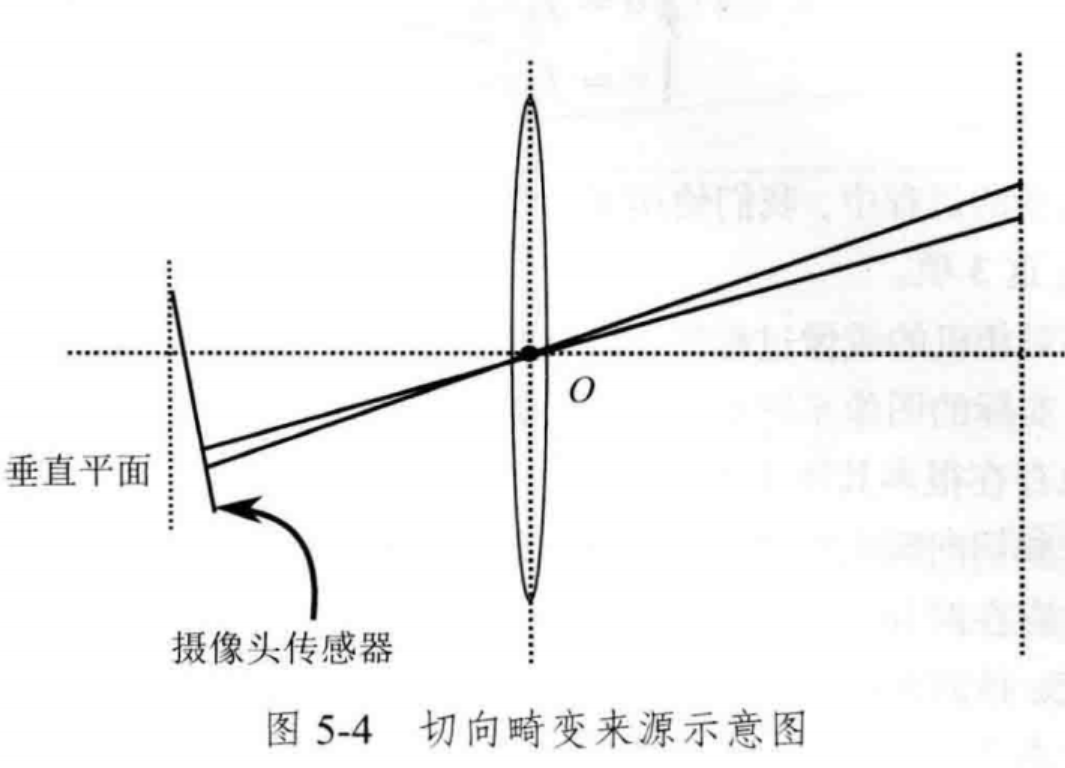

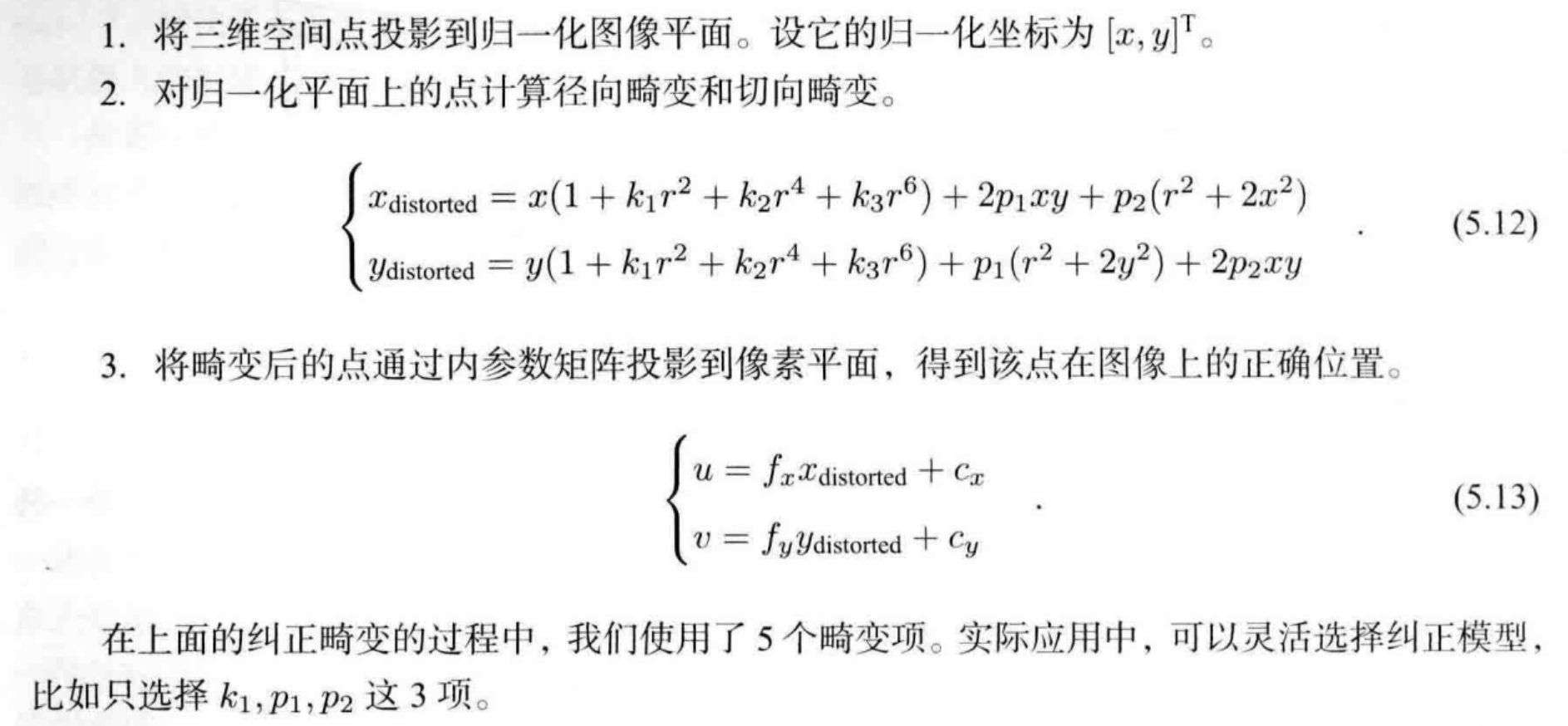

1-2 畸变矫正

1-3外参

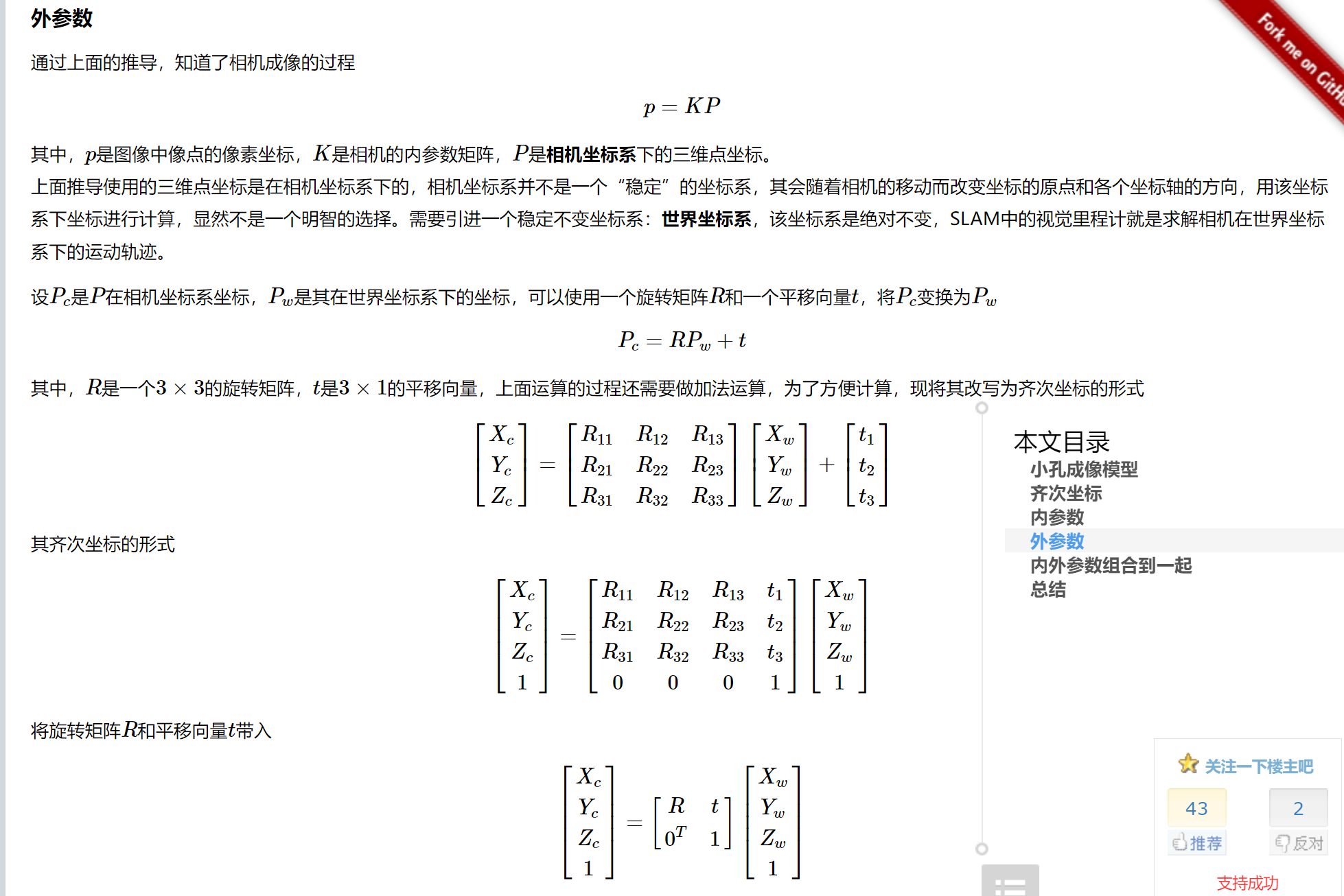

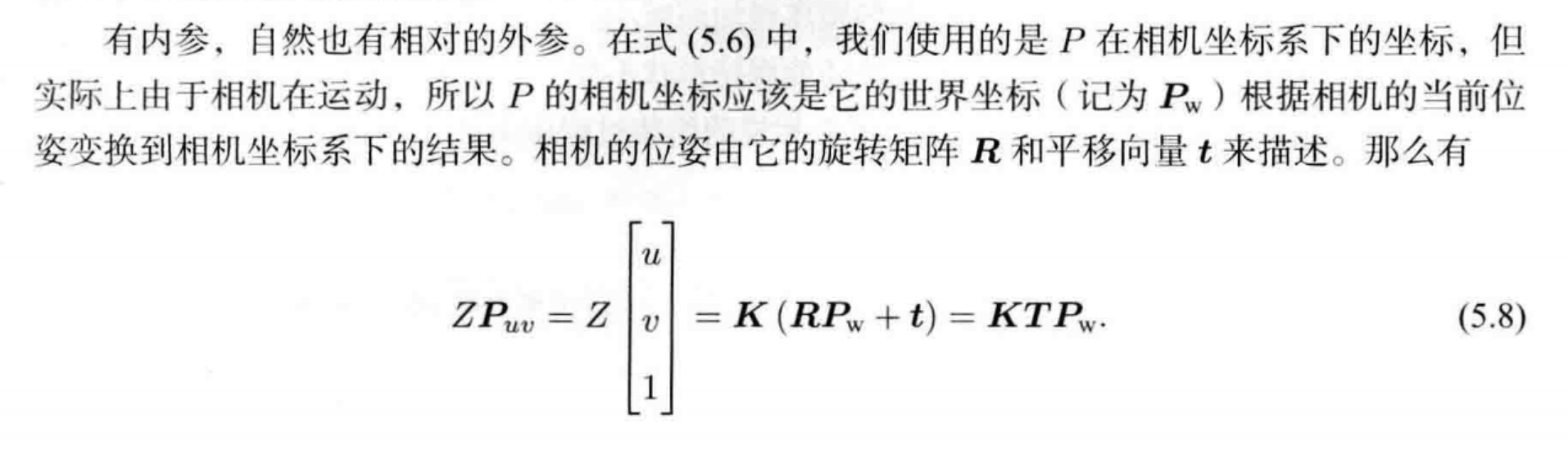

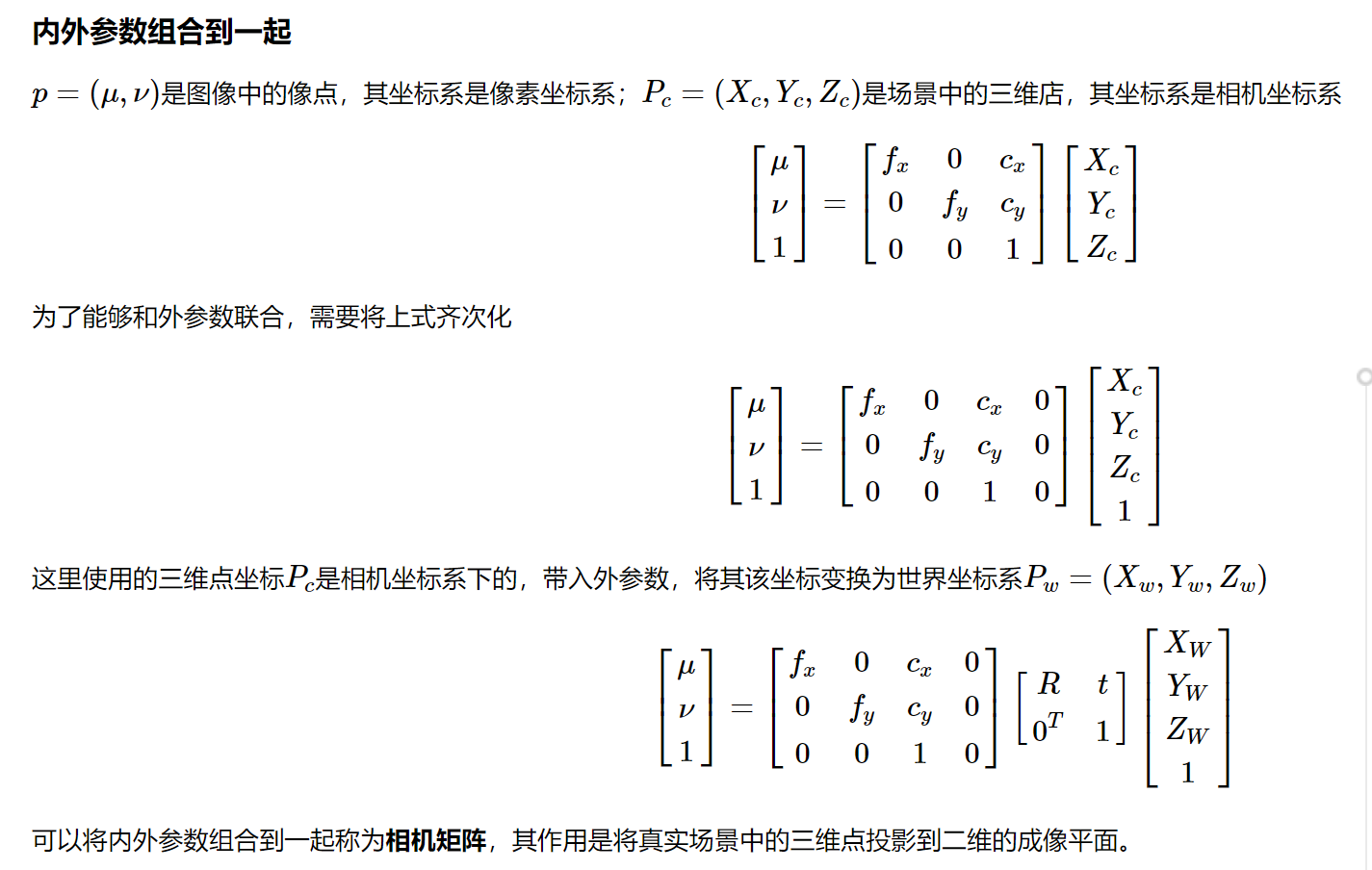

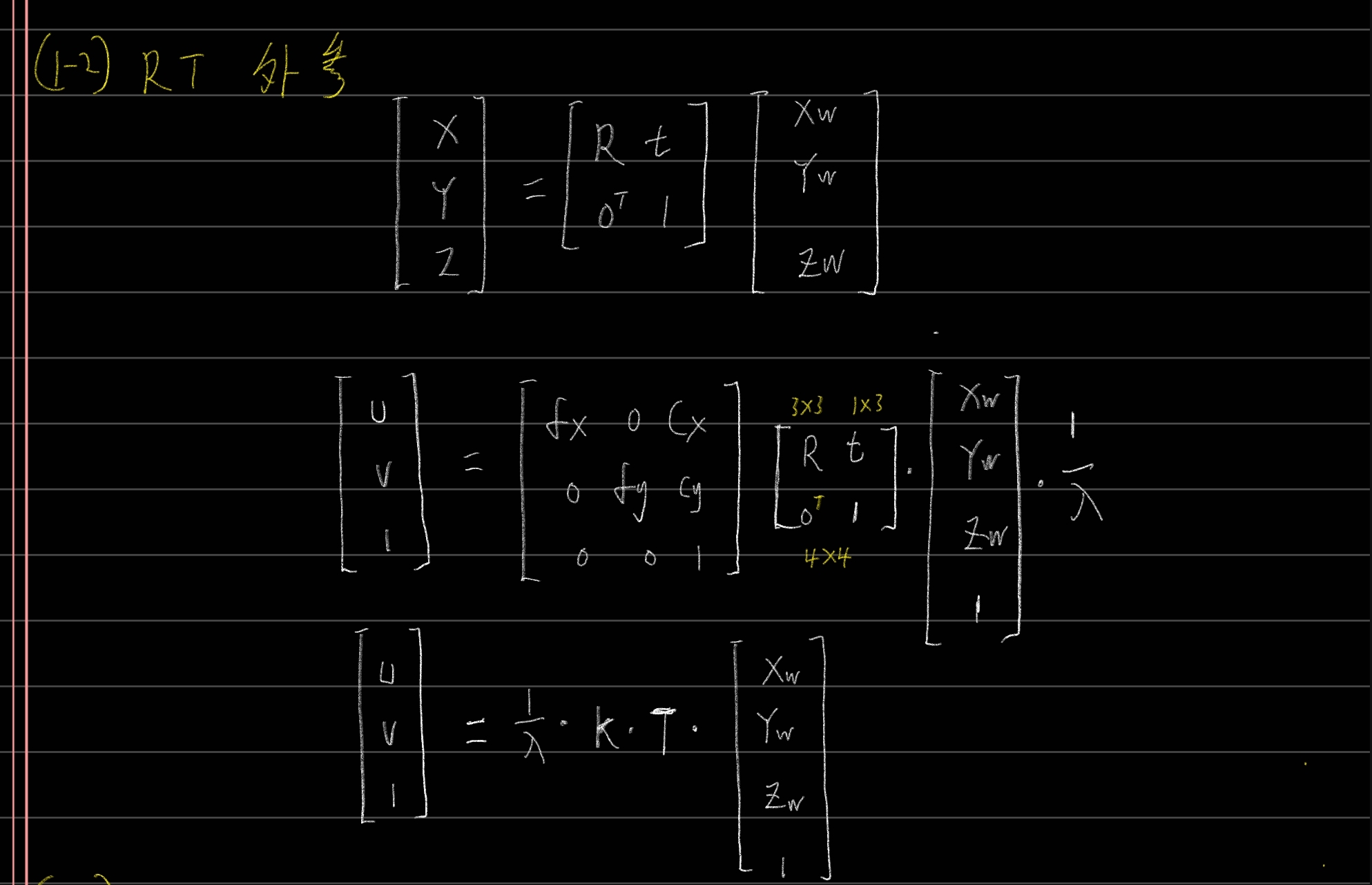

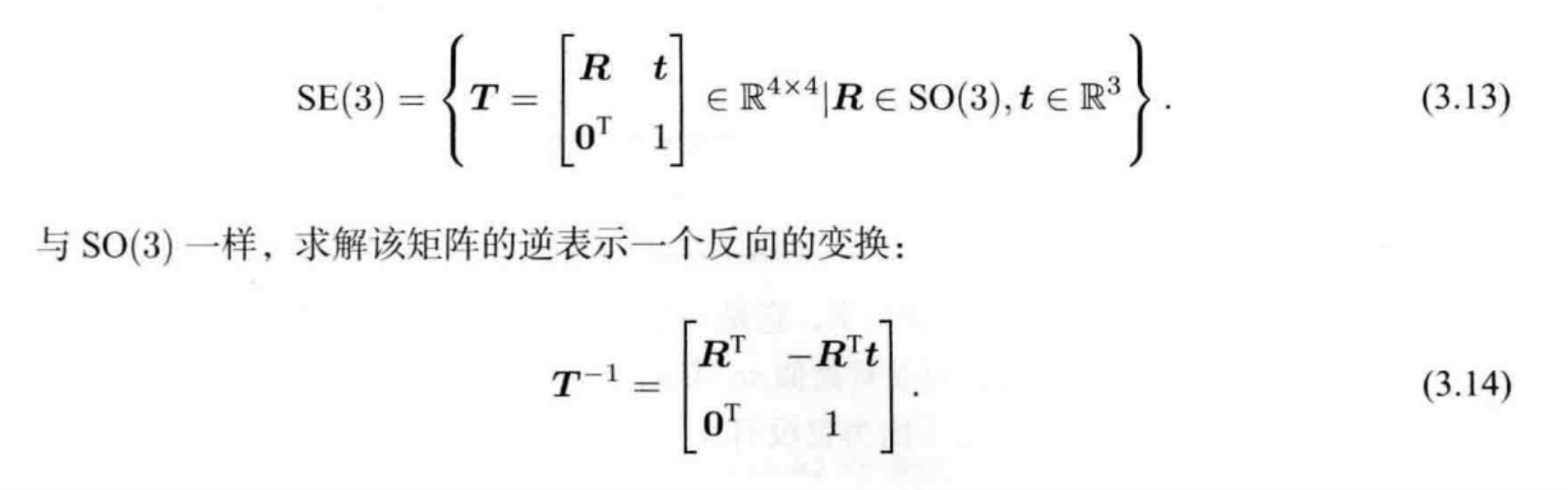

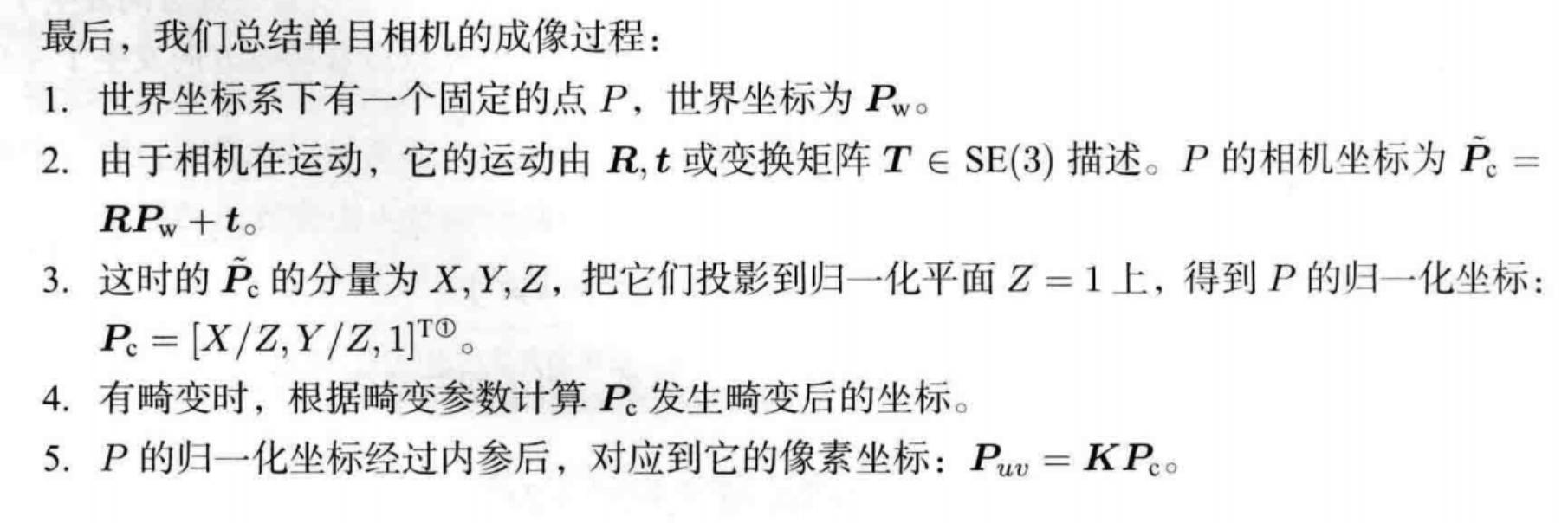

设<span class="MathJax_Preview"><span id="MathJax-Element-52-Frame" class="mjx-chtml MathJax_CHTML" data-mathml="<math xmlns="http://www.w3.org/1998/Math/MathML"><msub><mi>P</mi><mi>c</mi></msub></math>"><span id="MJXc-Node-922" class="mjx-math"><span id="MJXc-Node-923" class="mjx-mrow"><span id="MJXc-Node-924" class="mjx-msubsup"><span class="mjx-base"><span id="MJXc-Node-925" class="mjx-mi"><span class="mjx-char MJXc-TeX-math-I">P<span class="mjx-sub"><span id="MJXc-Node-926" class="mjx-mi"><span class="mjx-char MJXc-TeX-math-I">c<span class="MJX_Assistive_MathML">是<span class="math inline"><span class="MathJax_Preview"><span id="MathJax-Element-53-Frame" class="mjx-chtml MathJax_CHTML" data-mathml="<math xmlns="http://www.w3.org/1998/Math/MathML"><mi>P</mi></math>"><span id="MJXc-Node-927" class="mjx-math"><span id="MJXc-Node-928" class="mjx-mrow"><span id="MJXc-Node-929" class="mjx-mi"><span class="mjx-char MJXc-TeX-math-I">P<span class="MJX_Assistive_MathML">在相机坐标系坐标,<span class="math inline"><span class="MathJax_Preview"><span id="MathJax-Element-54-Frame" class="mjx-chtml MathJax_CHTML" data-mathml="<math xmlns="http://www.w3.org/1998/Math/MathML"><msub><mi>P</mi><mi>w</mi></msub></math>"><span id="MJXc-Node-930" class="mjx-math"><span id="MJXc-Node-931" class="mjx-mrow"><span id="MJXc-Node-932" class="mjx-msubsup"><span class="mjx-base"><span id="MJXc-Node-933" class="mjx-mi"><span class="mjx-char MJXc-TeX-math-I">P<span class="mjx-sub"><span id="MJXc-Node-934" class="mjx-mi"><span class="mjx-char MJXc-TeX-math-I">w<span class="MJX_Assistive_MathML">是其在世界坐标系下的坐标,可以使用一个旋转矩阵<span class="math inline"><span class="MathJax_Preview"><span id="MathJax-Element-55-Frame" class="mjx-chtml MathJax_CHTML" data-mathml="<math xmlns="http://www.w3.org/1998/Math/MathML"><mi>R</mi></math>"><span id="MJXc-Node-935" class="mjx-math"><span id="MJXc-Node-936" class="mjx-mrow"><span id="MJXc-Node-937" class="mjx-mi"><span class="mjx-char MJXc-TeX-math-I">R<span class="MJX_Assistive_MathML">和一个平移向量<span class="math inline"><span class="MathJax_Preview"><span id="MathJax-Element-56-Frame" class="mjx-chtml MathJax_CHTML" data-mathml="<math xmlns="http://www.w3.org/1998/Math/MathML"><mi>t</mi></math>"><span id="MJXc-Node-938" class="mjx-math"><span id="MJXc-Node-939" class="mjx-mrow"><span id="MJXc-Node-940" class="mjx-mi"><span class="mjx-char MJXc-TeX-math-I">t<span class="MJX_Assistive_MathML">,将<span class="math inline"><span class="MathJax_Preview"><span id="MathJax-Element-57-Frame" class="mjx-chtml MathJax_CHTML" data-mathml="<math xmlns="http://www.w3.org/1998/Math/MathML"><msub><mi>P</mi><mi>c</mi></msub></math>"><span id="MJXc-Node-941" class="mjx-math"><span id="MJXc-Node-942" class="mjx-mrow"><span id="MJXc-Node-943" class="mjx-msubsup"><span class="mjx-base"><span id="MJXc-Node-944" class="mjx-mi"><span class="mjx-char MJXc-TeX-math-I">P<span class="mjx-sub"><span id="MJXc-Node-945" class="mjx-mi"><span class="mjx-char MJXc-TeX-math-I">c<span class="MJX_Assistive_MathML">变换为<span class="math inline"><span class="MathJax_Preview"><span id="MathJax-Element-58-Frame" class="mjx-chtml MathJax_CHTML" data-mathml="<math xmlns="http://www.w3.org/1998/Math/MathML"><msub><mi>P</mi><mi>w</mi></msub></math>"><span id="MJXc-Node-946" class="mjx-math"><span id="MJXc-Node-947" class="mjx-mrow"><span id="MJXc-Node-948" class="mjx-msubsup"><span class="mjx-base"><span id="MJXc-Node-949" class="mjx-mi"><span class="mjx-char MJXc-TeX-math-I">P<span class="mjx-sub"><span id="MJXc-Node-950" class="mjx-mi"><span class="mjx-char MJXc-TeX-math-I">w

https://www.cnblogs.com/wangguchangqing/p/8126333.html

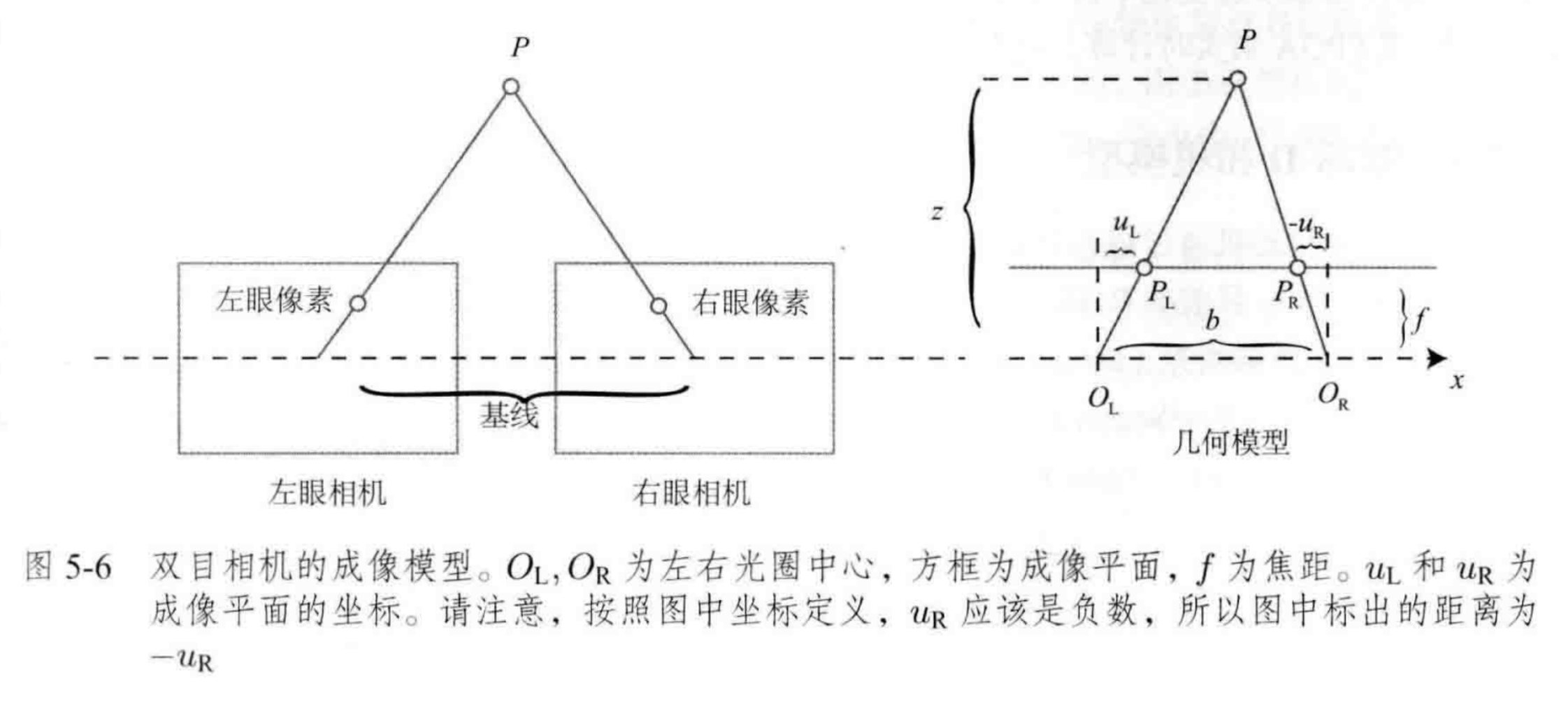

1-4 双目模型

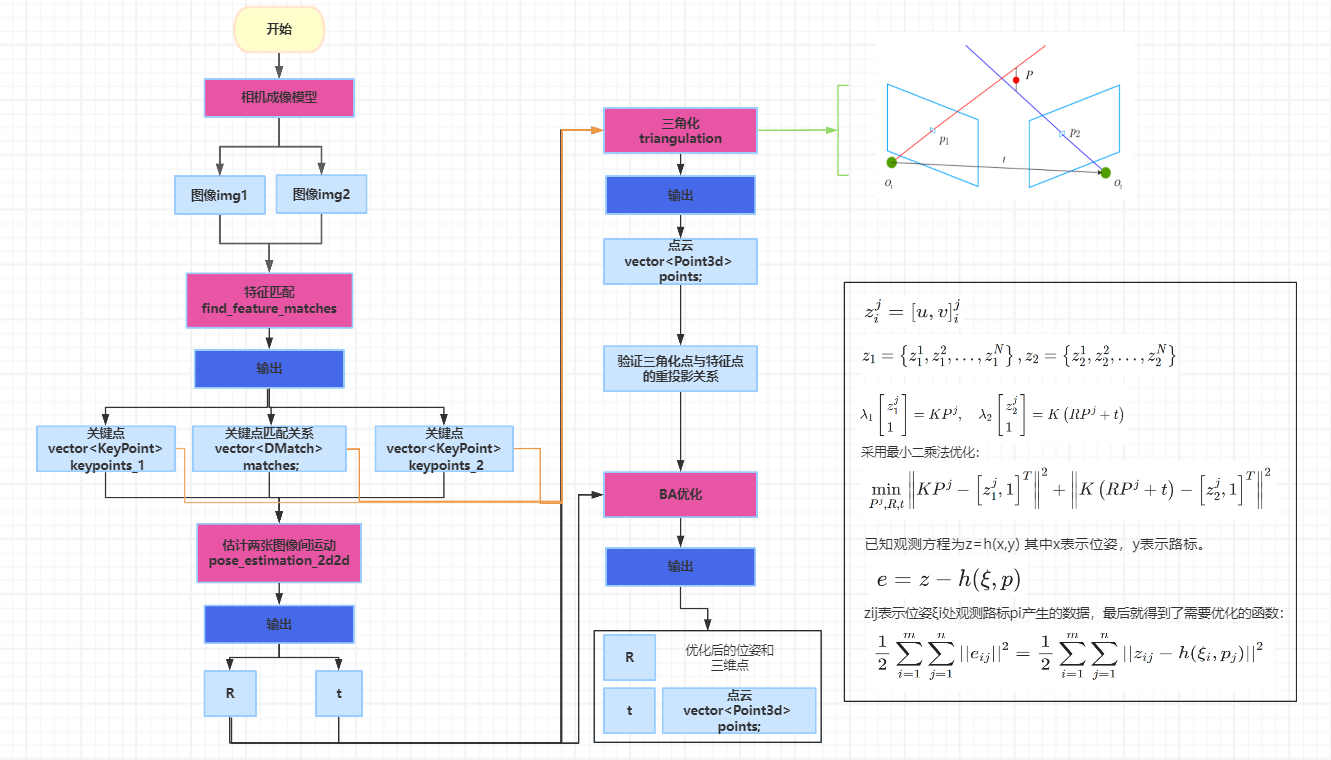

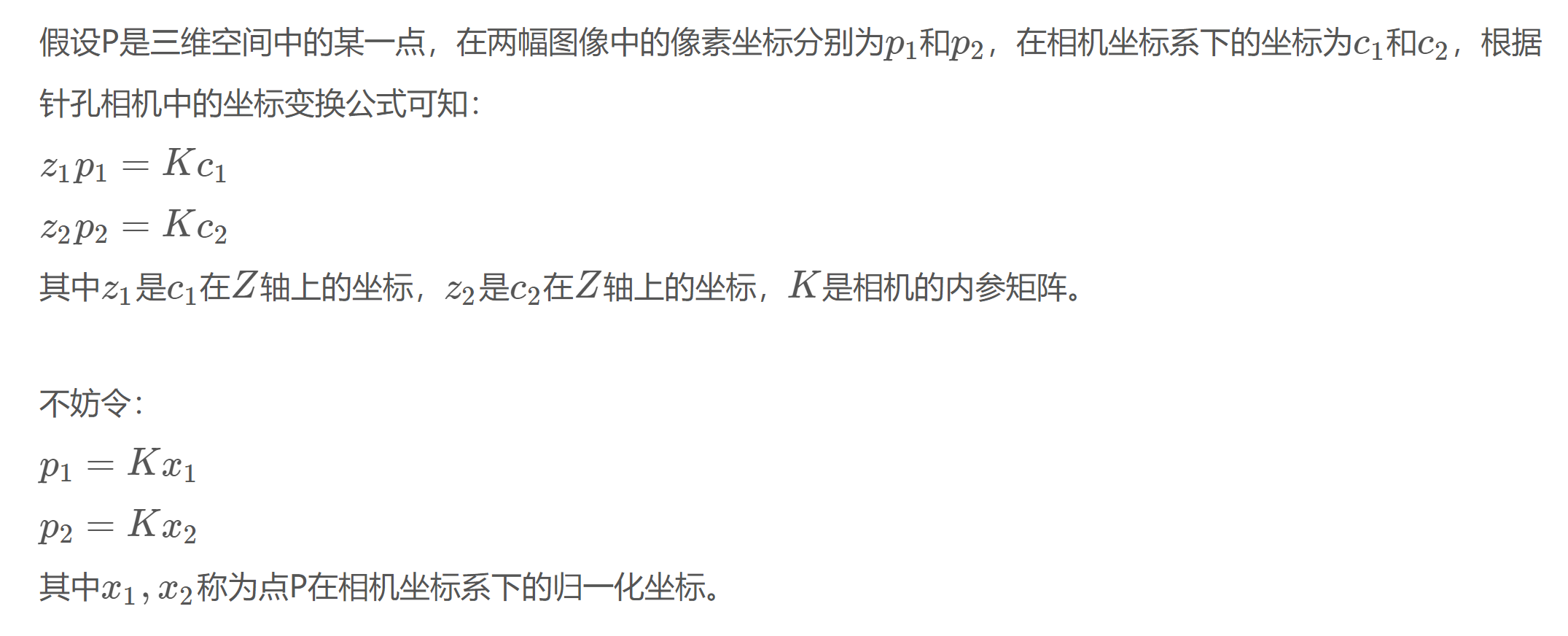

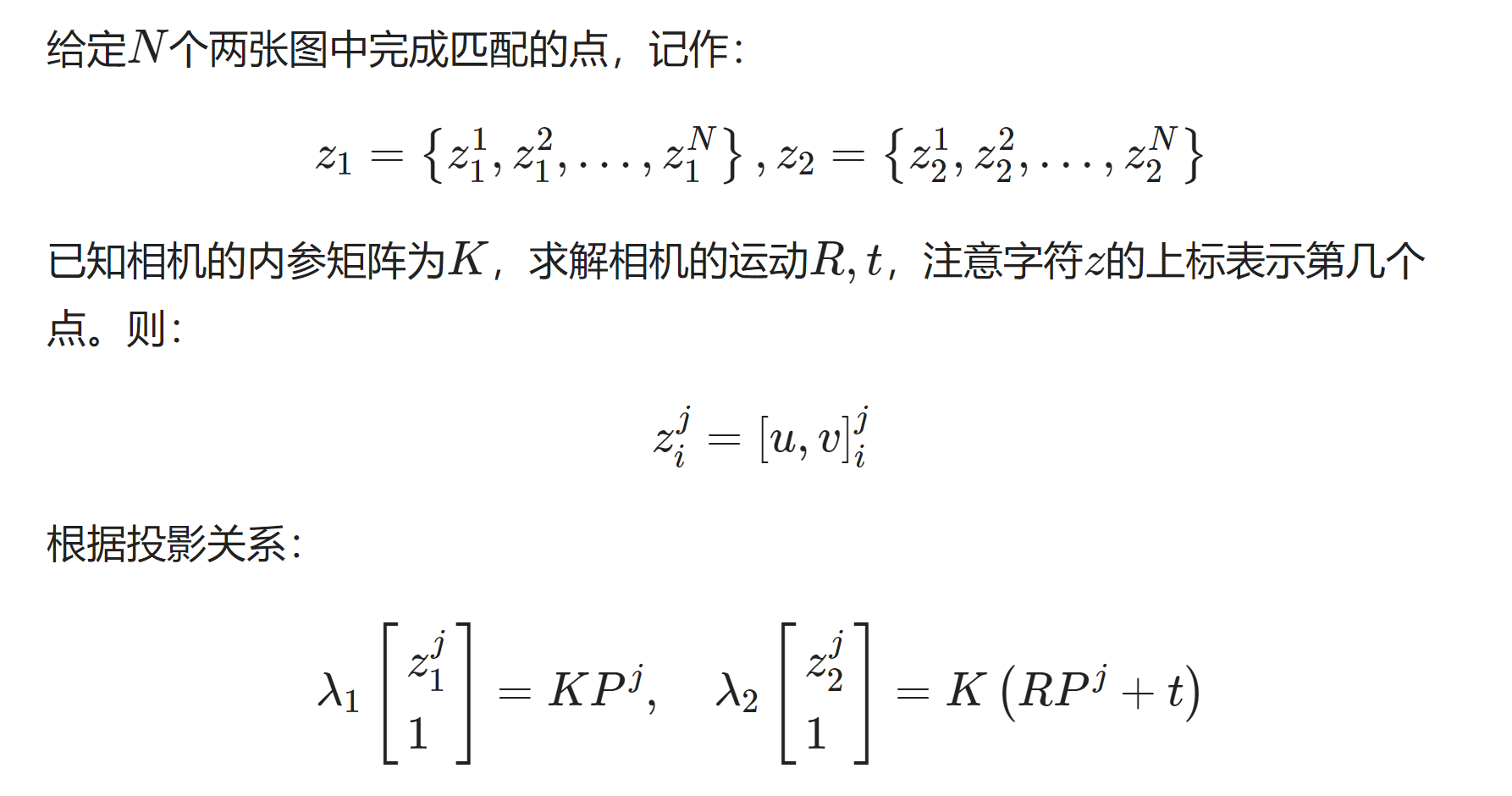

2 单目双图SVD分解求运动,E和F矩阵

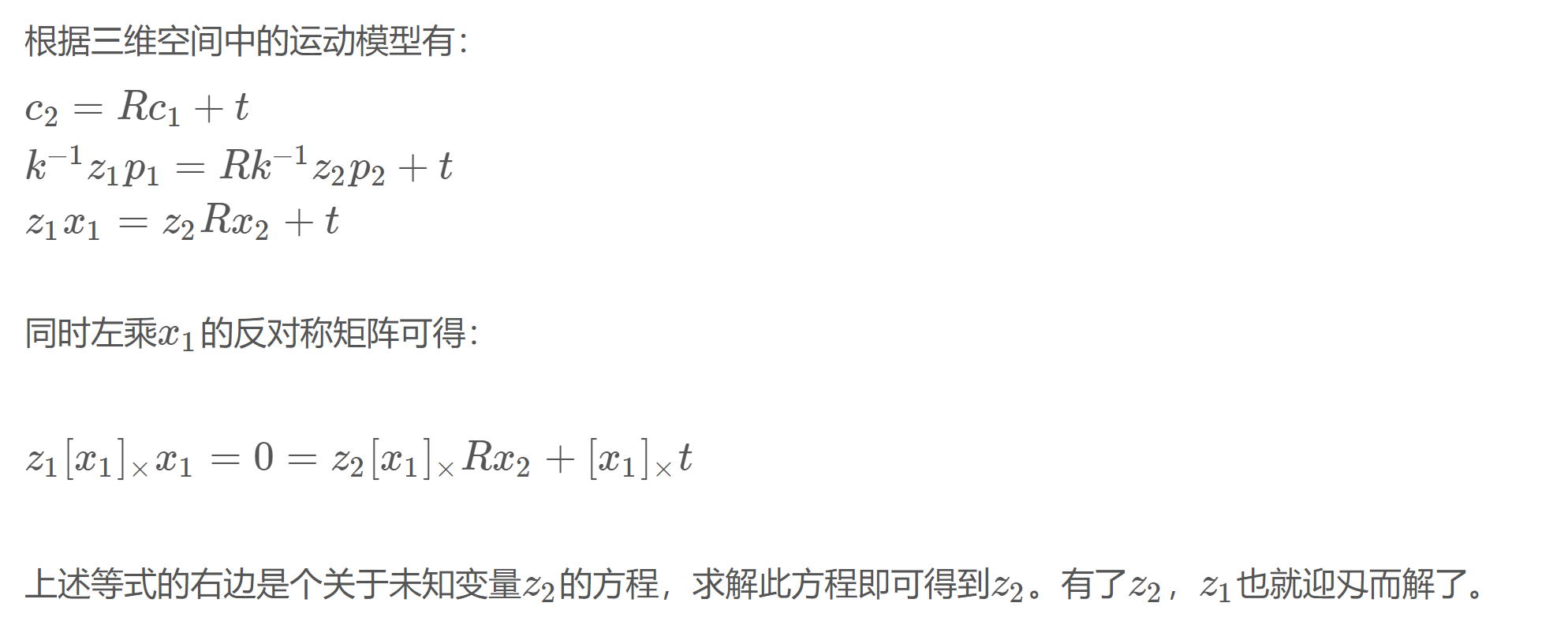

3 三角化

3-1 原理

https://zhehangt.github.io/2017/03/06/SLAM/Basic/Triangularization/

但由于误差的存在,上述方程通常不会严格成立,所以更常见的做法是求最小二乘解而不是零解。

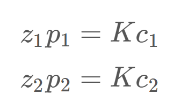

最后根据

求解出三维点坐标 c1 c2=R*c1+t

3-2 完整代码

https://cloud.tencent.com/developer/article/1526502

主函数

int main ( int argc, char** argv )

{

if ( argc != 3 )

{

cout<<"usage: triangulation img1 img2"<<endl;

return 1;

}

//-- 读取图像

Mat img_1 = imread ( argv[1], CV_LOAD_IMAGE_COLOR );

Mat img_2 = imread ( argv[2], CV_LOAD_IMAGE_COLOR );

vector<KeyPoint> keypoints_1, keypoints_2;

vector<DMatch> matches;

find_feature_matches ( img_1, img_2, keypoints_1, keypoints_2, matches );

cout<<"一共找到了"<<matches.size() <<"组匹配点"<<endl;

//-- 估计两张图像间运动

Mat R,t;

pose_estimation_2d2d ( keypoints_1, keypoints_2, matches, R, t );

//-- 三角化

vector<Point3d> points;

triangulation( keypoints_1, keypoints_2, matches, R, t, points );

//-- 验证三角化点与特征点的重投影关系

Mat K = ( Mat_<double> ( 3,3 ) << 520.9, 0, 325.1, 0, 521.0, 249.7, 0, 0, 1 );

for ( int i=0; i<matches.size(); i++ )

{

Point2d pt1_cam = pixel2cam( keypoints_1[ matches[i].queryIdx ].pt, K );

Point2d pt1_cam_3d(

points[i].x/points[i].z,

points[i].y/points[i].z

);

cout<<"point in the first camera frame: "<<pt1_cam<<endl;

cout<<"point projected from 3D "<<pt1_cam_3d<<", d="<<points[i].z<<endl;

// 第二个图

Point2f pt2_cam = pixel2cam( keypoints_2[ matches[i].trainIdx ].pt, K );

Mat pt2_trans = R*( Mat_<double>(3,1) << points[i].x, points[i].y, points[i].z ) + t;

pt2_trans /= pt2_trans.at<double>(2,0);

cout<<"point in the second camera frame: "<<pt2_cam<<endl;

cout<<"point reprojected from second frame: "<<pt2_trans.t()<<endl;

cout<<endl;

}

return 0;

}

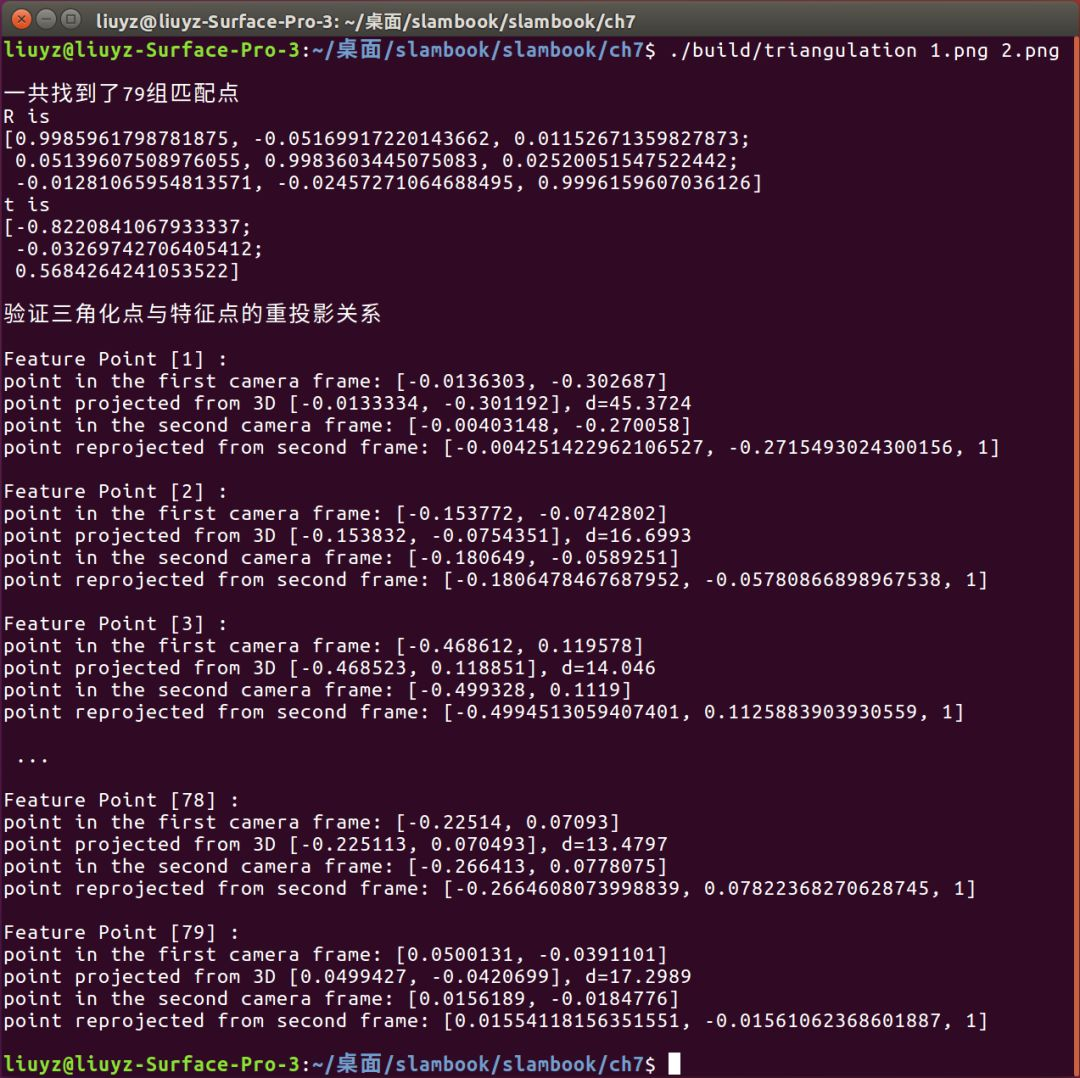

前后两帧图像对应两种不同的验证方式:

- 前一帧图像,先将特征点的2d坐标投影到归一化平面坐标,再将三角化得到的3d坐标除以其深度信息来计算其归一化坐标(这里可以看出来三角化处理得到的3d坐标是位于前一帧相机坐标系下的),并进行对比;

- 后一帧图像,同样是先将特征点的2d坐标投影到归一化平面坐标,再将前一帧相机坐标系下的3d点进行R、t位姿变换,计算出特征点在当前帧相机坐标系下的坐标,再除以其深度值来计算归一化坐标,进而进行比较。(79对特征点对共比较了79次,在结果展示时我会截取一部分。)

依赖函数

void find_feature_matches (

const Mat& img_1, const Mat& img_2,

std::vector<KeyPoint>& keypoints_1,

std::vector<KeyPoint>& keypoints_2,

std::vector< DMatch >& matches );

void pose_estimation_2d2d (

const std::vector<KeyPoint>& keypoints_1,

const std::vector<KeyPoint>& keypoints_2,

const std::vector< DMatch >& matches,

Mat& R, Mat& t );

void triangulation (

const vector<KeyPoint>& keypoint_1,

const vector<KeyPoint>& keypoint_2,

const std::vector< DMatch >& matches,

const Mat& R, const Mat& t,

vector<Point3d>& points

);

// 像素坐标转相机归一化坐标

Point2f pixel2cam( const Point2d& p, const Mat& K );

其中,find_feature_matches这个函数已经是老生常谈,功能是用来求取两帧图像中的特征点并进行特征点匹配;

pose_estimation_2d2d函数用来以2d-2d方式求取相机位姿变化(因为只有不知道特征点的3d信息时才需要三角测量进行深度值的计算);

pixel2cam函数用来将特征点的像素坐标转换成归一化平面坐标。

这里,只有triangulation函数是一个新面孔,在这里阅读以下形参信息,可以预测其功能是通过已知的特征点2d坐标与特征点配对信息,以及刚求解得到的相机位姿变化,来求取特征点的3d坐标。这里3d坐标是在前一帧还是当前帧的相机坐标系下的,咱们过会读代码可以做出确定。

函数triangulation的定义

void triangulation (

const vector< KeyPoint >& keypoint_1,

const vector< KeyPoint >& keypoint_2,

const std::vector< DMatch >& matches,

const Mat& R, const Mat& t,

vector< Point3d >& points )

{

Mat T1 = (Mat_<float> (3,4) <<

1,0,0,0,

0,1,0,0,

0,0,1,0);

Mat T2 = (Mat_<float> (3,4) <<

R.at<double>(0,0), R.at<double>(0,1), R.at<double>(0,2), t.at<double>(0,0),

R.at<double>(1,0), R.at<double>(1,1), R.at<double>(1,2), t.at<double>(1,0),

R.at<double>(2,0), R.at<double>(2,1), R.at<double>(2,2), t.at<double>(2,0)

);

Mat K = ( Mat_<double> ( 3,3 ) << 520.9, 0, 325.1, 0, 521.0, 249.7, 0, 0, 1 );

vector<Point2f> pts_1, pts_2;

for ( DMatch m:matches )

{

// 将像素坐标转换至相机坐标

pts_1.push_back ( pixel2cam( keypoint_1[m.queryIdx].pt, K) );

pts_2.push_back ( pixel2cam( keypoint_2[m.trainIdx].pt, K) );

}

Mat pts_4d;

cv::triangulatePoints( T1, T2, pts_1, pts_2, pts_4d );

// 转换成非齐次坐标

for ( int i=0; i<pts_4d.cols; i++ )

{

Mat x = pts_4d.col(i);

x /= x.at<float>(3,0); // 归一化

Point3d p (

x.at<float>(0,0),

x.at<float>(1,0),

x.at<float>(2,0)

);

points.push_back( p );

}

}

首先,将平移矩阵t放到旋转矩阵R右侧,增广成3×4的变换矩阵,这里这个变换矩阵更具体来讲为projection matrix(投影矩阵);进而使用pixel2cam将两组2d特征点的像素坐标转化成归一化平面坐标;最后,调用OpenCV提供的三角化处理函数triangulatePoints:

Mat pts_4d; cv::triangulatePoints( T1, T2, pts_1, pts_2, pts_4d );

这里提供一下OpenCV中的函数声明:

void triangulatePoints( InputArray projMatr1, InputArray projMatr2, InputArray projPoints1, InputArray projPoints2, OutputArray points4D );

其中,由于以前一帧为参考,则前一帧到前一帧本身的投影矩阵projMatr1为3×3的单位阵与三维零列向量构成的增广阵;当前帧到参考帧(前一帧)的投影矩阵projMatr2为R和t的增广。该函数无返回值,但会修改并存储一个4×n的Mat类矩阵points4d(本函数中为pts_4d)。

for ( int i=0; i<pts_4d.cols; i++ )

{

Mat x = pts_4d.col(i);

x /= x.at<float>(3,0); // 归一化

Point3d p (

x.at<float>(0,0),

x.at<float>(1,0),

x.at<float>(2,0)

);

points.push_back( p );

}

最后,通过循环,逐列提取pts_4d中每个特征点经过三角化计算得到的齐次坐标,由于其具有尺度不变性,需要通过归一化处理,最后取其前三维并压入存储特征点3d坐标的容器points。我们来看一下程序运行结果(为了简洁小绿剔除了一些输出信息):

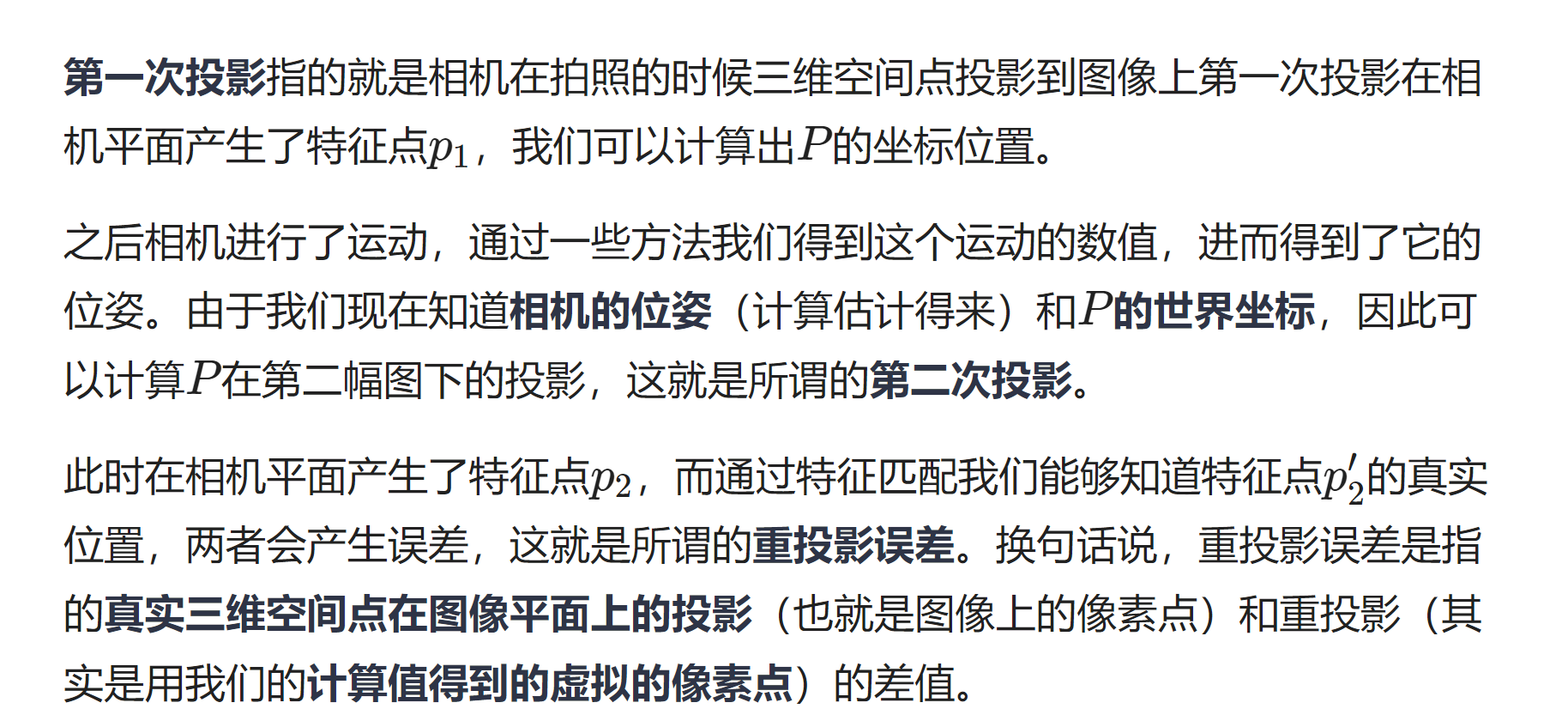

4 BA优化

https://jiangren.work/2019/08/17/%E8%AF%A6%E8%A7%A3BA/

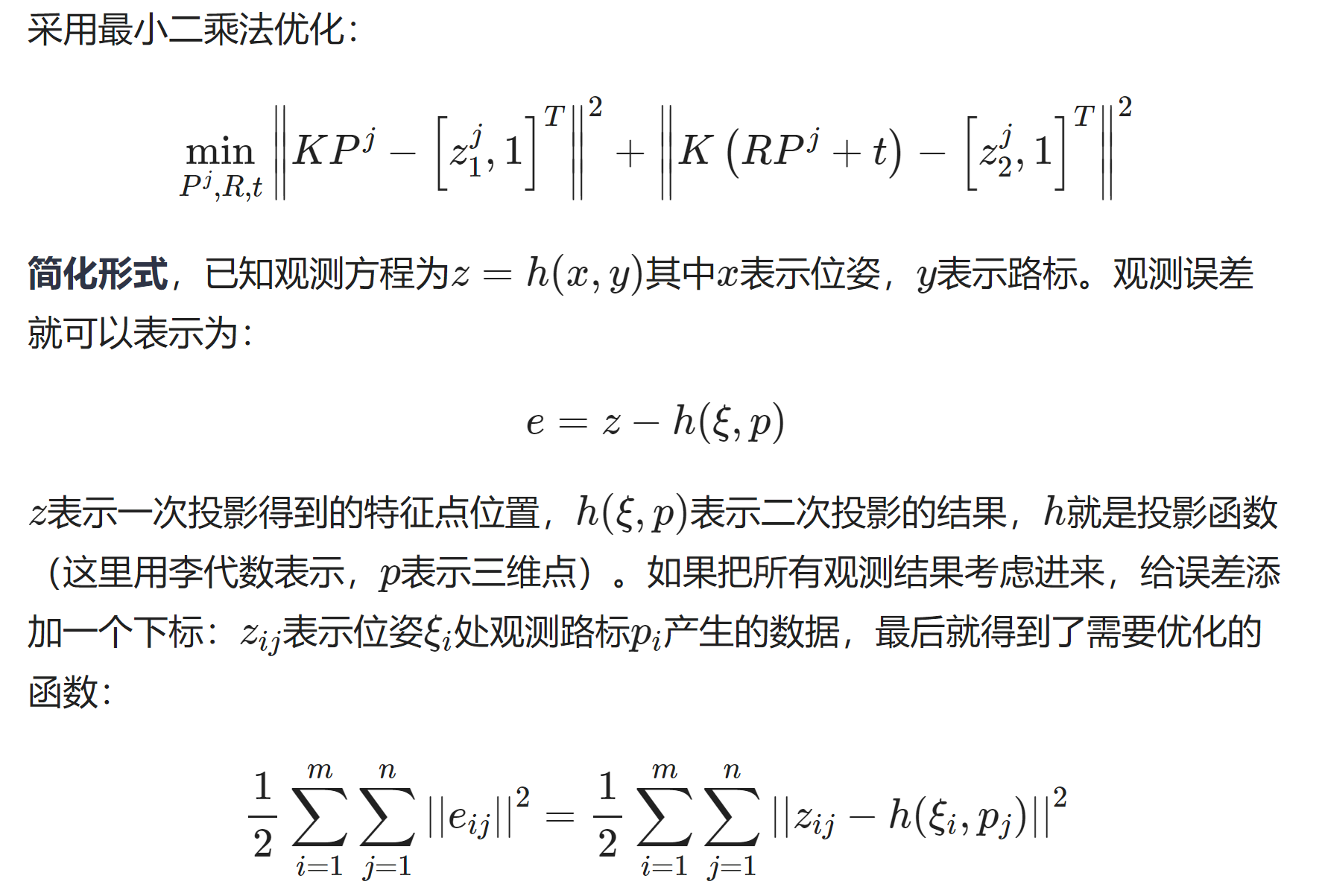

2. 求解BA

2.1 迭代求解