论文信息

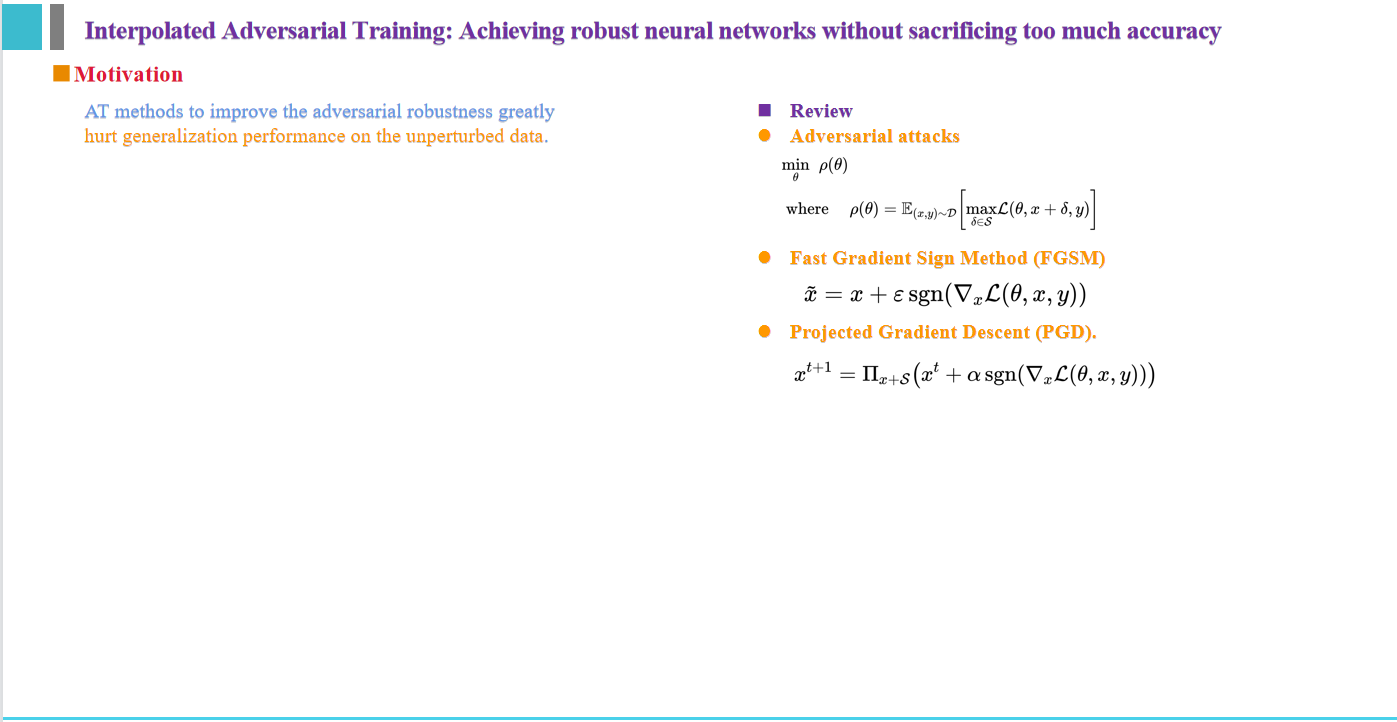

论文标题:Interpolated Adversarial Training: Achieving robust neural networks without sacrificing too much accuracy

论文作者:Alex LambVikas VermaKenji KawaguchiAlexander MatyaskoSavya KhoslaJuho KannalaYoshua Bengio

论文来源:2022 Neural Networks

论文地址:download

论文代码:download

视屏讲解:click

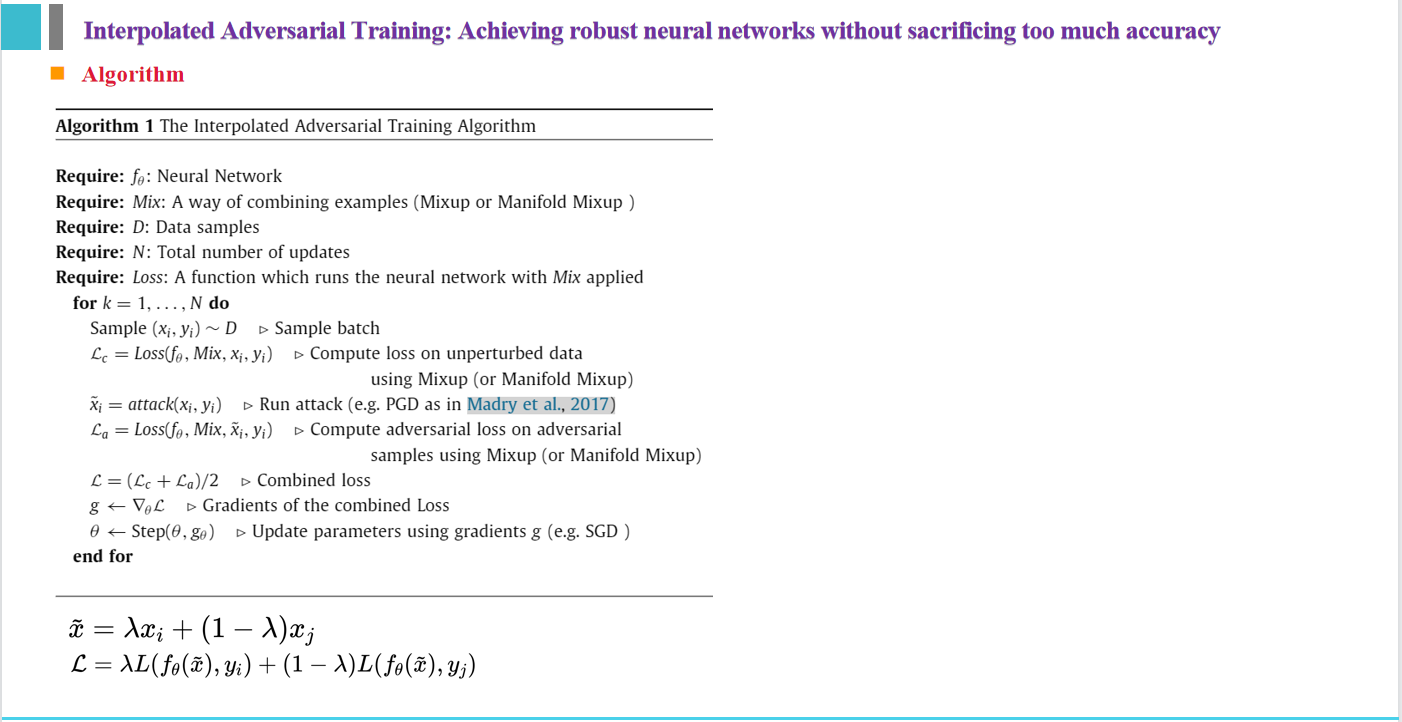

方法

代码:

import torch import torch.nn as nn import torch.optim as optim import torch.nn.functional as F import torch.backends.cudnn as cudnn import torchvision import torchvision.transforms as transforms import os import numpy as np from models import * learning_rate = 0.1 epsilon = 0.0314 k = 7 alpha = 0.00784 file_name = 'interpolated_adversarial_training' mixup_alpha = 1.0 device = 'cuda:2' transform_train = transforms.Compose([ transforms.RandomCrop(32, padding=4), transforms.RandomHorizontalFlip(), transforms.ToTensor(), ]) transform_test = transforms.Compose([ transforms.ToTensor(), ]) train_dataset = torchvision.datasets.CIFAR10(root='./data', train=True, download=True, transform=transform_train) train_loader = torch.utils.data.DataLoader(train_dataset, batch_size=128, shuffle=True, num_workers=4) def mixup_data(x, y): lam = np.random.beta(mixup_alpha, mixup_alpha) batch_size = x.size()[0] index = torch.randperm(batch_size).cuda() mixed_x = lam * x + (1 - lam) * x[index, :] y_a, y_b = y, y[index] return mixed_x, y_a, y_b, lam def mixup_criterion(criterion, pred, y_a, y_b, lam): return lam * criterion(pred, y_a) + (1 - lam) * criterion(pred, y_b) class LinfPGDAttack(object): def __init__(self, model): self.model = model def perturb(self, x_natural, y): x = x_natural.detach() x = x + torch.zeros_like(x).uniform_(-epsilon, epsilon) for i in range(k): x.requires_grad_() with torch.enable_grad(): logits = self.model(x) loss = F.cross_entropy(logits, y) grad = torch.autograd.grad(loss, [x])[0] x = x.detach() + alpha * torch.sign(grad.detach()) x = torch.min(torch.max(x, x_natural - epsilon), x_natural + epsilon) x = torch.clamp(x, 0, 1) return x net = ResNet18() net = net.to(device) adversary = LinfPGDAttack(net) criterion = nn.CrossEntropyLoss() optimizer = optim.SGD(net.parameters(), lr=learning_rate, momentum=0.9, weight_decay=0.0002) def train(epoch): net.train() benign_loss = 0 adv_loss = 0 for batch_idx, (inputs, targets) in enumerate(train_loader): inputs, targets = inputs.to(device), targets.to(device) optimizer.zero_grad() mix_input, targets_a, targets_b, lambdas = mixup_data(inputs, targets) benign_outputs = net(mix_input) loss1 = mixup_criterion(criterion, benign_outputs, targets_a, targets_b, lambdas) adv = adversary.perturb(inputs, targets) adv_inputs, adv_targets_a, adv_targets_b, adv_lam = mixup_data(adv, targets) adv_outputs = net(adv_inputs) loss2 = mixup_criterion(criterion, adv_outputs, adv_targets_a, adv_targets_b, adv_lam) loss = (loss1 + loss2) / 2 loss.backward() optimizer.step() for epoch in range(0, 200): train(epoch)

- Interpolated Adversarial sacrificing Achieving Traininginterpolated adversarial sacrificing achieving training zero achieving论文 sacrificing interpolated achieving stability-plasticity plasticity achieving auxiliary adversarial contrastive-adversarial class-imbalanced unsupervised adversarial adaptation contrastive-adversarial contrastive adversarial