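This post classifies the 2,708 papers of the Cora citation dataset into 7 classes twice: once with fully connected (linear) layers and once with GCN layers. In the comparison, the fully connected model reaches a test accuracy of 59% and the GCN model 81%.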

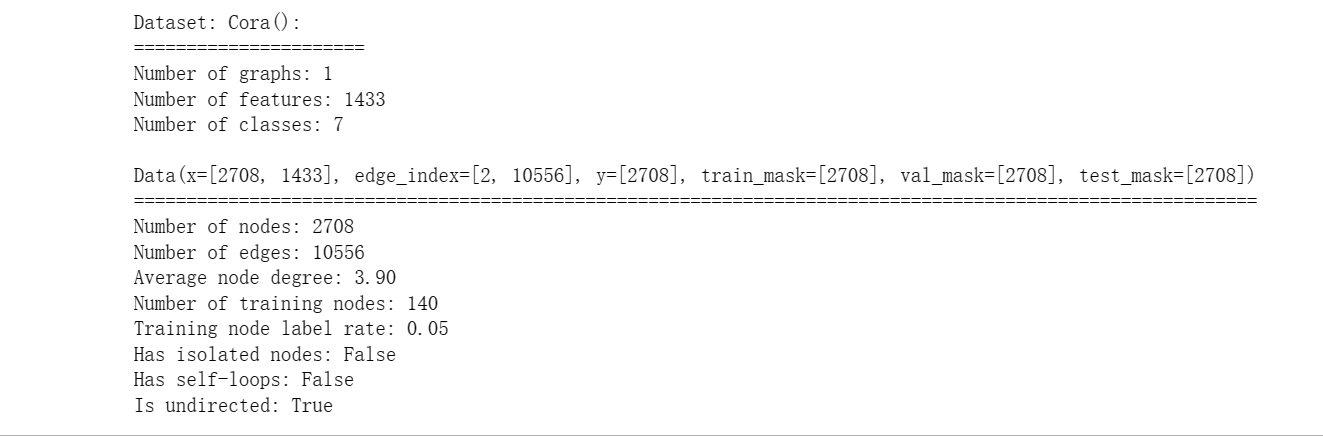

(1) Data preprocessing

```python
from torch_geometric.datasets import Planetoid  # downloads the dataset on first use
from torch_geometric.transforms import NormalizeFeatures

# transform: row-normalize the node features as a preprocessing step
dataset = Planetoid(root='data/Planetoid', name='Cora', transform=NormalizeFeatures())

print()
print(f'Dataset: {dataset}:')
print('======================')
print(f'Number of graphs: {len(dataset)}')            # a single large graph
print(f'Number of features: {dataset.num_features}')  # each paper is a 1433-dimensional feature vector
print(f'Number of classes: {dataset.num_classes}')    # 7-class classification

data = dataset[0]  # Get the first (and only) graph object.

print()
print(data)
# Data(x=[2708, 1433], edge_index=[2, 10556], y=[2708], train_mask=[2708], val_mask=[2708], test_mask=[2708])
# x: 2708 papers, each a 1433-dimensional vector
# edge_index: 2 rows (source node, target node) by 10556 edges;
#             each undirected citation link is stored once in each direction
print('===========================================================================================================')

# Gather some statistics about the graph.
print(f'Number of nodes: {data.num_nodes}')
print(f'Number of edges: {data.num_edges}')
print(f'Average node degree: {data.num_edges / data.num_nodes:.2f}')
print(f'Number of training nodes: {int(data.train_mask.sum())}')
print(f'Training node label rate: {int(data.train_mask.sum()) / data.num_nodes:.2f}')
print(f'Has isolated nodes: {data.has_isolated_nodes()}')
print(f'Has self-loops: {data.has_self_loops()}')
print(f'Is undirected: {data.is_undirected()}')
```

Output:
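To make the `edge_index` layout and the feature normalization concrete, here is a small sketch on a hypothetical 3-node graph in plain PyTorch (no PyG needed). It assumes that `NormalizeFeatures` divides each feature row by its sum, which is its effect on non-negative features such as Cora's; all tensors are made up for illustration.

```python
import torch

# Hypothetical toy graph: 3 nodes with undirected edges 0-1 and 1-2.
# edge_index has shape [2, num_edges]: row 0 = source nodes, row 1 = target nodes.
# Each undirected edge appears once per direction, as in Cora's edge_index.
edge_index = torch.tensor([[0, 1, 1, 2],
                           [1, 0, 2, 1]])
num_nodes = 3
num_edges = edge_index.size(1)        # 4 directed entries = 2 undirected edges
avg_degree = num_edges / num_nodes    # same formula as the "Average node degree" statistic

# Undirected check: every (u, v) pair must also appear as (v, u).
edges = set(map(tuple, edge_index.t().tolist()))
is_undirected = all((v, u) in edges for (u, v) in edges)

# Made-up binary word-occurrence features for the 3 nodes.
x = torch.tensor([[1., 1., 0., 0.],
                  [0., 1., 1., 1.],
                  [0., 0., 0., 0.]])

# Assumed row normalization: divide each row by its sum so it sums to 1.
# clamp(min=1) leaves all-zero rows at zero instead of dividing by 0.
x_norm = x / x.sum(dim=1, keepdim=True).clamp(min=1)

print(f'Average node degree: {avg_degree:.2f}')  # Average node degree: 1.33
print(f'Is undirected: {is_undirected}')          # Is undirected: True
print(x_norm)
```

Applied to Cora, the same degree formula gives 10556 / 2708 directed entries per node.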

(2) Fully connected layers (MLP)

```python
import torch
from torch.nn import Linear
import torch.nn.functional as F


class MLP(torch.nn.Module):
    def __init__(self, hidden_channels):
        super().__init__()
        torch.manual_seed(12345)
        self.lin1 = Linear(dataset.num_features, hidden_channels)  # fully connected layer, 1433 -> 16
        self.lin2 = Linear(hidden_channels, dataset.num_classes)   # fully connected layer, 16 -> 7

    def forward(self, x):
        x = self.lin1(x)
        x = x.relu()
        x = F.dropout(x, p=0.5, training=self.training)
        x = self.lin2(x)
        return x


model = MLP(hidden_channels=16)  # build the model
criterion = torch.nn.CrossEntropyLoss()  # Define loss criterion.
optimizer = torch.optim.Adam(model.parameters(), lr=0.01, weight_decay=5e-4)  # Define optimizer.


def train():  # one training step
    model.train()
    optimizer.zero_grad()  # Clear gradients.
    out = model(data.x)  # Perform a single forward pass.
    # Compute the loss solely based on the labeled training nodes.
    loss = criterion(out[data.train_mask], data.y[data.train_mask])
    loss.backward()  # Derive gradients.
    optimizer.step()  # Update parameters based on gradients.
    return loss


def test():  # evaluate on the test nodes
    model.eval()
    out = model(data.x)
    pred = out.argmax(dim=1)  # Use the class with highest probability.
    test_correct = pred[data.test_mask] == data.y[data.test_mask]  # Check against ground-truth labels.
    test_acc = int(test_correct.sum()) / int(data.test_mask.sum())  # Derive ratio of correct predictions.
    return test_acc
```

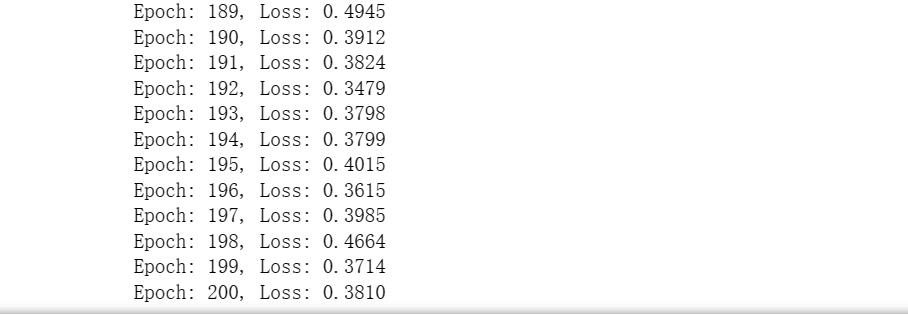

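```python
for epoch in range(1, 201):
    loss = train()
    print(f'Epoch: {epoch:03d}, Loss: {loss:.4f}')

# Evaluate the trained MLP on the test nodes.
test_acc = test()
print(f'Test Accuracy: {test_acc:.4f}')
```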

Output:

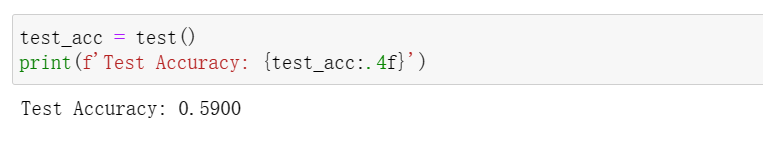

The test accuracy of the MLP is about 59%.
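Both `train()` and `test()` rely on boolean masks: only the nodes in `data.train_mask` contribute to the loss, and accuracy is computed on the separate `data.test_mask` nodes. The sketch below illustrates that masking on made-up logits for 5 hypothetical nodes and 3 classes, using `F.cross_entropy`, the functional form of `torch.nn.CrossEntropyLoss`.

```python
import torch
import torch.nn.functional as F

# Hypothetical model outputs (logits) for 5 nodes and 3 classes; numbers are made up.
out = torch.tensor([[2.0, 0.1, 0.1],
                    [0.1, 2.0, 0.1],
                    [0.1, 0.1, 2.0],
                    [2.0, 0.1, 0.1],
                    [0.1, 2.0, 0.1]])
y = torch.tensor([0, 1, 2, 1, 1])

# Boolean masks in the style of data.train_mask / data.test_mask.
train_mask = torch.tensor([True, True, False, False, False])
test_mask = ~train_mask

# Only the labeled training nodes contribute to the loss ...
loss = F.cross_entropy(out[train_mask], y[train_mask])

# ... while accuracy is measured on the disjoint test nodes.
pred = out.argmax(dim=1)
test_correct = pred[test_mask] == y[test_mask]
test_acc = int(test_correct.sum()) / int(test_mask.sum())
print(f'Test accuracy: {test_acc:.4f}')  # Test accuracy: 0.6667
```

Changing the logits of nodes outside `train_mask` leaves `loss` unchanged, which is why the model only learns from the labeled subset.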

(3) Replacing the fully connected layers with GCN layers

```python
from torch_geometric.nn import GCNConv


class GCN(torch.nn.Module):
    def __init__(self, hidden_channels):
        super().__init__()
        torch.manual_seed(1234567)
        self.conv1 = GCNConv(dataset.num_features, hidden_channels)  # GCN layer, 1433 -> 16
        self.conv2 = GCNConv(hidden_channels, dataset.num_classes)   # GCN layer, 16 -> 7

    def forward(self, x, edge_index):
        # Input: node features plus the graph connectivity
        # (edge_index, the adjacency structure stored as an edge list).
        x = self.conv1(x, edge_index)
        x = x.relu()
        x = F.dropout(x, p=0.5, training=self.training)
        # Pass the updated node features and the same connectivity to the second layer.
        x = self.conv2(x, edge_index)
        return x
```
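To see what changes when `Linear` is swapped for `GCNConv`: a linear layer transforms each node's features independently (`X W`), while the GCN layer of Kipf and Welling, which `GCNConv` implements, first mixes each node with its neighbors through the symmetrically normalized adjacency matrix with self-loops, `D^-1/2 (A + I) D^-1/2 X W`. The dense sketch below is only an illustration under stated assumptions: a tiny hypothetical graph, an undirected `edge_index`, and no bias term (`GCNConv` adds a learnable bias by default and uses sparse message passing instead of a dense matrix).

```python
import torch


def normalized_adjacency(edge_index, num_nodes):
    """Dense D^-1/2 (A + I) D^-1/2, assuming a symmetric (undirected) edge_index."""
    a = torch.zeros(num_nodes, num_nodes)
    a[edge_index[0], edge_index[1]] = 1.0        # adjacency matrix from the edge list
    a_hat = a + torch.eye(num_nodes)              # add self-loops: A + I
    deg_inv_sqrt = a_hat.sum(dim=1).pow(-0.5)     # D^-1/2, degrees include the self-loop
    return deg_inv_sqrt.view(-1, 1) * a_hat * deg_inv_sqrt.view(1, -1)


def gcn_layer_dense(x, edge_index, weight):
    """One GCN propagation step without bias: D^-1/2 (A + I) D^-1/2 X W."""
    return normalized_adjacency(edge_index, x.size(0)) @ (x @ weight)


def linear_layer(x, weight):
    """What Linear computes (bias ignored): each node only sees its own features."""
    return x @ weight


torch.manual_seed(0)
edge_index = torch.tensor([[0, 1, 1, 2],
                           [1, 0, 2, 1]])    # hypothetical path graph 0-1-2
x = torch.randn(3, 4)                         # 3 nodes, 4 made-up features
w = torch.tensor([[1.0, 0.0],
                  [0.0, 1.0],
                  [1.0, 1.0],
                  [0.5, -0.5]])               # made-up weight matrix, 4 -> 2

h_gcn = gcn_layer_dense(x, edge_index, w)
h_lin = linear_layer(x, w)

# Perturb node 1: node 0's linear output is unchanged,
# but its GCN output changes because node 1 is its neighbor.
x_perturbed = x.clone()
x_perturbed[1] += 1.0
print(torch.allclose(linear_layer(x_perturbed, w)[0], h_lin[0]))                 # True
print(torch.allclose(gcn_layer_dense(x_perturbed, edge_index, w)[0], h_gcn[0]))  # False
```

This neighbor dependence is what lets the GCN exploit the citation links that the MLP ignores.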

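Train the GCN model. The training and test code is the same as for the MLP, except that the model now receives `data.edge_index` in addition to `data.x`.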

```python
model = GCN(hidden_channels=16)
optimizer = torch.optim.Adam(model.parameters(), lr=0.01, weight_decay=5e-4)
criterion = torch.nn.CrossEntropyLoss()


def train():
    model.train()
    optimizer.zero_grad()
    out = model(data.x, data.edge_index)  # the GCN also needs the edges
    loss = criterion(out[data.train_mask], data.y[data.train_mask])
    loss.backward()
    optimizer.step()
    return loss


def test():
    model.eval()
    out = model(data.x, data.edge_index)
    pred = out.argmax(dim=1)
    test_correct = pred[data.test_mask] == data.y[data.test_mask]
    test_acc = int(test_correct.sum()) / int(data.test_mask.sum())
    return test_acc


for epoch in range(1, 101):
    loss = train()
    print(f'Epoch: {epoch:03d}, Loss: {loss:.4f}')

# Evaluate the trained GCN on the test nodes.
test_acc = test()
print(f'Test Accuracy: {test_acc:.4f}')
```

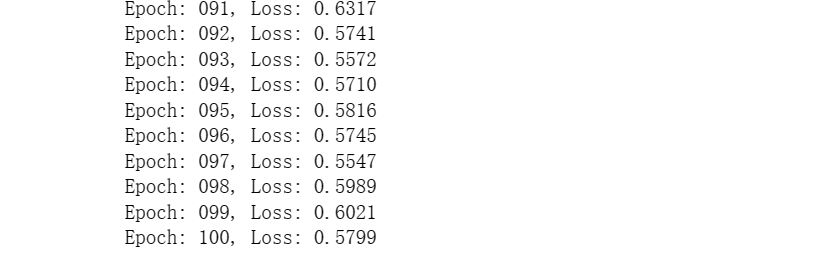

Output:

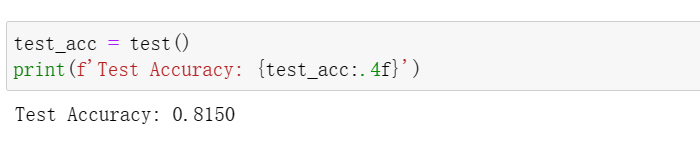

The test accuracy of the GCN rises to about 81%:

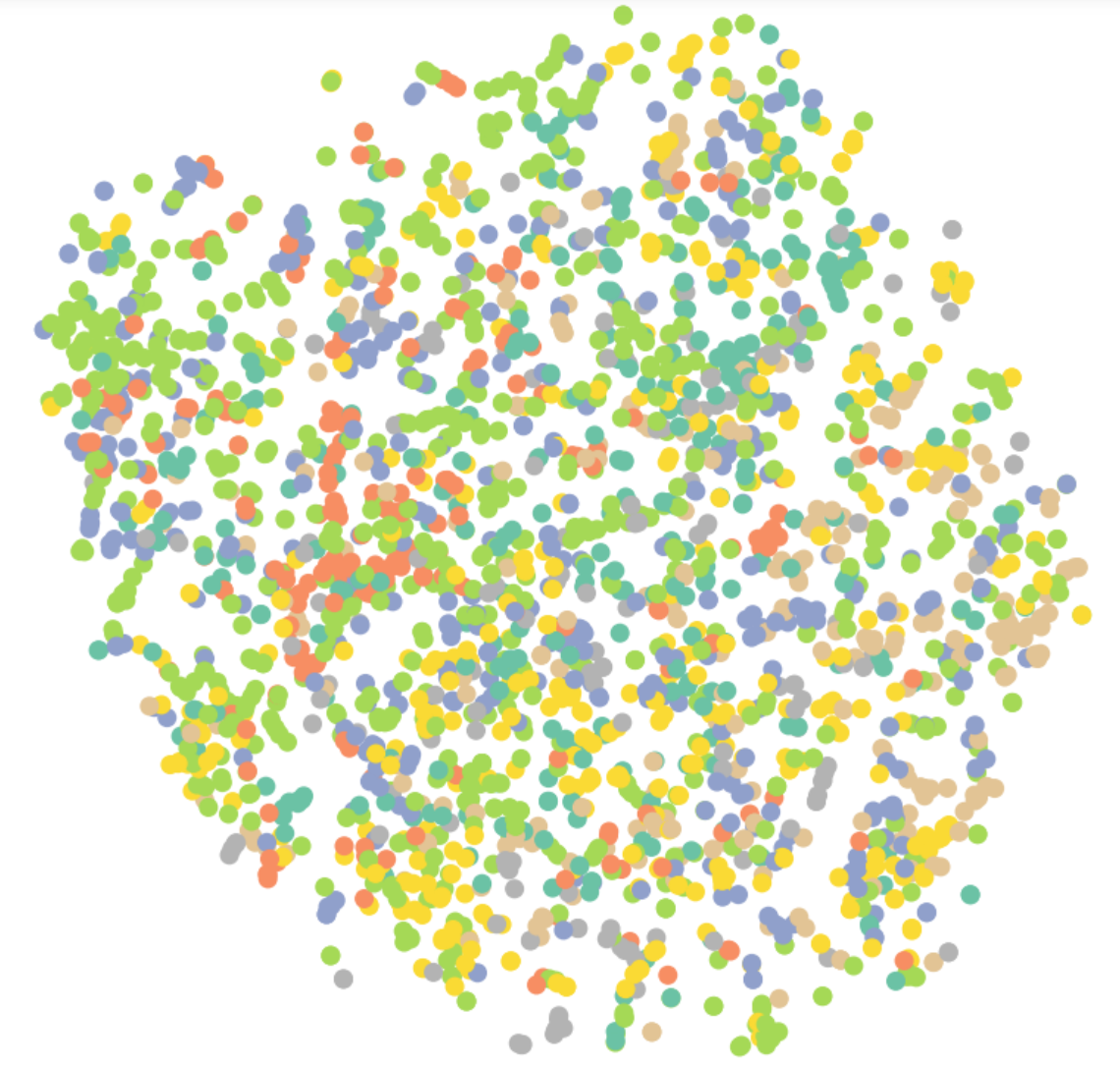

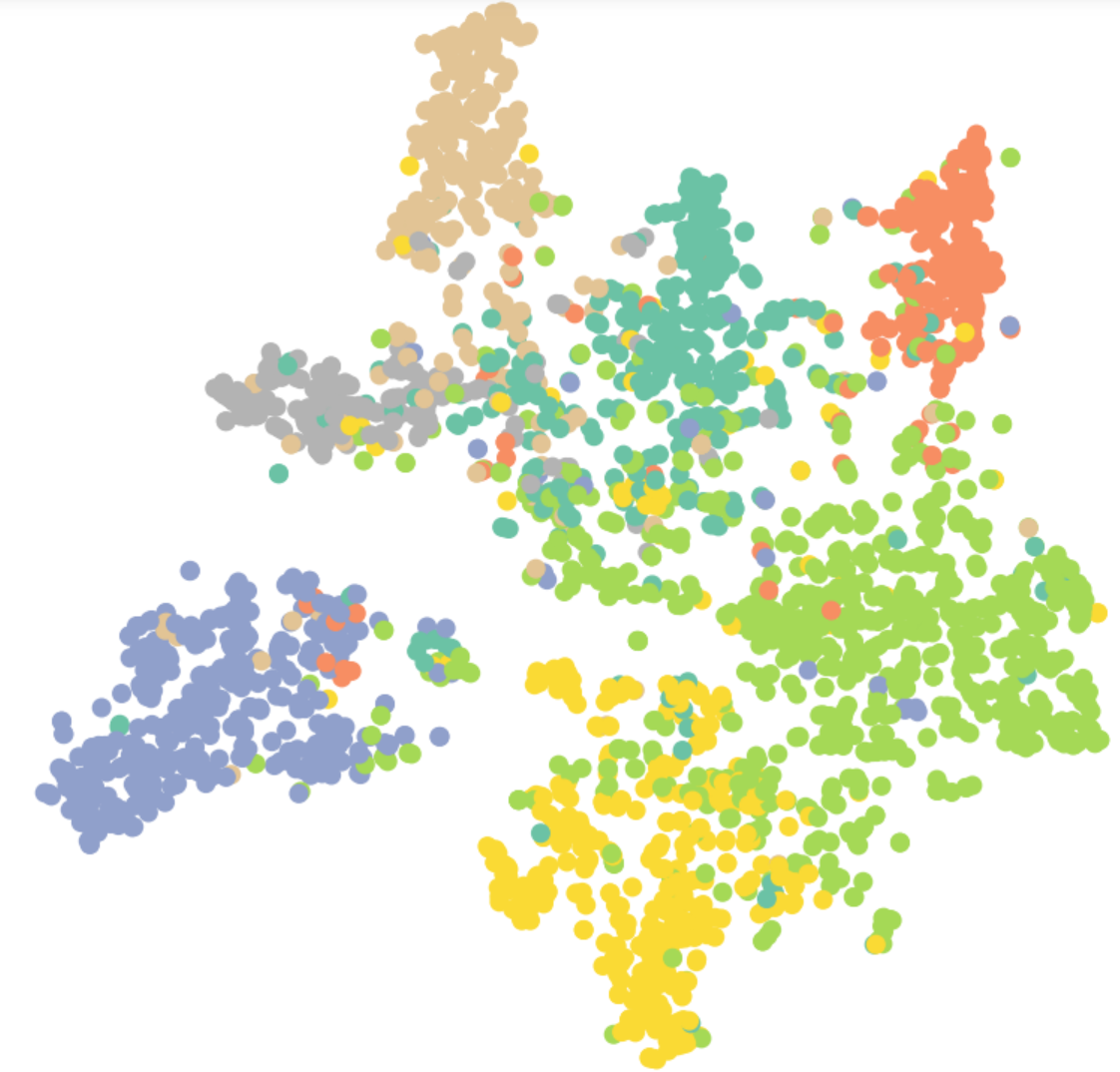

(4) Visualization

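```python
# Jupyter-only magic; drop this line when running as a plain Python script.
%matplotlib inline
import matplotlib.pyplot as plt
from sklearn.manifold import TSNE  # t-SNE for dimensionality reduction


def visualize(h, color):
    # Project the node representations to 2-D with t-SNE and color each node by its class.
    z = TSNE(n_components=2).fit_transform(h.detach().cpu().numpy())
    plt.figure(figsize=(10, 10))
    plt.xticks([])
    plt.yticks([])
    plt.scatter(z[:, 0], z[:, 1], s=70, c=color, cmap="Set2")
    plt.show()


# Output of an untrained GCN (randomly initialized weights).
# A separate variable keeps the trained `model` from step (3) intact.
untrained_model = GCN(hidden_channels=16)
untrained_model.eval()
out = untrained_model(data.x, data.edge_index)
visualize(out, color=data.y)

# Classification output of the GCN trained in step (3).
model.eval()
out = model(data.x, data.edge_index)
visualize(out, color=data.y)
```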