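Abstract: This paper presents a monocular visual-inertial system (VINS) for state estimation in a variety of environments. A monocular camera and a low-cost inertial measurement unit (IMU) form the minimum sensor suite for six-degree-of-freedom state estimation. Our algorithm iteratively optimizes visual and inertial measurements within a bounded sliding window to achieve accurate state estimation. The visual structure is maintained through keyframes in the sliding window, while the inertial measurements are kept through preintegration between keyframes. Our system is robust to initialization from unknown states and provides online camera-IMU extrinsic calibration, a unified reprojection error defined on a sphere, loop detection, and four-degree-of-freedom pose graph optimization. We validate the performance of our system on public datasets and in real-world experiments by comparing it against other state-of-the-art algorithms. We also perform closed-loop autonomous flight on a MAV platform and port the algorithm to an iOS application. We highlight that the proposed work is a reliable and complete system that can be readily deployed on other intelligent platforms. We open-source our implementation together with an augmented reality (AR) application for iOS mobile devices.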

1. Overview

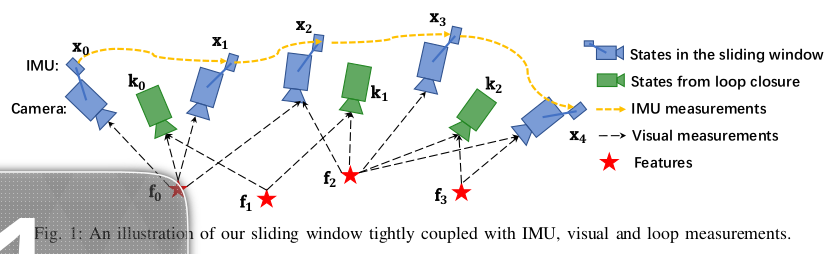

The structure of the proposed visual-inertial system is shown in Fig. 1.

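The first part is the measurement processing front end, which extracts and tracks features for each new image frame and preintegrates all IMU data between two frames. The second part is the initialization procedure, which provides the initial values (pose, velocity, gravity vector, gyroscope bias, and 3D feature locations) needed to bootstrap the nonlinear system. The third part performs nonlinear graph optimization, solving for the states in the sliding window by jointly optimizing all visual and inertial information. A fourth part, running in a separate thread, handles loop closure detection and pose graph optimization.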

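Notation: $(\cdot)^w$ denotes the world frame, in which the gravity vector is aligned with the z axis. $(\cdot)^b$ is the body frame, which is aligned with the IMU frame. $(\cdot)^c$ is the camera frame. We use both rotation matrices $R$ and quaternions $q$ to represent rotation. The quaternions follow the Hamilton convention. We use quaternions in the state, while in some equations we use the corresponding rotation matrix to multiply vectors. $q^w_b$ and $p^w_b$ are the rotation and translation from the body frame to the world frame. $b_k$ is the body frame at the time the $k$-th image is taken, and $c_k$ is the camera frame at the time the $k$-th image is taken. $\otimes$ is the quaternion multiplication operator. $g^w = [0, 0, g]^T$ is the gravity vector in the world frame.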
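To make the notation concrete, here is a minimal sketch of the Hamilton quaternion product and of rotating a vector with a unit quaternion. The storage order `(w, x, y, z)` and the function names `quat_mul`, `quat_conj`, and `rotate` are assumptions made for this illustration; they are not taken from the authors' code.

```python
import math


def quat_mul(p, q):
    """Hamilton product p ⊗ q for quaternions stored as (w, x, y, z)."""
    pw, px, py, pz = p
    qw, qx, qy, qz = q
    return (
        pw * qw - px * qx - py * qy - pz * qz,
        pw * qx + px * qw + py * qz - pz * qy,
        pw * qy - px * qz + py * qw + pz * qx,
        pw * qz + px * qy - py * qx + pz * qw,
    )


def quat_conj(q):
    """Conjugate, which equals the inverse for a unit quaternion."""
    w, x, y, z = q
    return (w, -x, -y, -z)


def rotate(q, v):
    """Rotate the 3-vector v by the unit quaternion q via q ⊗ [0, v] ⊗ q*."""
    _, x, y, z = quat_mul(quat_mul(q, (0.0, v[0], v[1], v[2])), quat_conj(q))
    return (x, y, z)


# Example: a body frame yawed by 90 degrees about the world z axis.
half_angle = math.pi / 4
q_wb = (math.cos(half_angle), 0.0, 0.0, math.sin(half_angle))
v_w = rotate(q_wb, (1.0, 0.0, 0.0))  # body x axis expressed in the world frame
```

With the Hamilton convention the basis elements satisfy `i ⊗ j = k`, which is a quick way to check which convention a given library follows.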

2. Measurement Preprocessing

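In this section, we preprocess the visual and inertial measurements. For visual measurements, we track features between consecutive frames and detect new features in the latest frame. For IMU measurements, we preintegrate them between two consecutive frames. Note that IMU measurements are affected by both bias and noise. We therefore take bias into account explicitly in the IMU preintegration and optimization parts, which is essential for low-cost IMU chips.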

A. Vision Processing Front End

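For each new image, existing features are tracked by the KLT sparse optical flow algorithm [26]. Meanwhile, new corner features are detected [27] to maintain a minimum number of features (100-300) in each image. The detector enforces a uniform feature distribution by setting a minimum pixel separation between two neighboring features. After passing outlier rejection, the feature points are projected onto a unit sphere. Outlier rejection is performed by RANSAC with a fundamental matrix test. Keyframes are also selected in this step. We have two criteria for keyframe selection. The first is the average parallax: if the average parallax of the tracked features exceeds a certain threshold, we treat the image as a keyframe. Note that rotation as well as translation can cause parallax; however, features cannot be triangulated under rotation-only motion. To avoid this situation, we use the IMU propagation result to compensate for rotation when computing the parallax. The second criterion is tracking quality: if the number of tracked features falls below a certain threshold, we also treat the frame as a keyframe.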
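The two keyframe criteria can be sketched as follows. This is an illustration under stated assumptions, not the authors' implementation: the function names, the representation of a track as a pair of bearing vectors, and the threshold values `min_parallax` and `min_tracked` are all hypothetical. `R_prev_cur` stands for the relative rotation obtained from IMU propagation, mapping vectors from the current camera frame into the previous one.

```python
import math


def mat_vec(R, v):
    """Multiply a 3x3 matrix (nested sequences) by a 3-vector."""
    return tuple(sum(R[r][c] * v[c] for c in range(3)) for r in range(3))


def compensated_parallax(bearing_prev, bearing_cur, R_prev_cur):
    """Parallax in normalized image coordinates after removing rotation.

    The current bearing is first rotated into the previous camera frame,
    so any remaining displacement is attributed to translation.
    """
    x, y, z = mat_vec(R_prev_cur, bearing_cur)
    u_cur, v_cur = x / z, y / z
    u_prev = bearing_prev[0] / bearing_prev[2]
    v_prev = bearing_prev[1] / bearing_prev[2]
    return math.hypot(u_cur - u_prev, v_cur - v_prev)


def is_keyframe(tracks, R_prev_cur, min_parallax=0.02, min_tracked=20):
    """Apply the two criteria; both thresholds are hypothetical values.

    tracks: list of (bearing_prev, bearing_cur) pairs for tracked features.
    """
    # Criterion 2 (tracking quality): too few tracked features.
    if len(tracks) < min_tracked or not tracks:
        return True
    # Criterion 1 (average parallax), computed after rotation compensation.
    total = sum(compensated_parallax(p, c, R_prev_cur) for p, c in tracks)
    return total / len(tracks) > min_parallax
```

Under a pure rotation the compensated parallax is close to zero, so the frame is not promoted to a keyframe unless tracking quality has dropped.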

B. IMU Preintegration

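We extend the IMU preintegration proposed in our previous work [7] by incorporating IMU bias correction. Compared with [28], we derive the noise propagation in continuous-time dynamics. In addition, the IMU preintegration results are also used in the initialization procedure to calibrate the initial states.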

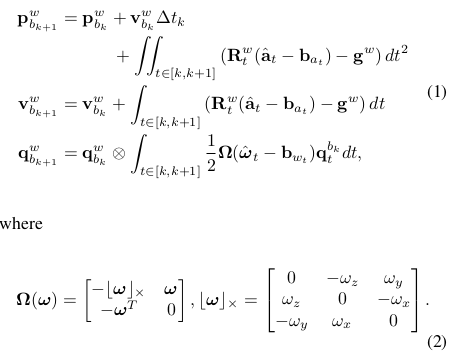

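Given two time instants that correspond to image frames $b_k$ and $b_{k+1}$, the state variables are constrained by the inertial measurements over the time interval $[k, k+1]$.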

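$\Delta t_k$ is the duration of the time interval $[k, k+1]$. $\hat{\omega}_t$ and $\hat{a}_t$ are the raw IMU measurements, which are expressed in the body frame and are affected by the accelerometer bias $b_a$, the gyroscope bias $b_w$, and noise.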

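It can be seen that IMU state propagation requires the rotation, position, and velocity of frame $b_k$. When these starting states change, we need to re-propagate the IMU measurements. In optimization-based algorithms in particular, every time the poses are adjusted, the IMU measurements between them have to be re-propagated. This propagation strategy is computationally expensive. To avoid re-propagation, we adopt the preintegration algorithm.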

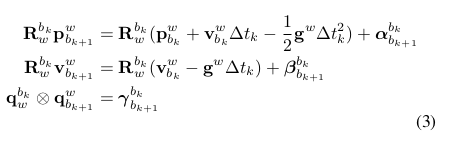

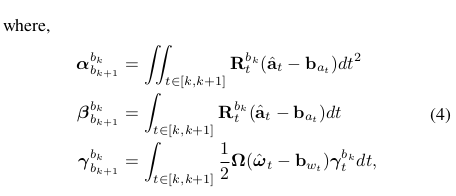

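After changing the reference frame of the IMU propagation to the local frame $b_k$, we can preintegrate only the parts that depend on the linear acceleration $\hat{a}$ and the angular velocity $\hat{\omega}$, as follows: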

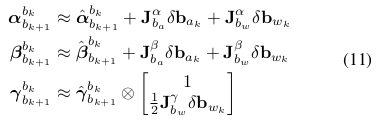

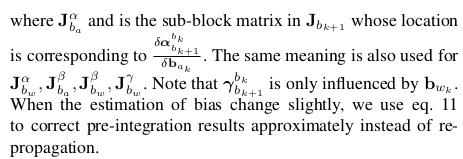

"γbkbk" 是初始的单位四元数。可以看出,通过将 bk 作为基础框架,仅使用 IMU 测量值就可以获得预积分部分(4)。αbkbk+1,βbkbk+1和γbkbk+1仅与IMU偏差有关,而与bk和bk+1中的其他状态无关。在开始时,加速度计偏差和陀螺仪偏差都为零。当偏差的估计值发生变化时,如果变化很小,我们通过对偏差的一阶近似进行调整 αbkbk+1,βbkbk+1和γbkbk+1,否则我们进行重新传播。这种策略为基于优化的算法节省了大量的计算资源,因为我们不需要一遍又一遍地传播IMU测量值。

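For the discrete-time implementation, various numerical integration methods can be applied, such as Euler, mid-point, or RK4 integration. Euler integration is chosen here to demonstrate the procedure because it is easy to follow (the implementation code uses the mid-point method).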

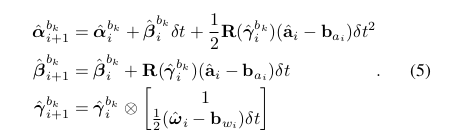

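At the beginning, $\alpha^{b_k}_{b_k}$ and $\beta^{b_k}_{b_k}$ are zero, and $\gamma^{b_k}_{b_k}$ is the identity quaternion, which corresponds to the identity rotation matrix. The mean is propagated step by step as follows: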

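$i$ and $i+1$ are two discrete instants corresponding to two IMU measurements within $[k, k+1]$. $\delta t$ is the time interval between IMU measurements $i$ and $i+1$. We then deal with the covariance propagation. We assume that the noise in the accelerometer and gyroscope measurements is Gaussian white noise, $n_a \sim \mathcal{N}(0, \sigma_a^2)$, $n_w \sim \mathcal{N}(0, \sigma_w^2)$. The accelerometer bias and gyroscope bias are modeled as random walks whose derivatives are Gaussian white noise, $n_{b_a} \sim \mathcal{N}(0, \sigma_{b_a}^2)$, $n_{b_w} \sim \mathcal{N}(0, \sigma_{b_w}^2)$.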
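Returning to the mean propagation, the Euler update of the three preintegration terms can be sketched as below. This is a minimal illustration that assumes quaternions stored as `(w, x, y, z)`, a sample layout of `(dt, acc, gyr)` tuples, and an explicit renormalization of the quaternion after each step; the function names are hypothetical, and the released implementation uses the mid-point method instead.

```python
import math


def quat_mul(p, q):
    """Hamilton product for quaternions stored as (w, x, y, z)."""
    pw, px, py, pz = p
    qw, qx, qy, qz = q
    return (
        pw * qw - px * qx - py * qy - pz * qz,
        pw * qx + px * qw + py * qz - pz * qy,
        pw * qy - px * qz + py * qw + pz * qx,
        pw * qz + px * qy - py * qx + pz * qw,
    )


def quat_rotate(q, v):
    """Apply the rotation matrix of unit quaternion q to the 3-vector v."""
    w, x, y, z = q
    R = (
        (1 - 2 * (y * y + z * z), 2 * (x * y - w * z), 2 * (x * z + w * y)),
        (2 * (x * y + w * z), 1 - 2 * (x * x + z * z), 2 * (y * z - w * x)),
        (2 * (x * z - w * y), 2 * (y * z + w * x), 1 - 2 * (x * x + y * y)),
    )
    return [sum(R[r][c] * v[c] for c in range(3)) for r in range(3)]


def preintegrate_euler(samples, b_a, b_w):
    """Euler preintegration of alpha, beta, gamma in the local frame b_k.

    samples: iterable of (dt, acc, gyr) with raw body-frame measurements.
    b_a, b_w: current accelerometer and gyroscope bias estimates.
    """
    alpha = [0.0, 0.0, 0.0]
    beta = [0.0, 0.0, 0.0]
    gamma = (1.0, 0.0, 0.0, 0.0)  # identity quaternion
    for dt, acc, gyr in samples:
        # Bias-corrected acceleration rotated into frame b_k.
        a = quat_rotate(gamma, [acc[i] - b_a[i] for i in range(3)])
        # alpha uses the old beta, so it is updated first.
        alpha = [alpha[i] + beta[i] * dt + 0.5 * a[i] * dt * dt for i in range(3)]
        beta = [beta[i] + a[i] * dt for i in range(3)]
        # Small-angle quaternion increment [1, 0.5 * (gyr - b_w) * dt].
        dq = (1.0,) + tuple(0.5 * (gyr[i] - b_w[i]) * dt for i in range(3))
        g = quat_mul(gamma, dq)
        norm = math.sqrt(sum(c * c for c in g))
        gamma = tuple(c / norm for c in g)  # renormalize (added assumption)
    return alpha, beta, gamma
```

Because the result depends only on the raw measurements and the biases, it can be computed once per frame pair and reused while the optimizer adjusts the poses and velocities of $b_k$ and $b_{k+1}$.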

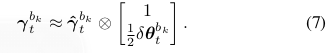

Since $\gamma^{b_k}_t$ is over-parameterized, we define its error term as a perturbation.

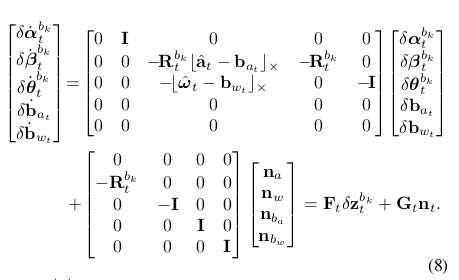

From Eq. (4) and Eq. (6) we can derive the continuous-time linearized dynamics of the error terms.

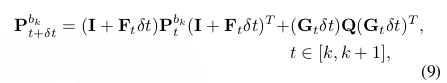

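Here $\lfloor \cdot \rfloor_\times$ is the cross-product (skew-symmetric) matrix operation. Details on the quaternion error-state representation can be found in [29]. $P^{b_k}_{b_{k+1}}$ can be computed recursively by a first-order discrete-time covariance update, with the initial covariance $P^{b_k}_{b_k} = 0$.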

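Here $\delta t$ is the time between two IMU measurements, and $Q$ is the diagonal covariance matrix of the noise $(\sigma_a^2, \sigma_w^2, \sigma_{b_a}^2, \sigma_{b_w}^2)$.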

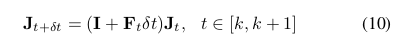

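Meanwhile, the first-order Jacobian matrix $J_{b_{k+1}}$ of $\delta z^{b_k}_{b_{k+1}}$ with respect to $\delta z^{b_k}_{b_k}$ can also be computed recursively, with the initial Jacobian $J_{b_k} = I$.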
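The two recursions can be sketched with plain nested lists. The sketch assumes the usual first-order discretization, $P \leftarrow (I + F\delta t)\,P\,(I + F\delta t)^T + (G\delta t)\,Q\,(G\delta t)^T$ and $J \leftarrow (I + F\delta t)\,J$, where $F$ and $G$ are the matrices of the linearized error dynamics. Here they are simply passed in by the caller, and the helper names are hypothetical.

```python
def identity(n):
    """n x n identity matrix as nested lists."""
    return [[1.0 if r == c else 0.0 for c in range(n)] for r in range(n)]


def transpose(A):
    return [list(col) for col in zip(*A)]


def matmul(A, B):
    cols_b = list(zip(*B))
    return [[sum(a * b for a, b in zip(row, col)) for col in cols_b] for row in A]


def add(A, B):
    return [[a + b for a, b in zip(ra, rb)] for ra, rb in zip(A, B)]


def scale(A, s):
    return [[a * s for a in row] for row in A]


def propagate_step(P, J, F, G, Q, dt):
    """One first-order discrete-time update of covariance P and Jacobian J.

    F, G: matrices of the linearized error dynamics at the current sample.
    Q: noise covariance. dt: time between two IMU measurements.
    """
    Phi = add(identity(len(F)), scale(F, dt))  # I + F * dt
    Gd = scale(G, dt)                          # G * dt
    P_next = add(
        matmul(matmul(Phi, P), transpose(Phi)),
        matmul(matmul(Gd, Q), transpose(Gd)),
    )
    J_next = matmul(Phi, J)
    return P_next, J_next
```

As a toy usage, a two-state error model (position error driven by velocity error, velocity error driven by white noise) would use `F = [[0, 1], [0, 0]]` and `G = [[0], [1]]`, starting from `P = 0` and `J = I` and calling `propagate_step` once per IMU sample. In the full system the state also contains the rotation error and both biases.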

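Proceeding recursively, we obtain the covariance matrix $P^{b_k}_{b_{k+1}}$ and the Jacobian $J_{b_{k+1}}$. The first-order approximation of $\alpha^{b_k}_{b_{k+1}}$, $\beta^{b_k}_{b_{k+1}}$, and $\gamma^{b_k}_{b_{k+1}}$ with respect to the biases can be written as: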

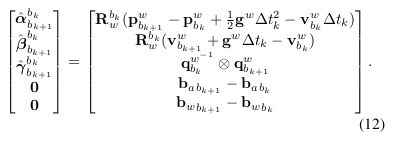
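A first-order bias correction of this kind can be sketched as follows, assuming the common form in which $\alpha$ and $\beta$ are corrected additively and $\gamma$ is corrected by a small-angle quaternion increment. The dictionary keys for the Jacobian sub-blocks, the function names, and the final renormalization are assumptions made for this illustration.

```python
import math


def quat_mul(p, q):
    """Hamilton product for quaternions stored as (w, x, y, z)."""
    pw, px, py, pz = p
    qw, qx, qy, qz = q
    return (
        pw * qw - px * qx - py * qy - pz * qz,
        pw * qx + px * qw + py * qz - pz * qy,
        pw * qy - px * qz + py * qw + pz * qx,
        pw * qz + px * qy - py * qx + pz * qw,
    )


def mat_vec(J, v):
    """Multiply a 3x3 Jacobian block by a 3-vector."""
    return [sum(J[r][c] * v[c] for c in range(3)) for r in range(3)]


def correct_for_bias(alpha, beta, gamma, jac, d_ba, d_bw):
    """Adjust preintegrated terms for a small bias change (d_ba, d_bw).

    jac: dict of 3x3 blocks of the preintegration Jacobian with the
    hypothetical keys 'alpha_ba', 'alpha_bw', 'beta_ba', 'beta_bw', 'gamma_bw'.
    """
    da = [x + y for x, y in zip(mat_vec(jac["alpha_ba"], d_ba),
                                mat_vec(jac["alpha_bw"], d_bw))]
    db = [x + y for x, y in zip(mat_vec(jac["beta_ba"], d_ba),
                                mat_vec(jac["beta_bw"], d_bw))]
    alpha_new = [alpha[i] + da[i] for i in range(3)]
    beta_new = [beta[i] + db[i] for i in range(3)]
    # Small-angle increment [1, 0.5 * J_gamma_bw * d_bw] applied on the right.
    half = [0.5 * x for x in mat_vec(jac["gamma_bw"], d_bw)]
    g = quat_mul(gamma, (1.0, half[0], half[1], half[2]))
    norm = math.sqrt(sum(c * c for c in g))
    gamma_new = tuple(c / norm for c in g)  # renormalize (added assumption)
    return alpha_new, beta_new, gamma_new
```

The correction costs a few small matrix-vector products per frame pair, whereas re-propagation has to revisit every IMU sample in the interval.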

We can now write down the IMU measurement model together with its corresponding covariance $P^{b_k}_{b_{k+1}}$.

3. Initialization

References

[1] G. Klein and D. Murray, “Parallel tracking and mapping for small AR workspaces,” in Mixed and Augmented Reality, 2007. ISMAR 2007. 6th IEEE and ACM International Symposium on. IEEE, 2007, pp. 225–234.

[2] C. Forster, M. Pizzoli, and D. Scaramuzza, “SVO: Fast semi-direct monocular visual odometry,” in Proc. of the IEEE Int. Conf. on Robot. and Autom., Hong Kong, China, May 2014.

[3] J. Engel, T. Schöps, and D. Cremers, “LSD-SLAM: Large-scale direct monocular SLAM,” in European Conference on Computer Vision. Springer International Publishing, 2014, pp. 834–849.

[4] R. Mur-Artal, J. Montiel, and J. D. Tardos, “ORB-SLAM: a versatile and accurate monocular SLAM system,” IEEE Transactions on Robotics, vol. 31, no. 5, pp. 1147–1163, 2015.

[5] J. Engel, V. Koltun, and D. Cremers, “Direct sparse odometry,” IEEE Transactions on Pattern Analysis and Machine Intelligence, 2017.

[6] S. Shen, Y. Mulgaonkar, N. Michael, and V. Kumar, “Initialization-free monocular visual-inertial estimation with application to autonomous MAVs,” in Proc. of the Int. Sym. on Exp. Robot., Marrakech, Morocco, 2014.

[7] S. Shen, N. Michael, and V. Kumar, “Tightly-coupled monocular visual-inertial fusion for autonomous flight of rotorcraft MAVs,” in Proc. of the IEEE Int. Conf. on Robot. and Autom., Seattle, WA, May 2015.

[8] M. Faessler, F. Fontana, C. Forster, and D. Scaramuzza, “Automatic re-initialization and failure recovery for aggressive flight with a monocular vision-based quadrotor,” in Proc. of the IEEE Int. Conf. on Robot. and Autom. IEEE, 2015, pp. 1722–1729.

[9] Z. Yang and S. Shen, “Monocular visual–inertial state estimation with online initialization and camera–IMU extrinsic calibration,” IEEE Transactions on Automation Science and Engineering, vol. 14, no. 1, pp. 39–51, 2017.

[10] M. Bloesch, S. Omari, M. Hutter, and R. Siegwart, “Robust visual inertial odometry using a direct EKF-based approach,” in Proc. of the IEEE/RSJ Int. Conf. on Intell. Robots and Syst. IEEE, 2015, pp. 298–304.

[11] S. Leutenegger, S. Lynen, M. Bosse, R. Siegwart, and P. Furgale, “Keyframe-based visual-inertial odometry using nonlinear optimization,” Int. J. Robot. Research, vol. 34, no. 3, pp. 314–334, Mar. 2014.

[12] Y. Ling, T. Liu, and S. Shen, “Aggressive quadrotor flight using dense visual-inertial fusion,” in Proc. of the IEEE Int. Conf. on Robot. and Autom. IEEE, 2016, pp. 1499–1506.

[13] V. Usenko, J. Engel, J. Stückler, and D. Cremers, “Direct visual-inertial odometry with stereo cameras,” in Proc. of the IEEE Int. Conf. on Robot. and Autom. IEEE, 2016, pp. 1885–1892.

[14] A. S. Huang, A. Bachrach, P. Henry, M. Krainin, D. Maturana, D. Fox, and N. Roy, “Visual odometry and mapping for autonomous flight using an RGB-D camera,” in Proc. of the Int. Sym. of Robot. Research, Flagstaff, AZ, Aug. 2011.

[15] A. I. Mourikis and S. I. Roumeliotis, “A multi-state constraint Kalman filter for vision-aided inertial navigation,” in Proc. of the IEEE Int. Conf. on Robot. and Autom., Roma, Italy, Apr. 2007, pp. 3565–3572.

[16] J. Kelly and G. S. Sukhatme, “Visual-inertial sensor fusion: Localization, mapping and sensor-to-sensor self-calibration,” Int. J. Robot. Research, vol. 30, no. 1, pp. 56–79, Jan. 2011.

[17] J. A. Hesch, D. G. Kottas, S. L. Bowman, and S. I. Roumeliotis, “Consistency analysis and improvement of vision-aided inertial navigation,” IEEE Trans. Robot., vol. 30, no. 1, pp. 158–176, Feb. 2014.

[18] S. Lynen, M. W. Achtelik, S. Weiss, M. Chli, and R. Siegwart, “A robust and modular multi-sensor fusion approach applied to MAV navigation,” in Proc. of the IEEE/RSJ Int. Conf. on Intell. Robots and Syst. IEEE, 2013, pp. 3923–3929.

[19] M. Li and A. Mourikis, “High-precision, consistent EKF-based visual-inertial odometry,” Int. J. Robot. Research, vol. 32, no. 6, pp. 690–711, May 2013.

[20] G. Sibley, L. Matthies, and G. Sukhatme, “Sliding window filter with application to planetary landing,” J. Field Robot., vol. 27, no. 5, pp. 587–608, Sep. 2010.

[21] C. Forster, L. Carlone, F. Dellaert, and D. Scaramuzza, “IMU preintegration on manifold for efficient visual-inertial maximum-a-posteriori estimation,” in Proc. of Robot.: Sci. and Syst., Rome, Italy, Jul. 2015.

[22] A. Martinelli, “Closed-form solution of visual-inertial structure from motion,” Int. J. Comput. Vis., vol. 106, no. 2, pp. 138–152, 2014.

[23] J. Kaiser, A. Martinelli, F. Fontana, and D. Scaramuzza, “Simultaneous state initialization and gyroscope bias calibration in visual inertial aided navigation,” IEEE Robotics and Automation Letters, vol. 2, no. 1, pp. 18–25, 2017.

[24] D. Gálvez-López and J. D. Tardós, “Bags of binary words for fast place recognition in image sequences,” IEEE Transactions on Robotics, vol. 28, no. 5, pp. 1188–1197, October 2012.

[25] H. Strasdat, J. Montiel, and A. J. Davison, “Scale drift-aware large scale monocular SLAM,” Robotics: Science and Systems VI, 2010.

[26] B. D. Lucas and T. Kanade, “An iterative image registration technique with an application to stereo vision,” in Proc. of the Intl. Joint Conf. on Artificial Intelligence, Vancouver, Canada, Aug. 1981, pp. 24–28.

[27] J. Shi and C. Tomasi, “Good features to track,” in Computer Vision and Pattern Recognition, 1994. Proceedings CVPR’94., 1994 IEEE Computer Society Conference on. IEEE, 1994, pp. 593–600.

[28] C. Forster, L. Carlone, F. Dellaert, and D. Scaramuzza, “On-manifold preintegration for real-time visual-inertial odometry,” IEEE Transactions on Robotics (TRO), 2016.

[29] N. Trawny and S. I. Roumeliotis, “Indirect Kalman filter for 3D attitude estimation,” University of Minnesota, Dept. of Comp. Sci. & Eng., Tech. Rep, vol. 2, p. 2005, 2005.

[30] A. Heyden and M. Pollefeys, “Multiple view geometry,” Emerging Topics in Computer Vision, 2005.

[31] D. Nistér, “An efficient solution to the five-point relative pose problem,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 26, no. 6, pp. 756–770, 2004.

[32] B. Triggs, P. F. McLauchlan, R. I. Hartley, and A. W. Fitzgibbon, “Bundle adjustment - a modern synthesis,” in International workshop on vision algorithms. Springer, 1999, pp. 298–372.

[33] S. Agarwal, K. Mierle, and Others, “Ceres solver,” http://ceres-solver.org.

[34] M. Calonder, V. Lepetit, C. Strecha, and P. Fua, “BRIEF: Binary robust independent elementary features,” Computer Vision–ECCV 2010, pp. 778–792, 2010.

[35] E. Rosten and T. Drummond, “Machine learning for high-speed corner detection,” Computer Vision–ECCV 2006, pp. 430–443, 2006.

[36] M. Burri, J. Nikolic, P. Gohl, T. Schneider, J. Rehder, S. Omari, M. W. Achtelik, and R. Siegwart, “The EuRoC micro aerial vehicle datasets,” The International Journal of Robotics Research, 2016.

[37] C. Mei and P. Rives, “Single view point omnidirectional camera calibration from planar grids,” in Robotics and Automation, 2007 IEEE International Conference on. IEEE, 2007, pp. 3945–3950.

[38] L. Heng, B. Li, and M. Pollefeys, “CamOdoCal: Automatic intrinsic and extrinsic calibration of a rig with multiple generic cameras and odometry,” in Intelligent Robots and Systems (IROS), 2013 IEEE/RSJ International Conference on. IEEE, 2013, pp. 1793–1800.
