train

[Finding the Root of the Anchor-based and Anchor-free Performance Gap] Adaptive Training Sample Selection (ATSS): A Close Reading of the Paper

Original title: Bridging the Gap Between Anchor-based and Anchor-free Detection via Adaptive Training Sample Selection. Title in translation: narrowing, via adaptive training sample selection, the Anchor-based and Anch ......

GraphPrompt: Unifying Pre-Training and Downstream Tasks for Graph Neural Networks

Contents: Overview, Notation, GraphPrompt, Code. Liu Z., Yu X., Fang Y. and Zhang X. GraphPrompt: Unifying pre-training and downstream tasks for graph neural networks. WWW, 2023. ......

GPT-GNN: Generative Pre-Training of Graph Neural Networks

Contents: Overview, Notation, GPT-GNN, Code. Hu Z., Dong Y., Wang K., Chang K. and Sun Y. GPT-GNN: Generative pre-training of graph neural networks. KDD, 2020. Overview: a relatively early graph pre-training model. Notation ......

CF814B An express train to reveries

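A nice thinking problem; the guarantee that a solution exists greatly reduces the coding difficulty. Clearly the two sequences differ in at most two positions, otherwise by the pigeonhole principle one of them would differ from the permutation in more than one position. Then do a straightforward case analysis. Case 1: only one position differs. The remaining positions must equal the permutation, otherwise one sequence would differ from it in more than one position. Then simply put the one unused number at this position. Case 2: two positions differ. The remaining positions clearly equal the permutation as well. ......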
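The case analysis above can be sketched in Python. This is a minimal sketch rather than the post's own code: the names `recover_permutation` and `mismatches` are hypothetical, and it assumes the setting described in the excerpt, namely two sequences `a` and `b` of equal length that each differ from the hidden permutation in exactly one position, with a valid answer guaranteed to exist.

```python
def mismatches(p, s):
    """Count positions where p and s hold different values."""
    return sum(1 for u, v in zip(p, s) if u != v)


def recover_permutation(a, b):
    """Return a permutation of 1..n that differs from a in exactly one
    position and from b in exactly one position (assumed to exist)."""
    n = len(a)
    diff = [i for i in range(n) if a[i] != b[i]]
    p = list(a)  # positions where a and b agree are copied unchanged
    used = {a[i] for i in range(n) if a[i] == b[i]}
    missing = [v for v in range(1, n + 1) if v not in used]

    if len(diff) == 1:
        # Case 1: place the single unused value at the differing position.
        p[diff[0]] = missing[0]
        return p

    # Case 2: try both ways of placing the two unused values.
    i, j = diff
    for x, y in (missing, missing[::-1]):
        p[i], p[j] = x, y
        if mismatches(p, a) == 1 and mismatches(p, b) == 1:
            return p
    raise ValueError("no valid permutation; the input breaks the guarantee")


print(recover_permutation([1, 1, 3, 4], [1, 4, 3, 4]))
print(recover_permutation([4, 4, 2, 3, 1], [5, 4, 5, 3, 1]))
```

In the two-position case more than one answer may be valid; the sketch returns the first assignment that passes both checks.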

Study Notes 425: Introduction to the train_test_split Function

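Introduction to the train_test_split function. In machine learning we usually split the original data by a given ratio into a "test set" and a "training set" by calling the train_test_split function from sklearn.model_selection. Basic usage: X_train, X_test, y_train, y_test = skl ......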
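To make the idea of a proportional split concrete, here is a minimal pure-Python sketch. It is not scikit-learn's implementation: the helper `split_by_ratio` and its parameters `test_ratio` and `seed` are hypothetical, and the sketch only mimics a shuffle-then-cut split, returning its results in the same order as the X_train, X_test, y_train, y_test unpacking shown above.

```python
import random


def split_by_ratio(X, y, test_ratio=0.25, seed=0):
    """Shuffle the sample indices, then cut them into test and train parts."""
    if len(X) != len(y):
        raise ValueError("X and y must have the same length")
    indices = list(range(len(X)))
    random.Random(seed).shuffle(indices)  # seeded so the split is repeatable
    n_test = int(round(len(X) * test_ratio))
    test_idx, train_idx = indices[:n_test], indices[n_test:]
    X_train = [X[i] for i in train_idx]
    X_test = [X[i] for i in test_idx]
    y_train = [y[i] for i in train_idx]
    y_test = [y[i] for i in test_idx]
    return X_train, X_test, y_train, y_test


# Hypothetical toy data: 8 samples with one feature each.
X = [[i] for i in range(8)]
y = [i % 2 for i in range(8)]
X_train, X_test, y_train, y_test = split_by_ratio(X, y, test_ratio=0.25)
print(len(X_train), len(X_test))
```

With scikit-learn installed, the corresponding library call is `train_test_split(X, y, test_size=0.25, random_state=0)`; the exact samples chosen will differ from this sketch.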

Training language models to follow instructions with human feedback

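Formal notice: see the title for the original paper; in case of any infringement, please contact the author and this post will be taken down! NeurIPS 2022 ......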

train the model model.fit

#train the model history = model.fit(x_train, y_train, batch_size=32, epochs=100, validation_split=0.1, shuffle=True, class_weight=class_weights, call ......

Proj CDeepFuzz Paper Reading: SparseProp: Efficient Sparse Backpropagation for Faster Training of Neural Networks

## Abstract This paper: SparseProp Github: https://github.com/IST-DASLab/sparseprop Task: a back-propagation algo for sparse training data, a fast vectorized i ......

Paper Walkthrough (CST): "Cycle Self-Training for Domain Adaptation"

Note: [ WeChat: Y466551 | feel free to add me, no harassment please; paid consulting ] Paper information. Title: Cycle Self-Training for Domain Adaptation. Authors: Hong Liu, Jianmin Wang, Mingsheng Long. Source: 2021. Paper link: down ......

Proj CDeepFuzz Paper Reading: PELICAN: Exploiting Backdoors of Naturally Trained Deep Learning Models In Binary Code Analysis

## Abstract Background: 1. This paper studies not maliciously implanted backdoors but products of defects in training 2. Attack pattern: injecting some small fixed input pattern(backdoor) to induce misclassifi ......

Proj CDeepFuzz Paper Reading: Natural attack for pre-trained models of code

## Abstract Background: most current adversarial attack methods on pre-trained models of code ignore that perturbations should be natural to human judges (naturalness requirement ......

Paper Walkthrough (MTEM): "Meta-Tsallis-Entropy Minimization: A New Self-Training Approach for Domain Adaptation on Text Classification"

Paper information. Title: Meta-Tsallis-Entropy Minimization: A New Self-Training Approach for Domain Adaptation on Text Classific ......

print ("标签为" + str(train_set_y[:, index]) + ", 这是一个'" + classes[np.squeeze(train_set_y[:, index])].decode("utf-8") + "' 图片.")

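This line uses the print function to output a message. The message is built by concatenating several strings, including the value at the given index of the train_set_y array and the value at the given index of the classes array. First, "标签为" ("the label is") is a string literal. Next, str(train_set_y[:, index]) means fetching train ......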
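The pieces of that expression can be inspected one at a time. The sketch below assumes NumPy is available and uses made-up stand-ins for train_set_y and classes (a 1 x 4 label array and two byte-string class names); the real arrays come from the dataset and are not shown in the excerpt. The message text is written in English here for readability.

```python
import numpy as np

# Hypothetical stand-ins for the arrays used in the original line.
train_set_y = np.array([[0, 1, 1, 0]])      # shape (1, 4): one row of labels
classes = np.array([b"non-cat", b"cat"])    # class names stored as bytes
index = 2

label = train_set_y[:, index]               # column `index`, a 1-element array
label_text = str(label)                     # the array printed as text
class_bytes = classes[np.squeeze(label)]    # squeeze drops the length-1 axis
class_name = class_bytes.decode("utf-8")    # bytes -> str

message = "Label " + label_text + ", this is a '" + class_name + "' picture."
print(message)
```

Because `train_set_y[:, index]` keeps one axis, its string form includes brackets; `np.squeeze` is what turns it into a plain index into `classes`.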

train_set_y_orig = train_set_y_orig.reshape((1, train_set_y_orig.shape[0]))

This line reshapes the train_set_y_orig array into a new shape and assigns the result back to the train_set_y_orig variable. First, train_set_y_orig.shape[0] gets the size of the first dimension of the train_set_y_orig array. Next, (1, train_set_y_o ......
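A small example makes the effect of the reshape visible. It assumes NumPy is available and uses a made-up label vector of length 5 in place of the real train_set_y_orig, whose contents the excerpt does not show.

```python
import numpy as np

# Hypothetical label vector standing in for the real data: shape (5,)
train_set_y_orig = np.array([0, 1, 1, 0, 1])
shape_before = train_set_y_orig.shape

# Same statement as in the post: turn the flat vector into a single row.
train_set_y_orig = train_set_y_orig.reshape((1, train_set_y_orig.shape[0]))
shape_after = train_set_y_orig.shape

print(shape_before, "->", shape_after)
```

The values are unchanged; only the shape goes from a flat vector of m labels to a 1 x m row.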

train_dataset = h5py.File('datasets/train_catvnoncat.h5', "r")

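This line uses File from the h5py library to open an HDF5 file and assigns the result to the variable train_dataset. First, 'datasets/train_catvnoncat.h5' is the path of the HDF5 file. Next, "r" means the file is opened in read-only mode. Finally, the h5py.File() call opens ......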

train_set_x_orig = np.array(train_dataset["train_set_x"][:])

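This line converts the value stored under the "train_set_x" key of the dictionary-like train_dataset object into a NumPy array and assigns it to the variable train_set_x_orig. First, train_dataset["train_set_x"] means fetching from train_dataset the value whose key is "t ......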

Proj CDeepFuzz Paper Reading: An Extensive Study on Pre-trained Models for Program Understanding and Generation

## Abstract ## 1. Intro ## 2. Background ### 2.1 Program Understanding and Generation Tasks ### 2.2 NL-PL Pre-Trained Models

"PERL: Pivot-based Domain Adaptation for Pre-trained Deep Contextualized Embedding Models"

Paper information. Title: PERL: Pivot-based Domain Adaptation for Pre-trained Deep Contextualized Embedding Models. Authors: Eyal Ben-D ......

Training Your Own LoRAs

https://tfwol.github.io/text-generation-webui/Training-LoRAs.html#format-files text-generation-webui Training Your Own LoRAs The WebUI seeks to make t ......

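Refining Language Models: Exploring New Approaches to LLM Training, Fine-tuning, and Reward Model Techniques

# Refining Language Models: Exploring New Approaches to LLM Training, Fine-tuning, and Reward Model Techniques LLMs Trainer is a repository meant to help people train large models from scratch. It was originally based on [Open-Llama](https://github.com/beichao1314/Open-Llama) and extends it. ......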

Mixture-of-Domain-Adapters: Decoupling and Injecting Domain Knowledge to Pre-trained Language Mod...

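### 1. Abstract Pre-trained language models (PLMs) show an excellent ability to understand text in the general domain while performing poorly in specific domains. **Although continued pre-training on a large domain-specific corpus is effective, tuning all parameters on the domain is costly.** In this paper, we investigate whether PLMs can be adapted effectively by tuning only a few parameters. Specifically, we take the Tran ......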

Paper Walkthrough (SentiX): "SentiX: A Sentiment-Aware Pre-Trained Model for Cross-Domain Sentiment Analysis"

Paper information. Title: SentiX: A Sentiment-Aware Pre-Trained Model for Cross-Domain Sentiment Analysis. Authors: Jie Zhou, Junfeng T ......

Code for Building catvsdog_path_dataset.tfrecords; the finished dataset is saved at: E:\catanddog\train1\catvsdog_path_dataset.tfrecords

# -*- coding:utf-8 -*-
##PROJECT_NAME:081200
#Name:01
#Author:GG
#Date:2023/8/12
import tensorflow as tf
import os
import numpy as np
import cv2
file_dir = " ......

Paper Walkthrough (TAT): "Transferable Adversarial Training: A General Approach to Adapting Deep Classifiers"

Paper information. Title: Transferable Adversarial Training: A General Approach to Adapting Deep Classifiers. Authors: Hong Liu, Mingsh ......

UESTC 2023 Summer Training #23 for div2/2022-2023 ACM-ICPC Latin American Regional Programming Contest

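# Preface Today's contest had a huge number of giveaway problems, in sharp contrast to yesterday's. Unfortunately I slacked off for too long in the late stage, then picked up problem B and failed to debug it in time. The most annoying part is that after the contest I found I had simply overlooked the output format: a problem that could have passed on the first try went unsolved. A real pity, but a lesson learned. **From now on, always pay special attention to the input and output formats** # A. Asking for M ......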

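When Training Accuracy Is Lower Than Test Accuracy

While running experiments today I hit a case where training acc was lower than test acc. After looking through some material, I found the following possible causes: 1. Dropout is in use: dropout is applied during training, but at test time there is no dropout. 2. The learning rate is too large (this was my case). 3. The dataset is too small ......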
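The first cause can be illustrated with a small sketch of inverted dropout, one common formulation. The function `dropout` and its arguments are hypothetical and not taken from any particular framework. In training mode some activations are zeroed at random, so accuracy is measured on a handicapped network, while in test mode the input passes through unchanged.

```python
import random


def dropout(activations, drop_prob, training, rng):
    """Inverted dropout on a list of floats (illustrative only)."""
    if not 0.0 <= drop_prob < 1.0:
        raise ValueError("drop_prob must be in [0, 1)")
    if not training or drop_prob == 0.0:
        return list(activations)  # test mode: nothing is dropped
    keep_prob = 1.0 - drop_prob
    # Keep each unit with probability keep_prob and rescale the survivors
    # so the expected activation is the same as in test mode.
    return [x / keep_prob if rng.random() < keep_prob else 0.0
            for x in activations]


rng = random.Random(0)
activations = [1.0] * 10
train_out = dropout(activations, 0.5, training=True, rng=rng)
eval_out = dropout(activations, 0.5, training=False, rng=rng)
print("train:", train_out)
print("eval: ", eval_out)
```

If the training accuracy is logged while dropout is active and the test accuracy is computed with it switched off, the two numbers are not measured on the same effective network, which is one way the gap described above can arise.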

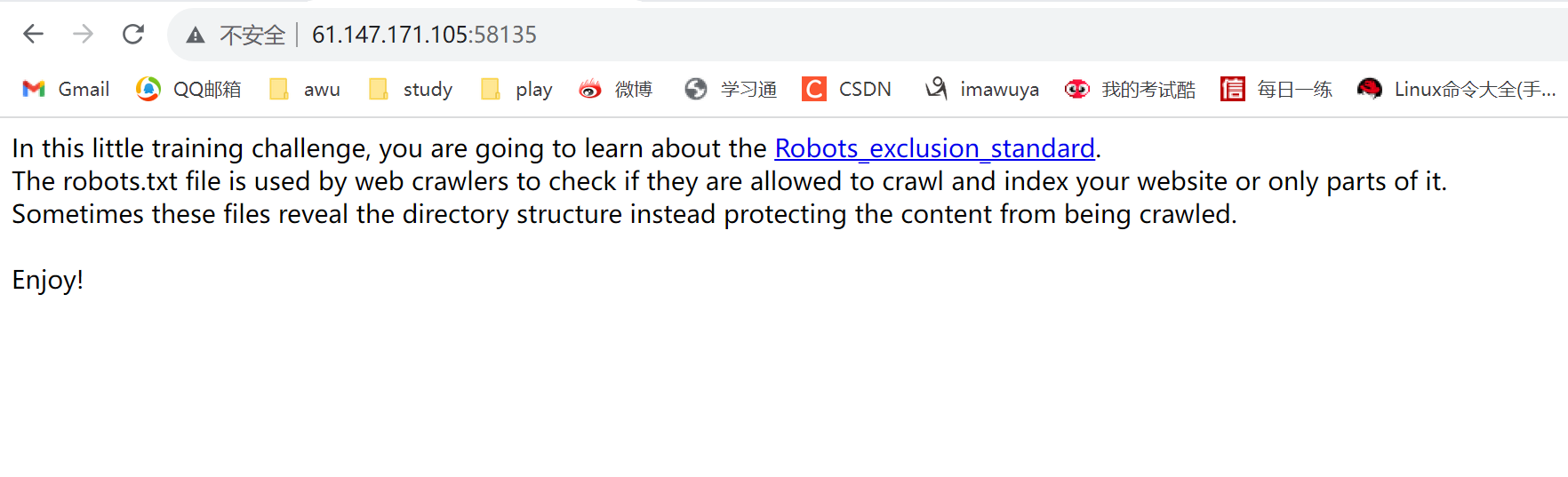

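[Attack and Defense World (攻防世界)] Training-WWW-Robots

# Information Gathering Translation: In this little training challenge, you will learn about the Robots Exclusion Standard. The robots.txt file is used by web ......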
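As a rough illustration of how the exclusion rules in a robots.txt file are read, the sketch below feeds a made-up file to Python's standard `urllib.robotparser` module. The file contents, the host example.com, and both paths are hypothetical and are not taken from the challenge.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: every crawler is asked to stay out of /private/.
robots_txt = """\
User-agent: *
Disallow: /private/
"""

parser = RobotFileParser()
parser.parse(robots_txt.splitlines())

allowed = parser.can_fetch("*", "https://example.com/index.html")
blocked = parser.can_fetch("*", "https://example.com/private/flag.html")
print("index allowed:", allowed)
print("private allowed:", blocked)
```

The file is only a request to well-behaved crawlers. Anyone can read it, so its Disallow lines also advertise which paths the site owner would rather keep out of search results.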

A Novel Noise Injection-based Training Scheme for Better Model Robustness

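Formal notice: see the title for the original paper; in case of any infringement, please contact the author and this post will be taken down! https://arxiv.org/abs/2302.10802 ......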

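Solution: CF1501A [Alexey and Train]

posted on 2021-03-13 21:57:02 | under Solutions | [source](https://www.luogu.com.cn/blog/_post/319230) A simple simulation problem that tests contestants' ability to read the statement ~~and to use Google Translate~~. First define $now=0$; the final result we compute ......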
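A simulation of this kind might look like the sketch below. The excerpt is cut off before it states the rules, so the rules encoded here are an assumed reading of the CF1501A statement rather than the post's own code: travelling to station i takes a_i - b_{i-1} + tm_i (with b_0 = 0), the train stays at least ceil((b_i - a_i) / 2), and it never leaves before b_i. The function name `arrival_at_last_station` is hypothetical; `now` follows the variable named in the post.

```python
def arrival_at_last_station(a, b, tm):
    """Simulate the trip and return the arrival time at the last station.

    a[i], b[i]: scheduled arrival and departure at station i
    tm[i]:      extra travel time on the leg that ends at station i
    """
    now = 0        # current time, starting at the terminal
    prev_b = 0     # scheduled departure of the previous station
    arrival = 0
    for ai, bi, ti in zip(a, b, tm):
        arrival = now + (ai - prev_b) + ti   # actual arrival at this station
        min_stay = (bi - ai + 1) // 2        # ceil((bi - ai) / 2)
        now = max(arrival + min_stay, bi)    # actual departure time
        prev_b = bi
    return arrival


print(arrival_at_last_station([2, 10], [4, 12], [0, 2]))
```

Only the arrival at the last station matters, so the departure computed for it is simply ignored.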