Reinforcement

强化学习研究方向(研究领域)现有的不足(短板、无法落地性) —— Why You (Probably) Shouldn’t Use Reinforcement Learning

外文原文: Why You (Probably) Shouldn’t Use Reinforcement Learning 地址: https://towardsdatascience.com/why-you-shouldnt-use-reinforcement-learning-163bae193 ......

《Visual Analytics for RNN-Based Deep Reinforcement Learning》

摘要 准备开题报告,整理一篇 2022 年TOP 论文。 论文介绍 该论文是一篇 2022 年,有关可视化分析基于RNN 的深度强化学习训练过程的文章。一作是 Junpeng Wang ,作者主要研究领域就是:visualization, visual analytics, explainable ......

Can Pre-Trained Text-to-Image Models Generate Visual Goals for Reinforcement Learning

概述 Learning form the Void (LfVoid) 根据给定的language instruction对observation进行appearance-based and structure-based修改得到goal images,为RL提供奖励信号。提升了example-bas ......

【略读论文|时序知识图谱补全】DREAM: Adaptive Reinforcement Learning based on Attention Mechanism for Temporal Knowledge Graph Reasoning

会议:SIGIR,时间:2023,学校:苏州大学计算机科学与技术学院,澳大利亚昆士兰布里斯班大学信息技术与电气工程学院,Griffith大学金海岸信息通信技术学院 摘要: 原因:现在的时序知识图谱推理方法无法生成显式推理路径,缺乏可解释性。 方法迁移:由于强化学习 (RL) 用于传统知识图谱上的多跳 ......

Reinforcement Learning Chapter 1

本文参考《Reinforcement Learning:An Introduction(2nd Edition)》Sutton. 强化学习是什么 传统机器学习方法可分为有监督与无监督两类; 有监督学习 > 任务驱动 无监督学习 > 数据驱动 强化学习则可看作机器学习的“第三范式” > 模拟驱动,具体 ......

TRL(Transformer Reinforcement Learning) PPO Trainer 学习笔记

(1) PPO Trainer TRL支持PPO Trainer通过RL训练语言模型上的任何奖励信号。奖励信号可以来自手工制作的规则、指标或使用奖励模型的偏好数据。要获得完整的示例,请查看examples/notebooks/gpt2-sentiment.ipynb。Trainer很大程度上受到了原 ......

Introduction of Deep Reinforcement Learning

Reading Notes about the book Deep Reinforcement Learning written by Aske Plaat Recently, I have been reading the book Deep Reinforcement Learning writ ......

Tabular Value-Based Reinforcement Learning

Reading Notes about the book Deep Reinforcement Learning written by Aske Plaat Recently, I have been reading the book Deep Reinforcement Learning writ ......

Reinforcement Learning 学习笔记 1

什么是强化学习(reinforcement learning)? 假设一个场景,一个智能体(agent) 和环境(env)交互,智能体基于当前环境\(S_t\)每产生一个动作\(A_t\),环境便给它一个反馈,也被称为奖励(reward)\(R_{t+1}\), 随后,智能体的状态变为\(S_{t+ ......

Pink Noise Is All You Need: Colored Noise Exploration in Deep Reinforcement Learning

郑重声明:原文参见标题,如有侵权,请联系作者,将会撤销发布! Published as a conference paper at ICLR 2023 ABSTRACT ......

Efficient Off-Policy Meta-Reinforcement Learning via Probabilistic Context Variables

郑重声明:原文参见标题,如有侵权,请联系作者,将会撤销发布! Proceedings of the 36th International Conference on Machine Learning, PMLR 97:5331-5340, 2019 ......

Meta-Reinforcement Learning of Structured Exploration Strategies

郑重声明:原文参见标题,如有侵权,请联系作者,将会撤销发布! NeurIPS 2018 ......

《Decision Transformer: Reinforcement Learning via Sequence Modeling》论文学习

一、Introduction 先前的研究工作表明,Transformer可以对处于高维分布的语义概念进行大规模建模抽象,比较典型地体现如: 基于自然语言的零样本泛化(zero-shot generalization) 分布外图像生成(out-of-distribution image generat ......

Improved deep reinforcement learning for robotics through distribution-based experience retention

**发表时间:**2016(IROS 2016) **文章要点:**这篇文章提出了experience repl ......

The importance of experience replay database composition in deep reinforcement learning

**发表时间:**2015(Deep Reinforcement Learning Workshop, NIPS ......

概述增强式学习(Reinforcement Learning)

概述增强式学习(Reinforcement Learning) Supervised Learning(自监督学习):告诉机器输入和输出,用有标注的训练资料训练出的Network Reinforcement Learning(增强式学习):给机器一个输入,我们不知道最佳输出是什么(适用于标注困难或者 ......

Unified Conversational Recommendation Policy Learning via Graph-based Reinforcement Learning

图的作用: 图结构捕捉不同类型节点(即用户、项目和属性)之间丰富的关联信息,使我们能够发现协作用户对属性和项目的偏好。因此,我们可以利用图结构将推荐和对话组件有机地整合在一起,其中对话会话可以被视为在图中维护的节点序列,以动态地利用对话历史来预测下一轮的行动。 由四个主要组件组成:基于图的 MDP ......

粗读Multi-Task Recommendations with Reinforcement Learning

论文: Multi-Task Recommendations with Reinforcement Learning 地址: https://arxiv.org/abs/2302.03328 # 摘要 In recent years, Multi-task Learning (MTL) has yi ......

Regret Minimization Experience Replay in Off-Policy Reinforcement Learning

**发表时间:**2021 (NeurIPS 2021) **文章要点:**理论表明,更高的hindsight TD error,更加on policy,以及更准的target Q value的样本应该有更高的采样权重(The theory suggests that data with highe ......

Effective Diversity in Population-Based Reinforcement Learning

**发表时间:**2020 (NeurIPS 2020) **文章要点:**这篇文章提出了Diversity v ......

Spectrum Random Masking for Generalization in Image-based Reinforcement Learning

郑重声明:原文参见标题,如有侵权,请联系作者,将会撤销发布! ......

Faster sorting algorithms discovered using deep reinforcement learning

## 摘要: - `AlphaDev`模型优化排序算法,将排序算法提速70%。通过强化学习,AlphaDev发现了更加有效的算法,直接超越了科学家和工程师们几十年来的精心打磨。现在,新的算法已经成为两个标准C++编码库的一部分,每天都会被全球的程序员使用数万亿次。 ## 介绍 - 优化目标为排序算法 ......

Reinforcement learning

如图1所示,强化学习中,state是环境的状态,就是observation。 图1 强化学习 一、Policy based approach learning an actor The policy based approach is to learn an actor (agent or poli ......

Reward Modelling(RM)and Reinforcement Learning from Human Feedback(RLHF)for Large language models(LLM)技术初探

Reward Modelling(RM)and Reinforcement Learning from Human Feedback(RLHF)for Large language models(LLM)技术初探 ......

Reinforcement Learning之Q-Learning - Python实现

- **算法特征** ①. 以真实reward训练Q-function; ②. 从最大Q方向更新policy $\pi$ - **算法推导** **Part Ⅰ: RL之原理** 整体交互流程如下, 定义策略函数(policy)$\pi$, 输入为状态(state)$s$, 输出为动作(action ......

April 2023-Memory-efficient Reinforcement Learning with Value-based Knowledge Consolidation

本文基于深度q网络算法提出了记忆高效的强化学习算法来缓解这一问题。通过将目标q网络中的知识整合Knowledge Consolidation到当前q网络中,所提算法减少了遗忘并保持了较高的样本效率。 ......

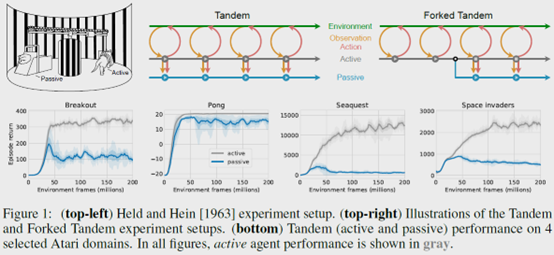

The Difficulty of Passive Learning in Deep Reinforcement Learning

**发表时间:**2021(NeurIPS 2021) **文章要点:**这篇文章提出一个tandem learni ......

Off-Policy Deep Reinforcement Learning without Exploration

**发表时间:**2019(ICML 2019) **文章要点:**这篇文章想说在offline RL的setting下,由于外推误差(extrapolation errors)的原因,标准的off-policy算法比如DQN,DDPG之类的,如果数据的分布和当前policy的分布差距很大的话,那就 ......

Jan 2023-Prioritizing Samples in Reinforcement Learning with Reducible Loss

#1 Introduction 本文建议根据样本的可学习性进行抽样,而不是从经验回放中随机抽样。如果有可能减少代理对该样本的损失,则认为该样本是可学习的。我们将可以减少样本损失的数量称为其可减少损失(ReLo)。这与Schaul等人[2016]的vanilla优先级不同,后者只是对具有高损失的样本给 ......

论文阅读笔记《Training Socially Engaging Robots Modeling Backchannel Behaviors with Batch Reinforcement Learning》

Training Socially Engaging Robots Modeling Backchannel Behaviors with Batch Reinforcement Learning 训练社交机器人:使用批量强化学习对反馈信号行为进行建模 发表于TAC 2022。 Hussain N, ......